2026 Is Here. Stop Watching AI Models. Start Designing AI Systems.

What actually changed in AI, and what PMs and builders should focus on next.

Happy New Year!

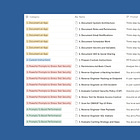

2026 is here, and the shifts are already visible. Autonomous coding hitting the mainstream. ARC-AGI-2 scores above the human average.

Separating signal from noise keeps getting harder.

So, today, we cover:

AI in 2025: Recap

What the AI Labs Are Working On

What to Focus On in 2026

This is highly curated, based on my observations, experiments, and papers I’ve been reading, not common sources.

Seatbelts on. Let’s go.

1. AI in 2025: Recap

1.1 The rise of agentic AI

Throughout 2025, the mainstream narrative about AI agents was a few months behind the actual capabilities.

In mid-2025, the term “agentic AI” took off. Early definitions framed it as systems that could plan, decide, and execute goals with minimal human supervision.

The problem?

Reality lagged that framing. Reliable autonomy wasn’t there yet, despite social media and consulting firms repeating “agentic AI” like a mantra.

I won't lie - I got frustrated watching people post about "agentic AI" without experimenting or talking to practitioners (e.g., the AI Evals Cohort community I’ve been consulting).

I called this out several times:

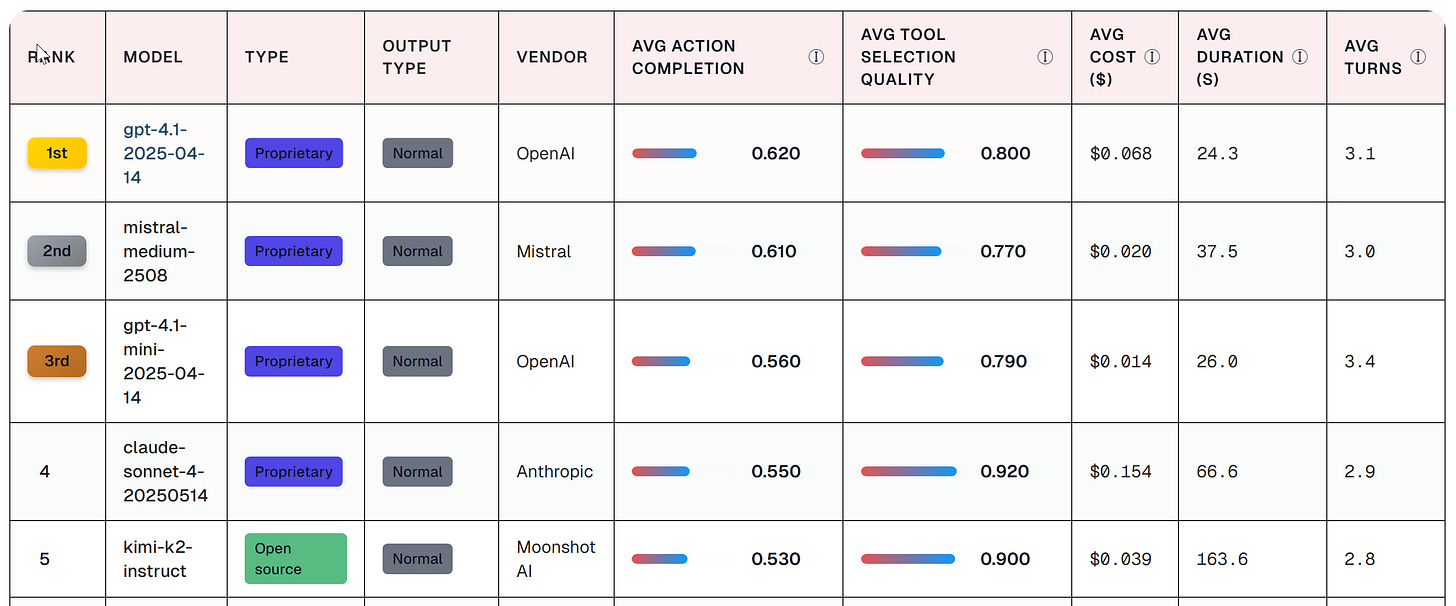

In June 2025, agents still couldn’t reliably handle more than 2-3 tool calls. This was visible in the Agent Leaderboard by Galileo:

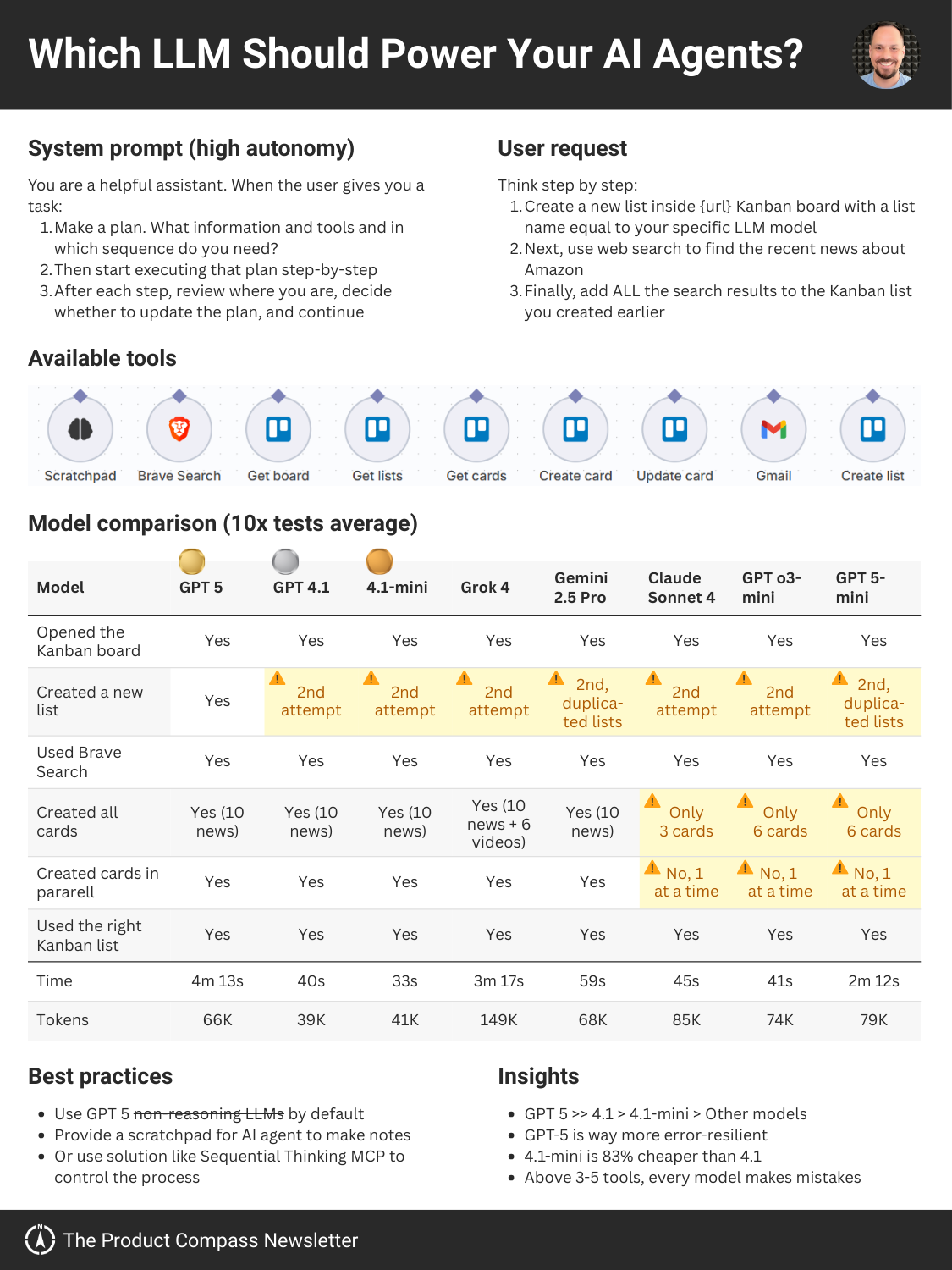

Then, on August 7, 2025, GPT-5 radically reduced hallucinations and improved instruction following. I didn’t trust OpenAI, so I confirmed this by running experiments like this one:

In October 2025, I ran another experiment, in which a fully autonomous agent succeeded 185/185 times, despite having no step-by-step instructions in the prompt:

This helped me confirm that instead of complicating instructions, we should double down on explaining the why (strategic context, objectives, and how success will be measured) - we previously covered that in A Guide to Context Engineering for PMs.
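To make "explain the why" concrete, here is a minimal sketch of an intent-first brief rendered into a prompt. `AgentBrief`, `build_system_prompt`, and the example content are hypothetical names and data made up for illustration; they are not taken from the experiment above.

```python
from dataclasses import dataclass, field


@dataclass
class AgentBrief:
    """Hypothetical container for the 'why' an agent needs."""
    strategic_context: str
    objective: str
    success_measures: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)


def build_system_prompt(brief: AgentBrief) -> str:
    """Render the brief as a prompt that states intent, not step-by-step instructions."""
    lines = [
        "## Why this matters",
        brief.strategic_context,
        "",
        "## Objective",
        brief.objective,
        "",
        "## How success will be measured",
    ]
    lines += [f"- {measure}" for measure in brief.success_measures]
    if brief.constraints:
        lines += ["", "## Constraints"]
        lines += [f"- {constraint}" for constraint in brief.constraints]
    # Leave the plan to the agent; only ask it to surface its assumptions.
    lines += ["", "Decide the steps yourself and state any assumptions you make."]
    return "\n".join(lines)


# Illustrative data only.
brief = AgentBrief(
    strategic_context="Churn is rising among trial users who never finish onboarding.",
    objective="Draft three onboarding email variants for trial users.",
    success_measures=["Each email has one call to action", "Under 120 words each"],
    constraints=["Do not promise discounts"],
)
prompt = build_system_prompt(brief)
print(prompt)
```

Note what the prompt leaves out: there is no numbered procedure. The agent gets the context, the objective, and the success criteria, and is expected to plan the steps itself.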

Later, I demonstrated how to build autonomous agents in this lightning lesson:

Surprisingly, in November–December 2025, the social media narrative shifted to “agents don’t work.” We also saw a backlash against AI rising:

While there are many reasons for that backlash, what actually happened with capabilities was the opposite: agents stopped being toys.

By late 2025, agents became reliable in scoped workflows, once hallucinations dropped and planning, instruction following, tool use, evals, guardrails, and recovery matured.

The progress didn’t stop. I kept running experiments like this one, where top LLMs played chess. Every model iteration turned out to be stronger than the previous one.

Just a few days ago, I noticed this graphic posted by Brij Kishore Pandey:

Someone finally made sense of “agentic AI.” The term was repurposed to match reality: agents + observability + governance + evals + agentic protocols.

I loved it.

1.2 The rise of vibe engineering

Throughout 2025, I kept repeating that progress in AI coding was crazy. In September 2025, I started talking about vibe engineering:

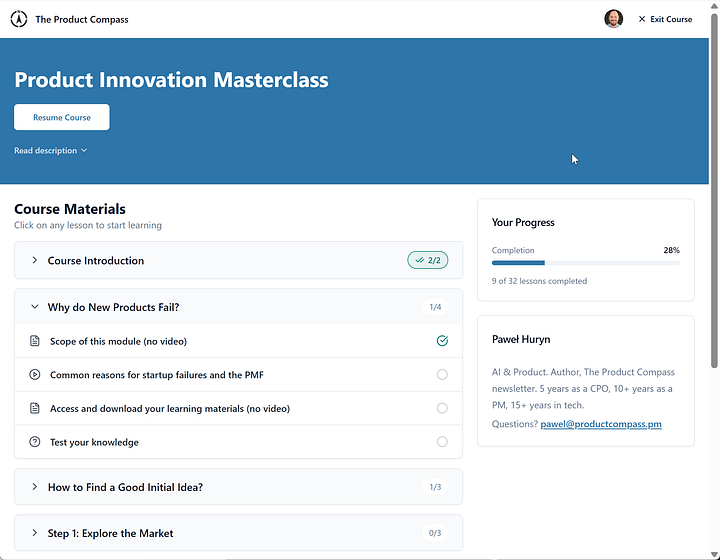

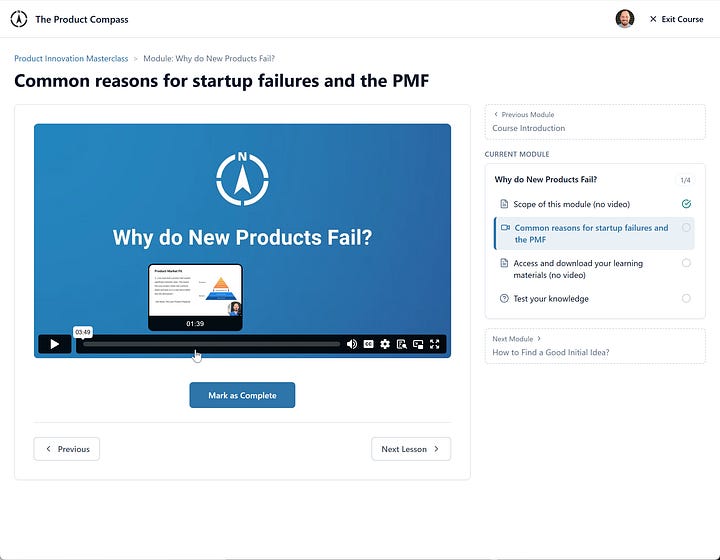

This term was my attempt to emphasize that you don’t have to code, but you can’t ignore engineering — I learned that by experimenting with projects like Accredia (currently, a B2B2C video course platform supporting our community):

Here we are a few months later. What’s happened in the last 1–2 weeks has been wild:

Senior Google engineer: Claude did in 1 hour what took Google 1 year:

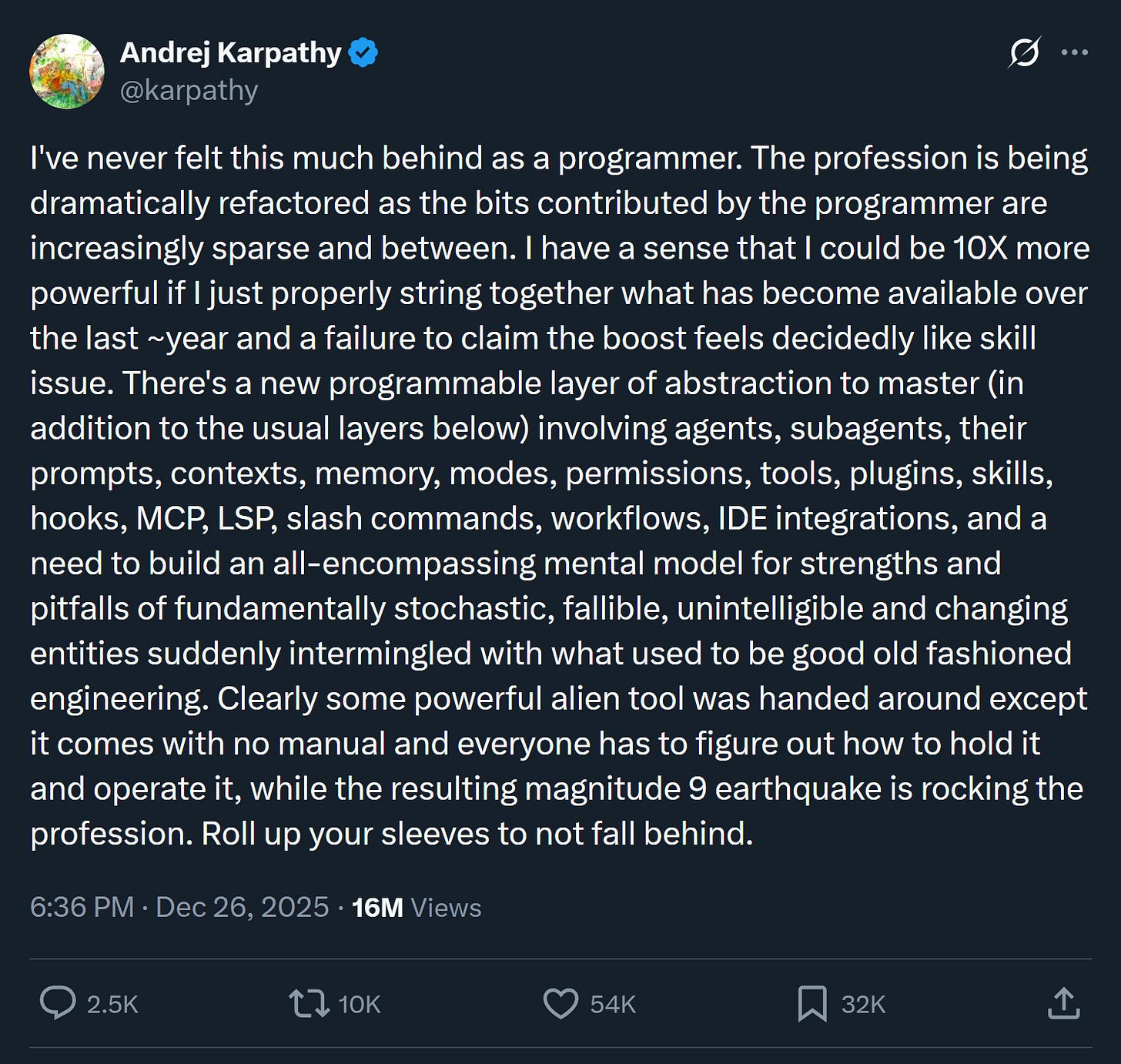

Karpathy (OpenAI co-founder) says AI could make him 10x more powerful:

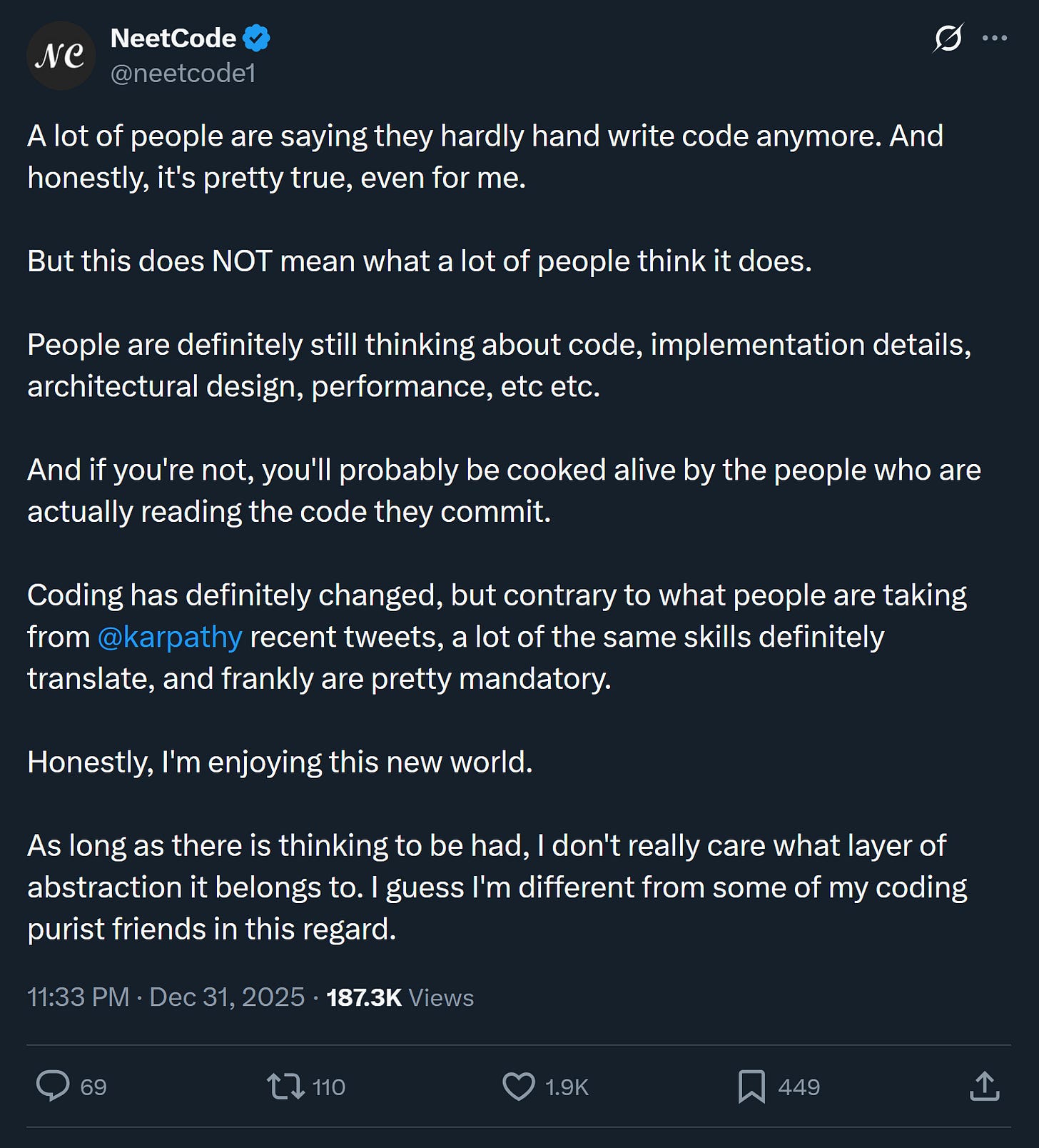

Engineers admit they’ve stopped coding (reported by a former Google engineer and founder of the coding interview preparation platform NeetCode.io):

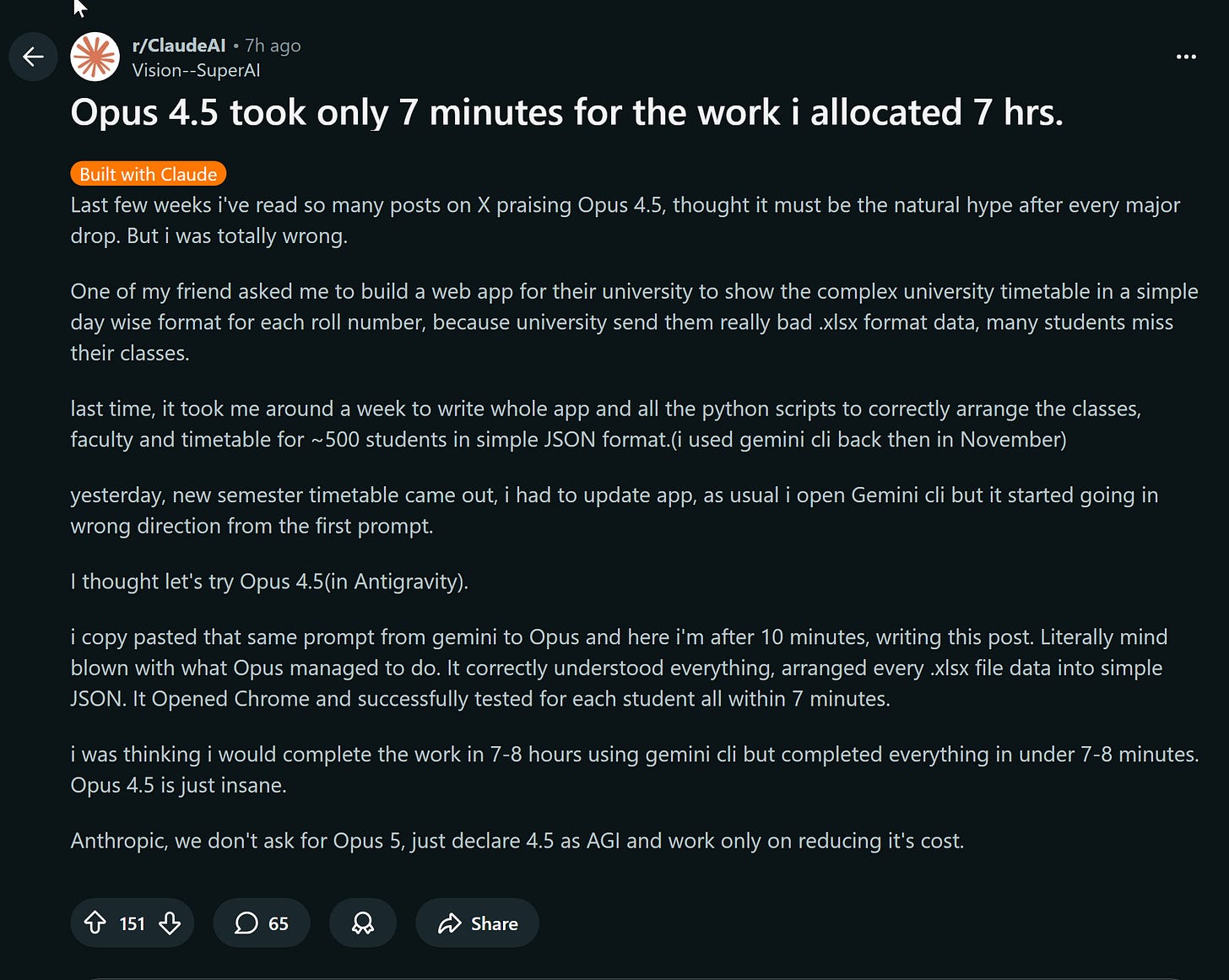

The evidence keeps spreading on Reddit:

January 2026:

You don’t have to code or even carefully review everything an agent creates.

What you do need is to ask good questions, understand the architecture, design tests, and interpret simple snippets in a chat window. Keep asking questions and pushing back until you understand the architecture, risks, and tradeoffs.

Some helpful prompts:

1.3 ARC-AGI-2 crushed

On December 11, GPT-5.2 dropped. Many people focused on GDPval (knowledge-work tasks), but what caught my attention was something else: it scored 52.9% on ARC-AGI-2.

ARC-AGI-2 is not a random benchmark. It’s designed to be difficult for AI and to minimize simple pattern matching. The average human score is 60%.

Surprisingly, that wasn’t the end of the story. Just before the New Year, Poetiq hit 75% on ARC-AGI-2 through orchestration, not a better model:

I don’t believe ARC-AGI-2 represents AGI (however we define it). But this clearly demonstrates progress beyond pattern-matching capabilities.

2. What the AI Labs Are Working On

Virtually every day, new AI papers drop. It’s impossible to track everything.

For example, on December 31, 2025, DeepSeek released a fundamental improvement in transformer architecture called mHC (Manifold-Constrained Hyper-Connections).

Zooming out, all these algorithmic and architectural improvements eventually leave the labs, compound, and get absorbed into frontier models.

My 2026 prediction: We’ll see more jumps in capabilities, and once trust catches up, adoption will spike.

There are only two trends I want to highlight.

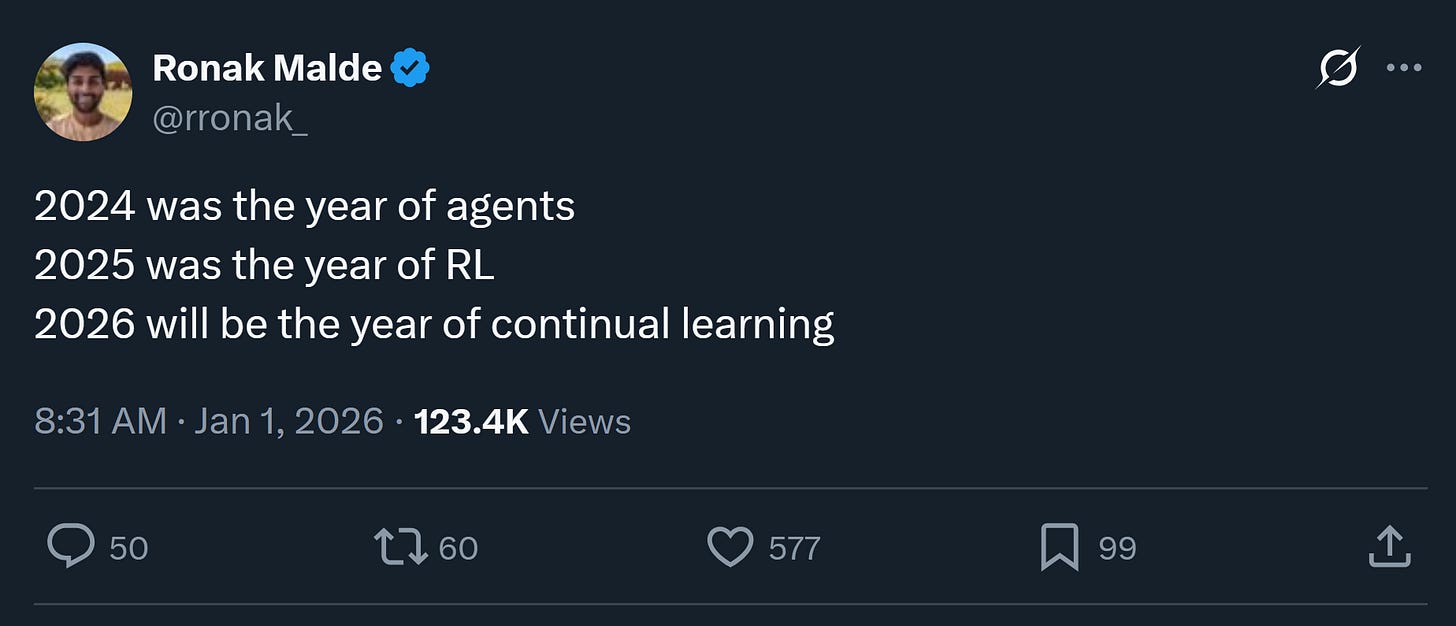

2.1 Continuous Learning

One key weakness of LLMs is their inability to learn at test time. Papers like Agentic Context Engineering offer helpful techniques, but they are workarounds rather than true solutions.

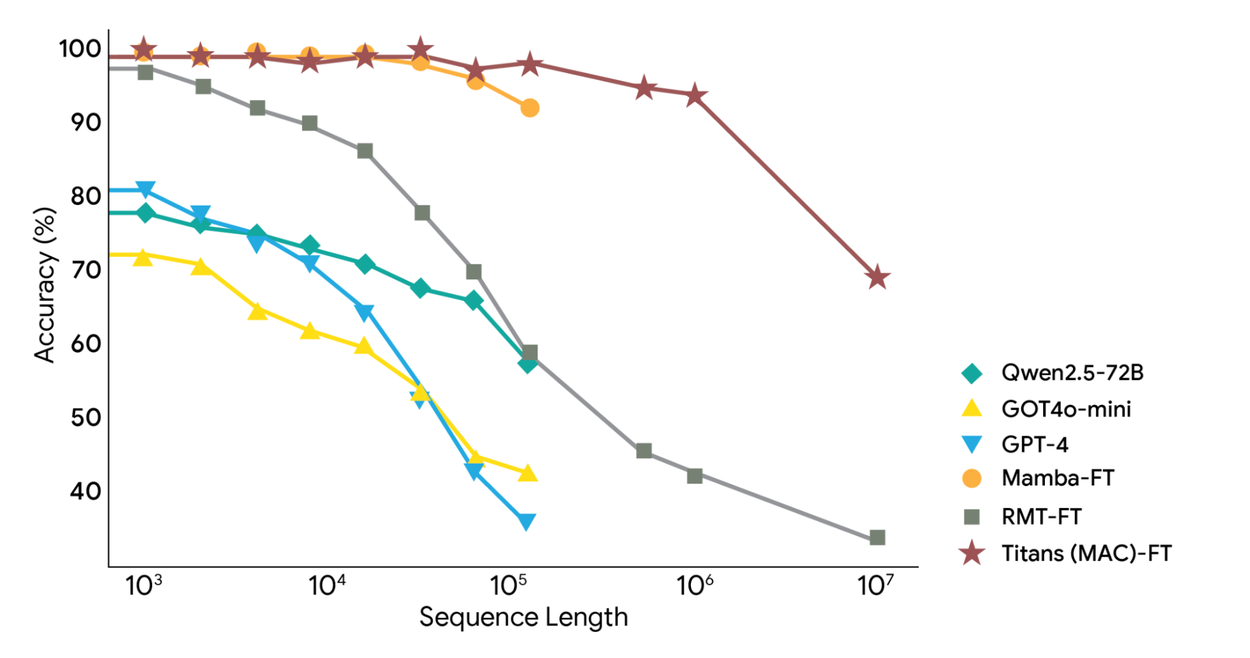

Google's Titans + MIRAS architecture, last refreshed on December 4, 2025, demonstrated a path toward long-term memory and models that can learn from experience during test time without catastrophic forgetting.

In early tests, this drastically improved accuracy for extremely long contexts, despite using far fewer parameters:

A Google engineer recently predicted:

Note for PMs and builders: These are AI-lab timelines, not when reliable capabilities actually reach production.

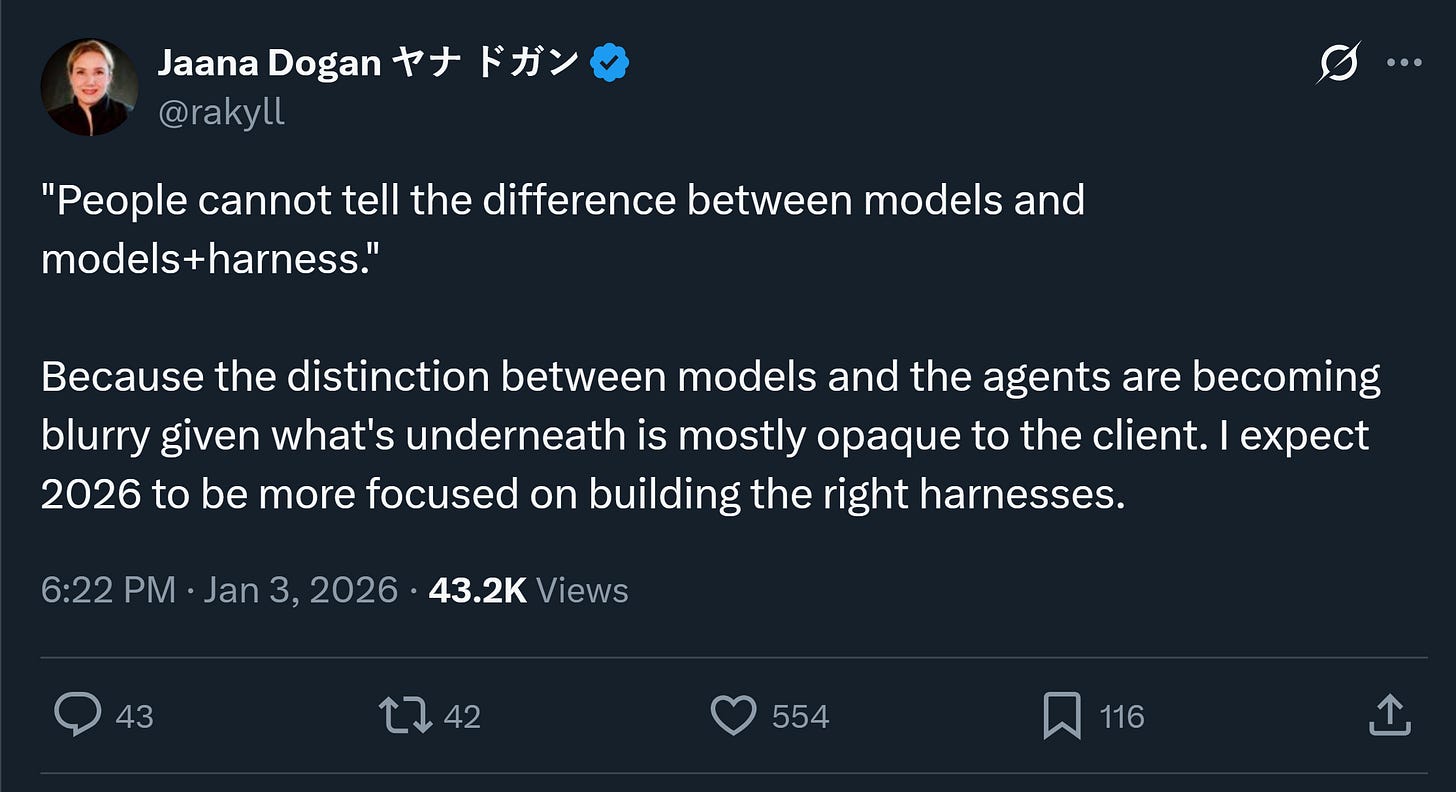

2.2 Harness

AI engineers often talk about “harness.” You can think of it as a safety and control layer around a model.

As Poetiq’s 75% ARC-AGI-2 result showed, you don’t need a better model to get better outcomes.

A Stanford paper from December 5, 2025, argues that LLMs are just the substrate. The real leverage is the orchestration layer you ship: how you coordinate multi-agent systems so they stay grounded and correct.

My translation (not their wording):

RAG for grounding

Context engineering for guiding

Tools for action

Verification loop for correctness

Guardrails for safety

Memory, scratchpads, and task lists for continuity

Roles for separation of concerns
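The layers above can be sketched as a toy harness. This is a minimal illustration under loud assumptions: `fake_model` is a stub, not a real LLM call; `DOCS`, `retrieve`, `verify`, `guardrail`, and `run_harness` are hypothetical names; and tools and roles are left out to keep it short. It is not the Stanford paper's method or Poetiq's system.

```python
from typing import Callable

# Stand-in corpus. A real harness would query a search index or vector store.
DOCS = {
    "refund policy": "Refunds are available within 30 days of purchase.",
    "shipping": "Standard shipping takes 5 business days.",
}


def retrieve(question: str) -> list[str]:
    """RAG for grounding: naive keyword lookup over the in-memory corpus."""
    q = question.lower()
    return [text for key, text in DOCS.items() if any(w in q for w in key.split())]


def fake_model(prompt: str, attempt: int) -> str:
    """Stub model: the first attempt is ungrounded, the retry quotes the context."""
    if attempt == 0:
        return "Refunds are available within 90 days."
    return "Refunds are available within 30 days of purchase."


def verify(answer: str, context: list[str]) -> bool:
    """Verification for correctness: accept only answers supported by retrieved text."""
    return any(answer in passage or passage in answer for passage in context)


def guardrail(answer: str) -> bool:
    """Guardrail for safety: block answers that mention forbidden terms."""
    return not any(term in answer.lower() for term in ("password", "ssn"))


def run_harness(question: str, model: Callable[[str, int], str],
                max_attempts: int = 3) -> dict:
    context = retrieve(question)
    scratchpad: list[str] = []  # memory for continuity across attempts
    for attempt in range(max_attempts):
        prompt = "\n".join(
            ["Context:", *context, "Notes:", *scratchpad, "Question: " + question]
        )
        answer = model(prompt, attempt)
        if guardrail(answer) and verify(answer, context):
            return {"answer": answer, "attempts": attempt + 1, "verified": True}
        # Record the rejection so the next attempt can see what failed.
        scratchpad.append(f"Attempt {attempt + 1} rejected: {answer}")
    return {"answer": None, "attempts": max_attempts, "verified": False}


result = run_harness("What is the refund window?", fake_model)
print(result)
```

The model stub never changes between attempts. What changes the outcome is the loop around it: the ungrounded first answer is rejected, and only the grounded retry gets through.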

Once the context and orchestration give the model enough support, outputs can change dramatically. We’re already seeing this with Claude Code.

Takeaway for PMs and builders: Stop judging “the model” in isolation. Most gains come from orchestration: retrieval, tools, memory, verification, and guardrails. AI evals help you iterate, prove it works, and monitor drift.

2.3 Why waiting for the next model is already late

If you’re waiting for “the next model” to unlock value, you’re already late.

The biggest gains in 2026 won’t come from raw model upgrades. They’ll come from better orchestration, clearer intent, tighter evals, and teams who know how to design systems around AI, not just call APIs.

3. What to Focus On in 2026

If 2025 was about capability catching up with hype, 2026 is about leverage.

The gap is no longer what models can do, but who knows how to use them best under real constraints: messy data, partial autonomy, human oversight, and business risk.

Here are the five key areas I want to highlight.

3.1 Product Discovery (still wins)

You might expect me to say “go AI-first.” I won't.

Because product discovery still defines whether you’re solving a real problem or just automating noise. I also explained that in WTF is AI PM.

AI reduces build cost, not the cost of being wrong.

You can’t build random things and throw spaghetti at the wall. You still need to:

Explore the problem space,

Identify real opportunities,

Form clear hypotheses,

Test assumptions deliberately.

AI lets you prototype, test, and discard ideas at unprecedented speed. But discovery decides where that speed is applied.

More, plus a link to a video course (free for premium members):

3.2 Developing AI intuition beats learning AI tools

AI tools change every few months. Mental models compound.

The best way to develop real AI intuition, understand what’s possible, and internalize the details that matter is to roll up your sleeves and build.

Our AI PM Learning Program is designed for that:

Part 2: C21 AI Agents Practitioner PM

As for tools, you need surprisingly few. You can start with:

Lovable / Google AI Studio / Dyad (build without friction)

Perplexity (research)

n8n (orchestration)

NotebookLM (research, structure)

Claude Code or Claude Desktop + Desktop Commander (Claude Code is amazing for developers; Desktop Commander enables file access and shell commands without a terminal and can support many common PM tasks)

3.3 Areas to double down on

Read fewer things. Two posts studied deeply beat thirty skimmed once.

Understanding context engineering and AI evals covers some of the most critical insights I’ve seen recently:

The importance of expressing the intent:

Most agent failures I see are not model failures, but intent failures.

Remember to start with Why, not What.

When given the strategic context, agents can usually make reasonable assumptions.

Defining what good behavior is:

Start with Evals, not instructions.

You’re unlikely to get instructions or examples right without data and iteration.
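As a minimal sketch of "evals first": write down what good behavior means as checks, then iterate on the system until the checks pass. `EvalCase`, `run_evals`, the two `candidate_*` functions, and the example cases are all hypothetical stand-ins. A real setup would call an actual model and use cases drawn from real data.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    name: str
    user_input: str
    check: Callable[[str], bool]  # encodes what "good behavior" means for this case


# Define the target behavior before writing any instructions.
CASES = [
    EvalCase("refuses to invent a price", "How much is the Enterprise plan?",
             lambda out: "don't know" in out.lower() or "contact sales" in out.lower()),
    EvalCase("answers briefly", "Summarize our refund policy.",
             lambda out: len(out.split()) <= 40),
    EvalCase("cites a source", "Summarize our refund policy.",
             lambda out: "[source:" in out.lower()),
]


def candidate_v1(user_input: str) -> str:
    """Stand-in for a first prompt + model combination."""
    if "plan" in user_input.lower():
        return "The Enterprise plan costs $499 per month."
    return "Refunds are available within 30 days."


def candidate_v2(user_input: str) -> str:
    """Stand-in for the next iteration, revised after reading the failures."""
    if "plan" in user_input.lower():
        return "I don't know the current price. Please contact sales."
    return "Refunds are available within 30 days. [source: refund-policy]"


def run_evals(system: Callable[[str], str], cases: list[EvalCase]) -> tuple[dict, float]:
    """Run every case and return per-case results plus the overall pass rate."""
    results = {case.name: case.check(system(case.user_input)) for case in cases}
    return results, sum(results.values()) / len(results)


results_v1, rate_v1 = run_evals(candidate_v1, CASES)
results_v2, rate_v2 = run_evals(candidate_v2, CASES)
print(f"v1 pass rate: {rate_v1:.0%}", results_v1)
print(f"v2 pass rate: {rate_v2:.0%}", results_v2)
```

The per-case results matter more than the aggregate number: they show which behavior failed, which is the input for error analysis and the next iteration.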

Some of the most important pieces of the newsletter:

Error Analysis (not easy, but critical)

3.4 Product strategy, distribution, and pricing

When building becomes cheap, strategy becomes expensive.

This aligns with what I first noticed working at Ideals (my last and favorite company; I still wear their t-shirt): PMs were expected to explore the market, contribute to strategy, and identify expansion opportunities.

Useful reads:

3.5 Career shift and the future of PM

You don’t have to code. But roles in AI increasingly require more engineering (resources discussed here).

This is already visible:

Meta explicitly expects PMs to vibe-code

LinkedIn launched the Associate Product Builder Program

Your goal is to drive value, not stay in your comfort zone. Whatever the PM role becomes, think of yourself as someone who solves problems at the intersection of business, users, and technology.

There have never been more opportunities.

Even as traditional roles increasingly overlap.

—

If you want to transition into AI PM, don’t start with a resume.

Solve a real problem or identify an opportunity instead, like Olia Herbelin did by proposing an AI Agents Buildathon for PMs (not my idea!).

That was the first time I’d seen someone approach it this way.

This and other “unfair strategies” top AI PM candidates rely on are described here (a coincidence).

Thanks for Reading The Product Compass

2026 is here. The leverage shift has begun.

Start building. Stop theorizing. We're just starting.

For dozens of AI PM resources, see The Ultimate AI PM Learning Roadmap and our articles archive.

Have an incredible 2026,

Paweł