A Guide to Context Engineering for PMs

Context engineering is the new prompt engineering. And it’s becoming the most critical AI skill.

Hey, Paweł here. Welcome to the freemium edition of The Product Compass.

It’s the #1 AI PM newsletter with practical tips and step-by-step guides read by 119K+ AI PMs.

Updated: 11/2/2025

Just a few weeks ago, everyone in AI started talking about context engineering as a better alternative to prompt engineering.

A recent post by Tobi Lutke, CEO of Shopify, shared by Andrej Karpathy (ex-OpenAI):

Understanding context engineering is becoming the most critical skill for anyone working with AI systems. As Aaron Levie (CEO of Box) puts it:

But there are many misconceptions around the term.

We discuss:

What Is Context Engineering

Context Types in Detail

Context Engineering Techniques

Where RAG Fits In

Recommended Resources

Conclusion

Let’s dive in.

1. What is Context Engineering

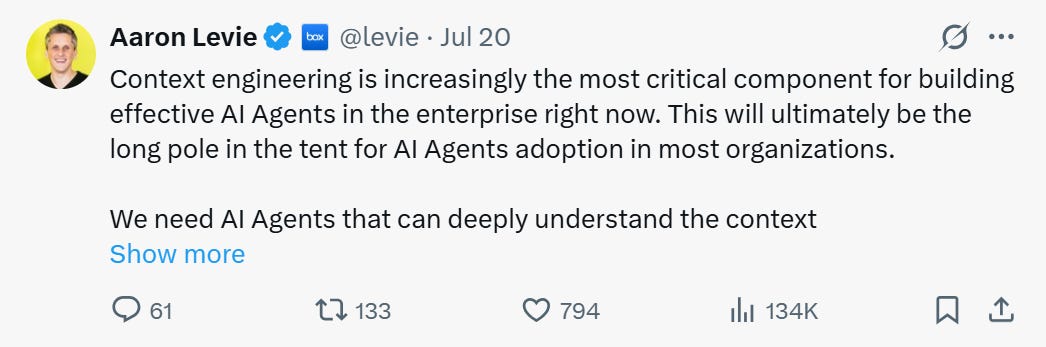

Context engineering is the art and science of building systems that fill an LLM’s (large language model’s) context window with the right information to improve its performance.

Unlike prompt engineering, which we often associate with writing better prompts, context engineering is a broader discipline that includes many activities happening before the prompt is even created.

This involves:

Providing broader context (e.g., strategy, domain, market) to enhance autonomy.

Retrieving and transforming relevant knowledge (e.g., from external systems or other agents).

Managing memory so that an agent can remember its previous interactions, collect experiences, save user preferences, and learn from mistakes.

Ensuring an agent has the necessary tools and knows how to use them.

Providing Minimal Required Information

When preparing the context, we need to be intentional about what we want to share.

All context types fill the (limited) context window, affect the model’s performance, and consume input tokens.
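To make the budget trade-off concrete, here is a minimal sketch of packing context items into a fixed token budget by priority. It assumes a crude 4-characters-per-token estimate (real tokenizers differ), and the `ContextItem` and `pack_context` names, priorities, and sample texts are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ContextItem:
    name: str
    text: str
    priority: int  # lower number = more important

def estimate_tokens(text: str) -> int:
    # Crude heuristic (assumption): roughly 4 characters per token for English text.
    return max(1, len(text) // 4)

def pack_context(items: list[ContextItem], budget: int) -> list[ContextItem]:
    """Greedily keep the most important items that still fit within the token budget."""
    selected = []
    used = 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = estimate_tokens(item.text)
        if used + cost <= budget:
            selected.append(item)
            used += cost
    return selected

items = [
    ContextItem("instructions", "You are a PM assistant. " * 4, priority=0),
    ContextItem("strategy", "Company strategy summary. " * 8, priority=1),
    ContextItem("old_chat", "Long chat transcript. " * 40, priority=3),
]
kept = pack_context(items, budget=100)
print([i.name for i in kept])
```

Greedy dropping is the simplest policy; a real system might summarize or trim low-priority items instead of discarding them.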

2. Context Types in Detail

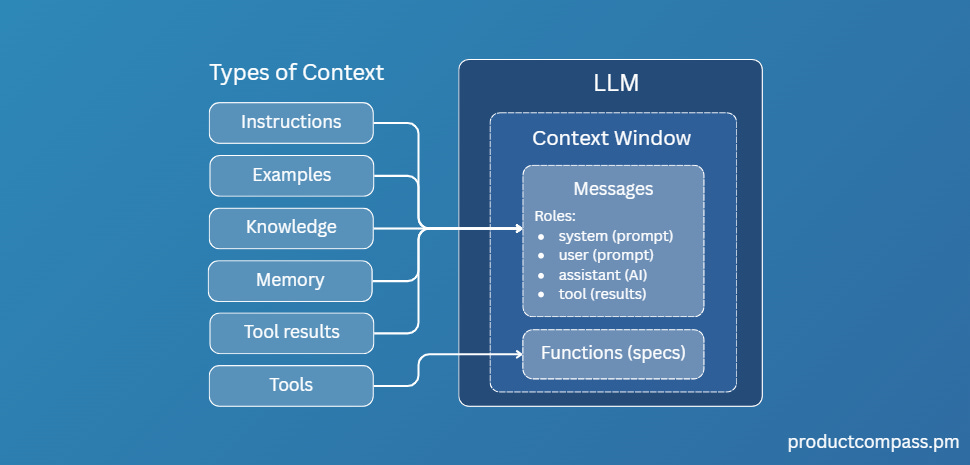

2.1 Context Types

The most common ways of formatting the context are JSON, XML, Markdown, and structured prompts.
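To see how the same context looks in different formats, here is a minimal sketch (Python standard library only) that renders one hypothetical context dictionary as JSON, XML, and Markdown. The field names and values are illustrative assumptions, not taken from the GitHub examples.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical context for a PM agent; field names are illustrative only.
context = {
    "role": "Product manager assistant",
    "objective": "Propose the next A/B test for the onboarding flow",
    "constraints": "Keep the experiment under two weeks",
}

def to_json(ctx: dict) -> str:
    return json.dumps(ctx, indent=2)

def to_xml(ctx: dict) -> str:
    # One child element per field, wrapped in a <context> root.
    root = ET.Element("context")
    for key, value in ctx.items():
        ET.SubElement(root, key).text = value
    return ET.tostring(root, encoding="unicode")

def to_markdown(ctx: dict) -> str:
    # One "## Heading" section per field.
    return "\n".join(f"## {key.title()}\n{value}" for key, value in ctx.items())

print(to_json(context))
print(to_xml(context))
print(to_markdown(context))
```

The content is identical in all three; what changes is how clearly the model can tell where one piece of context ends and the next begins.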

I prepared two examples you can analyze:

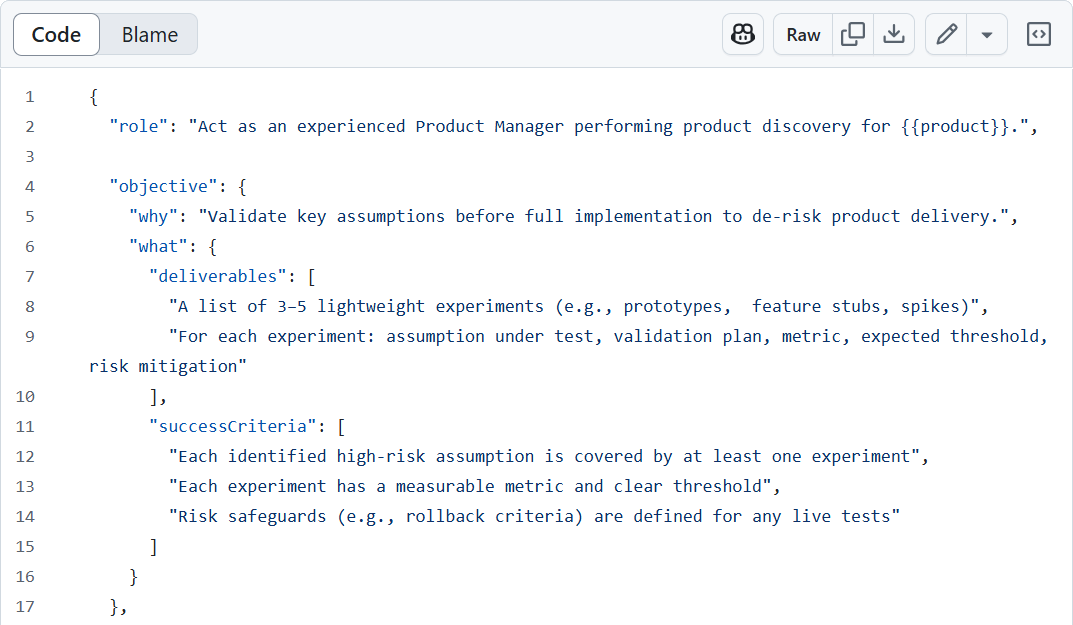

PM Agent Helping With Experiments (view as JSON on GitHub)

Lovable Bug Fixing Agent (view as XML on GitHub)

Below, I discuss types of context using the first example:

2.1.1 Instructions

The most common elements:

Role (Who): Encourage an LLM to act as a persona, e.g., a PM or a market researcher

Objective:

Why it is important (motivation, larger goal, business value)

What we are trying to achieve (desired outcomes, deliverables, success criteria)

Requirements (How):

Steps (reasoning, tasks, actions)

Conventions (style/tone, coding rules, system design)

Constraints (performance, security, test coverage, regulatory)

Response format (JSON, XML, plain text)
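The elements above can be assembled into a structured system prompt. This is a minimal sketch, assuming XML-style tags as section delimiters (one common convention, not a requirement); the `Instructions` class, tag names, and sample values are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Instructions:
    role: str                                             # Who: persona
    why: str                                              # Objective: motivation, business value
    what: str                                             # Objective: outcomes, success criteria
    steps: list[str] = field(default_factory=list)        # Requirements: steps
    conventions: list[str] = field(default_factory=list)  # Requirements: conventions
    constraints: list[str] = field(default_factory=list)  # Requirements: constraints
    response_format: str = "plain text"

    def render(self) -> str:
        """Render the instructions as an XML-tagged system prompt."""
        def section(tag: str, body: str) -> str:
            return f"<{tag}>\n{body}\n</{tag}>"

        def bullets(items: list[str]) -> str:
            return "\n".join(f"- {item}" for item in items) or "- none"

        return "\n".join([
            section("role", self.role),
            section("objective", f"Why: {self.why}\nWhat: {self.what}"),
            section("steps", "\n".join(f"{n}. {s}" for n, s in enumerate(self.steps, 1))),
            section("conventions", bullets(self.conventions)),
            section("constraints", bullets(self.constraints)),
            section("response_format", self.response_format),
        ])

prompt = Instructions(
    role="You are a senior product manager.",
    why="Activation dropped last quarter, and onboarding is our biggest lever.",
    what="Three experiment ideas, each with a hypothesis and a success metric.",
    steps=["Review the funnel data", "Identify the largest drop-off", "Propose experiments"],
    conventions=["Concise, neutral tone"],
    constraints=["Each experiment must run in under two weeks"],
    response_format="JSON array of objects with keys: idea, hypothesis, metric",
).render()
print(prompt)
```

Keeping "why" and "what" as separate fields makes it harder to forget the strategic motivation when the prompt is edited later.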

Note that I like providing objectives that explain not just what we want to achieve but also why. This clarifies the strategic context and helps agents make better decisions. I’ve been doing this since publishing my Top 9 High-ROI ChatGPT Use Cases for Product Managers.

I recently found a scientific paper, arXiv:2401.04729, showing that supplying strategic context beyond raw task specifications improves AI autonomy.

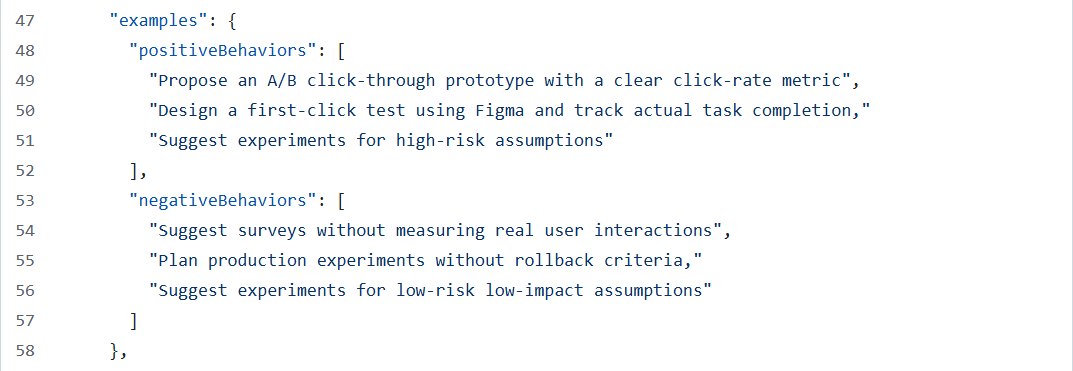

2.1.2 Examples

Consider including positive and negative examples. In particular, negative examples might help you address issues identified during error analysis:

Behavior Examples:

Positive

Negative

Response Examples:

Positive

Negative

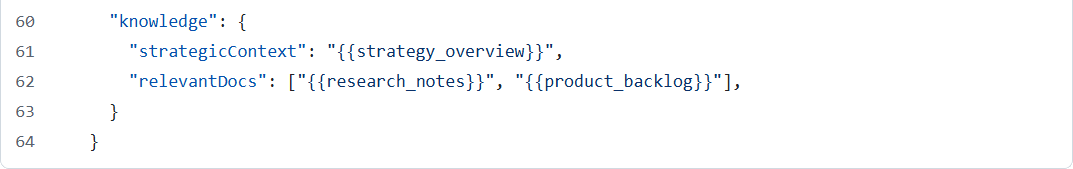
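One way to inject positive and negative examples is to render them into separately tagged sections so the model can tell them apart. The `render_examples` helper, tag names, and example texts are hypothetical.

```python
# Hypothetical examples; negative ones often come from error analysis.
examples = {
    "behavior": {
        "positive": ["Ask a clarifying question when the target metric is missing."],
        "negative": ["Invent a baseline conversion rate that the user never provided."],
    },
    "response": {
        "positive": ['{"idea": "Shorter signup form", "metric": "signup completion rate"}'],
        "negative": ["Here are some ideas you might like..."],
    },
}

def render_examples(examples: dict) -> str:
    """Render each kind/polarity group as its own tagged block, labeled DO or DON'T."""
    parts = []
    for kind, groups in examples.items():
        for polarity, items in groups.items():
            label = "DO" if polarity == "positive" else "DON'T"
            tag = f"{kind}_{polarity}_examples"
            body = "\n".join(f"{label}: {item}" for item in items)
            parts.append(f"<{tag}>\n{body}\n</{tag}>")
    return "\n".join(parts)

out = render_examples(examples)
print(out)
```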

2.1.3 Knowledge

Including external context (e.g., about the market or the system the agent is part of) increases the agent’s awareness and often helps it make better decisions:

External Context

Domain (strategy, business model, market facts)

System (overall goals, other agents/services)

Task Context

Workflow (process steps, place in the process, hand‑offs)

Documents (specs, procedures, tickets, logs)

Structured Data (variables, tables, arrays, JSON/XML objects)
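A minimal sketch of assembling these knowledge types into clearly separated sections, assuming XML-style tags; `build_knowledge` and all sample values are hypothetical.

```python
import json

def build_knowledge(domain: str, system: str, workflow: str,
                    documents: list[str], data: dict) -> str:
    """Assemble external context and task context into tagged sections."""
    docs = "\n".join(f'<document index="{i}">\n{d}\n</document>'
                     for i, d in enumerate(documents, 1))
    return (
        "<external_context>\n"
        f"<domain>{domain}</domain>\n"
        f"<system>{system}</system>\n"
        "</external_context>\n"
        "<task_context>\n"
        f"<workflow>{workflow}</workflow>\n"
        f"<documents>\n{docs}\n</documents>\n"
        f"<structured_data>{json.dumps(data)}</structured_data>\n"
        "</task_context>"
    )

knowledge = build_knowledge(
    domain="B2B SaaS; self-serve plans drive most new revenue.",
    system="This agent drafts experiments; a separate analytics agent computes results.",
    workflow="Step 2 of 4: ideation. Hand off to the analytics agent when done.",
    documents=["Onboarding spec v3: users must verify email before creating a project."],
    data={"experiment_id": "exp-42", "variants": 2},
)
print(knowledge)
```

Separating external from task context lets you reuse the external block across tasks and swap only the task-specific part.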

2.1.4 Memory

The key distinction is between short-term and long-term memory:

Short-term memory (often lives within a user session):

Previous messages, chat history

State (e.g., reasoning steps, progress)

Long-term memory (stored in a database or file system):

Semantic (facts, preferences, user/company knowledge)

Episodic (experiences, past interactions)

Procedural (instructions captured from previous interactions)
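To make the short-term vs. long-term split concrete, here is a sketch using only the Python standard library: a bounded, session-scoped message buffer and a SQLite-backed store keyed by user and memory type. The class names, table schema, and sample memories are hypothetical; a real system would typically add retrieval (e.g., search over stored memories) on top.

```python
import sqlite3
from collections import deque

class ShortTermMemory:
    """Session-scoped memory: recent messages plus working state, discarded after the session."""

    def __init__(self, max_messages: int = 20) -> None:
        self.messages = deque(maxlen=max_messages)  # oldest messages drop off first
        self.state: dict = {}                       # e.g., reasoning steps, progress

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

class LongTermMemory:
    """Persistent memory in SQLite (in-memory here), split by memory type."""
    KINDS = ("semantic", "episodic", "procedural")

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS memories (user_id TEXT, kind TEXT, content TEXT)")

    def save(self, user_id: str, kind: str, content: str) -> None:
        if kind not in self.KINDS:
            raise ValueError(f"unknown memory kind: {kind}")
        self.db.execute("INSERT INTO memories VALUES (?, ?, ?)", (user_id, kind, content))
        self.db.commit()

    def recall(self, user_id: str, kind: str) -> list[str]:
        rows = self.db.execute(
            "SELECT content FROM memories WHERE user_id = ? AND kind = ? ORDER BY rowid",
            (user_id, kind))
        return [content for (content,) in rows]

session = ShortTermMemory(max_messages=2)
session.add("user", "Hi")
session.add("assistant", "Hello!")
session.add("user", "Suggest an experiment")  # evicts "Hi"
session.state["step"] = "ideation"

ltm = LongTermMemory()
ltm.save("anna", "semantic", "Prefers answers as bullet lists")
ltm.save("anna", "episodic", "Last pricing test was inconclusive")
print(ltm.recall("anna", "semantic"))
```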

Memory is not part of the prompt. Instead, it can be provided in several ways:

Automatically attached by the orchestration layer to the list of messages. You can only see the result of those actions.

Accessed by the agent as a tool.
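The two delivery modes differ in who decides when memory enters the context. A minimal sketch, assuming a hypothetical in-process `MEMORIES` store: `attach_memories` plays the orchestration layer, while `search_memory` is a function you would expose to the agent as a tool.

```python
# Hypothetical in-process store standing in for a real memory backend.
MEMORIES = {"anna": ["Prefers answers as bullet lists", "Works on the onboarding team"]}

def attach_memories(user: str, messages: list[dict]) -> list[dict]:
    """Mode 1: the orchestration layer injects memories before every LLM call."""
    notes = "\n".join(f"- {m}" for m in MEMORIES.get(user, []))
    if not notes:
        return list(messages)
    memory_msg = {"role": "system", "content": f"Known facts about the user:\n{notes}"}
    return [memory_msg] + messages

def search_memory(user: str, query: str) -> list[str]:
    """Mode 2: exposed to the agent as a tool; the agent decides when to call it."""
    return [m for m in MEMORIES.get(user, []) if query.lower() in m.lower()]

msgs = attach_memories("anna", [{"role": "user", "content": "Draft a PRD outline"}])
print(msgs[0]["content"])
print(search_memory("anna", "bullet"))
```

Automatic injection is predictable but always costs tokens; tool access saves tokens but relies on the agent remembering to look.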

For example:

When using the OpenAI Assistants API, the conversation history is attached automatically. This memory is limited to a specific conversation (thread) and bounded by the model’s context window. I previously demonstrated this in Base44: A Brutally Simple Alternative to Lovable.

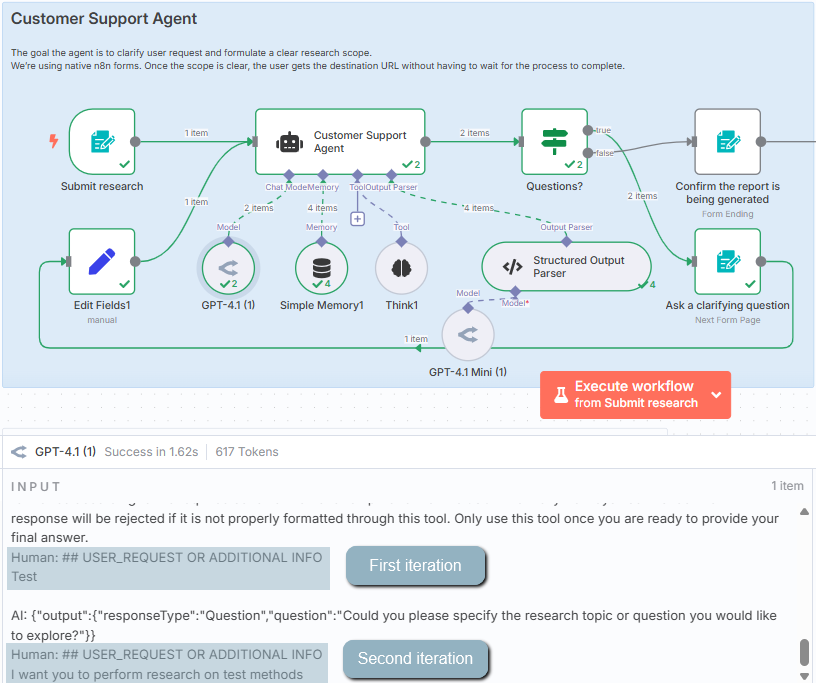

When using n8n, memory (e.g., the Simple Memory node) is also injected automatically.

Other types of memory, like scratchpads or saved user preferences (as seen in ChatGPT), are in fact special tools. The information they store is attached automatically to the messages list so that the LLM can see it.

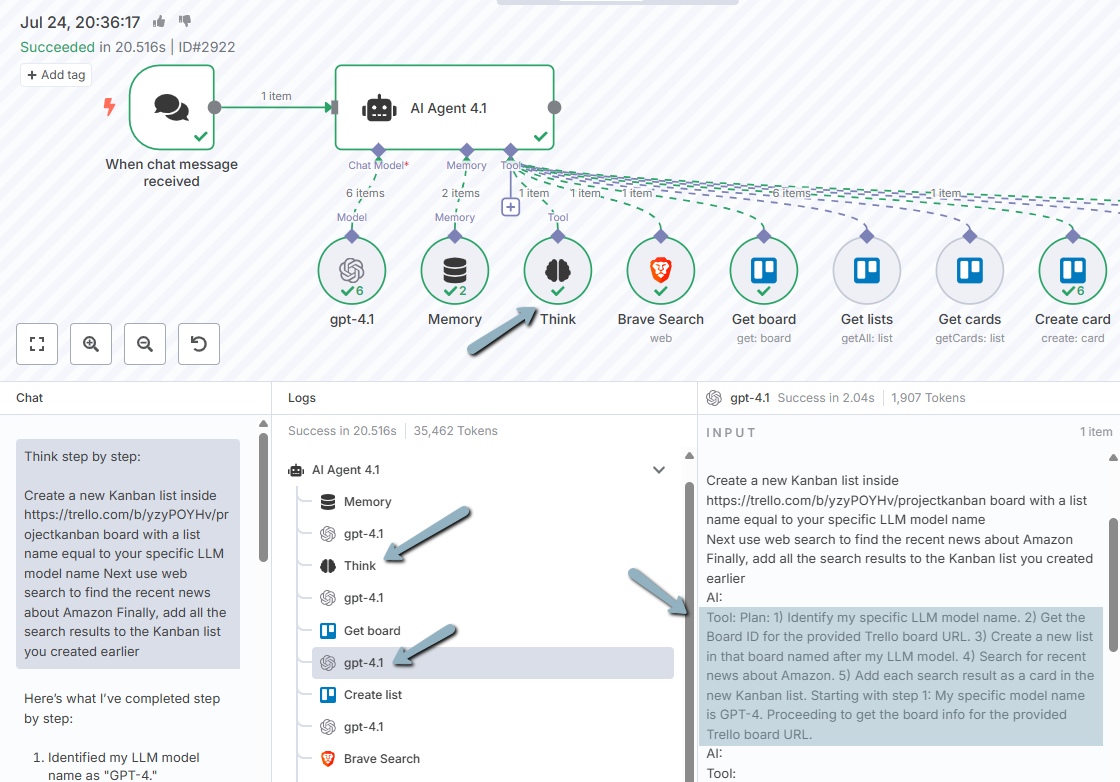

A good example is the n8n Think Tool that enables structured thinking. The agent uses that tool to save information. The saved memories are then attached automatically to future LLM calls:
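A scratchpad can be modeled as a tool whose saved output the orchestrator re-attaches on later calls. This is a hypothetical sketch of that pattern, not how n8n implements its Think Tool; `Scratchpad` and `build_messages` are illustrative names.

```python
class Scratchpad:
    """Hypothetical scratchpad tool: the agent saves notes, the orchestrator re-attaches them."""

    def __init__(self) -> None:
        self.notes: list[str] = []

    def save_note(self, note: str) -> str:
        # Runs when the LLM requests this tool; the return value becomes the tool result.
        self.notes.append(note)
        return "saved"

    def as_context(self) -> dict:
        # Rendered into a system message for every subsequent LLM call.
        body = "\n".join(f"{i}. {n}" for i, n in enumerate(self.notes, 1))
        return {"role": "system", "content": f"Your scratchpad notes so far:\n{body}"}

def build_messages(pad: Scratchpad, history: list[dict]) -> list[dict]:
    """Orchestrator side: prepend scratchpad notes (if any) to the conversation."""
    return ([pad.as_context()] if pad.notes else []) + history

pad = Scratchpad()
pad.save_note("Check the sample size before proposing a test")
messages = build_messages(pad, [{"role": "user", "content": "Plan the next experiment"}])
print(messages[0]["content"])
```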

2.1.5 Tools

Tools are described in a special “functions” block in the LLM context window. For every tool, we specify:

Name

Description (what it does, how to use it, return value)

Parameters

Type

Description (often with examples)

Whether it is required

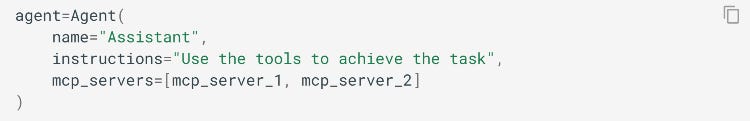
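For illustration, here is a hypothetical tool definition written as a Python dict. Its layout follows the JSON Schema style of OpenAI’s Chat Completions `tools` parameter (`name`, `description`, and `parameters` with per-parameter `type`, `description`, and a `required` list); the tool itself and its fields are made up.

```python
import json

# Hypothetical tool; layout follows the JSON Schema style of the Chat Completions `tools` parameter.
get_experiment_results = {
    "type": "function",
    "function": {
        "name": "get_experiment_results",
        # What it does, how to use it, and what it returns.
        "description": "Returns conversion metrics (control vs. variant) for a finished A/B test. "
                       "Call it before recommending whether to ship a variant.",
        "parameters": {
            "type": "object",
            "properties": {
                "experiment_id": {
                    "type": "string",
                    "description": "Experiment identifier, e.g. 'exp-42'.",
                },
                "metric": {
                    "type": "string",
                    "enum": ["conversion_rate", "retention_d7"],
                    "description": "Metric to report. Defaults to conversion_rate.",
                },
            },
            "required": ["experiment_id"],
        },
    },
}

print(json.dumps(get_experiment_results, indent=2))
```

The descriptions matter as much as the types: they are the only documentation the model sees when deciding whether and how to call the tool.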

Some APIs abstract functions as “tools.” For example, when using the OpenAI Agents API, you work with tools and MCP servers. But at the end of the day, the LLM sees them as functions:

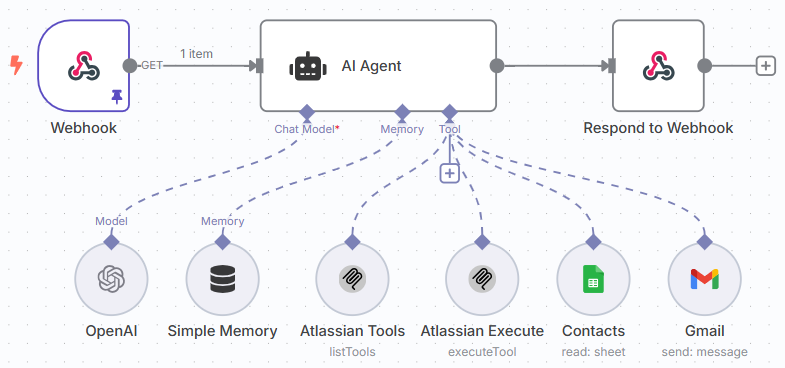

When using n8n, you don’t have to write any code. You just visually plug in an MCP or a tool node.

2.1.6 Tool results

An LLM can’t call functions directly. It always responds with text.

To call a function, an LLM uses a special format interpreted by the system. It’s like saying, “Please call this tool with these parameters:”

Next, the orchestration layer executes the call and responds by attaching a special message with the tool result to the messages list.
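The request, execute, attach loop can be simulated without a real model. In this sketch, `fake_llm` is a stand-in that signals a tool call by returning JSON text in a made-up format; real APIs such as OpenAI’s return structured tool-call objects, but the orchestration loop has the same shape. The tool and its numbers are dummy data.

```python
import json

def fake_llm(messages: list[dict]) -> str:
    """Stand-in for a real model (assumption): it can only return text."""
    if messages[-1]["role"] == "tool":
        result = json.loads(messages[-1]["content"])
        return f"The variant converted at {result['variant']:.0%}."
    # A tool call is just text in an agreed format that the orchestrator parses.
    return json.dumps({"tool_call": {"name": "get_experiment_results",
                                     "arguments": {"experiment_id": "exp-42"}}})

def get_experiment_results(experiment_id: str) -> dict:
    return {"experiment_id": experiment_id, "control": 0.10, "variant": 0.12}  # dummy data

TOOLS = {"get_experiment_results": get_experiment_results}

def run_agent(user_message: str, max_turns: int = 5) -> tuple[str, list[dict]]:
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_turns):
        reply = fake_llm(messages)
        try:
            call = json.loads(reply)["tool_call"]
        except (json.JSONDecodeError, KeyError, TypeError):
            return reply, messages  # plain text means a final answer
        messages.append({"role": "assistant", "content": reply})
        # The orchestration layer, not the LLM, actually runs the function...
        result = TOOLS[call["name"]](**call["arguments"])
        # ...and attaches the result as a special message for the next LLM call.
        messages.append({"role": "tool", "name": call["name"], "content": json.dumps(result)})
    raise RuntimeError("too many tool calls")

answer, history = run_agent("How did exp-42 do?")
print(answer)
```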

3. Context Engineering Techniques

3.1 What is RAG and Where It Fits In

First, let’s clear up a common misconception.

RAG (Retrieval-Augmented Generation) is often labeled a context engineering technique, but that’s only partly true. RAG is a three-step pipeline:

Information Retrieval: Pulling data from external sources (e.g., vector DBs, APIs)

Context Assembly: Structuring and filtering the retrieved data into a prompt

Generation: Using an LLM (or agent) to generate the output

Context engineering focuses on how we select and prepare the context (steps 1-2). It stops before generation.

So when papers or posts say “RAG is context engineering,” they blur the line. RAG includes context engineering but also goes a step further.
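The boundary between context engineering and generation is easy to see in a toy pipeline. This sketch swaps the vector DB for simple keyword overlap to stay dependency-free; `DOCS`, the scoring, and the prompt template are hypothetical. Steps 1 and 2 build the context; step 3 only hands it to a model, here a stub lambda.

```python
import re

DOCS = [  # hypothetical knowledge base
    "Onboarding experiment exp-42 increased activation by 2 points.",
    "Pricing page redesign is scheduled for Q3.",
    "Activation is defined as creating a first project within 7 days.",
]

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9-]+", text.lower()))

# Step 1: Information retrieval (keyword overlap here; often a vector DB in practice).
def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d)]  # drop zero-overlap docs

# Step 2: Context assembly (structure and filter the retrieved data into a prompt).
def assemble(query: str, chunks: list[str]) -> str:
    sources = "\n".join(f"[{i}] {c}" for i, c in enumerate(chunks, 1))
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {query}"

# Step 3: Generation. Context engineering stops before this step.
def generate(prompt: str, llm) -> str:
    return llm(prompt)

question = "How is activation defined?"
chunks = retrieve(question, DOCS)
prompt = assemble(question, chunks)
print(prompt)
print(generate(prompt, llm=lambda p: "stub answer"))
```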

3.2 Information Retrieval Techniques
