OpenAI’s Product Leader Shares 3-Layer Distribution Framework To Win Mind & Market Share in the AI World

Features get cloned. Models commoditize. But distribution compounds. This is your definitive guide to AI distribution: from GTM wedges to PLG loops to moat flywheels.

Hey, Paweł here. Welcome to the #1 actionable AI PM newsletter. Every week I share hands-on advice and step-by-step guides for AI PMs.

Here’s what you might have missed recently:

5 Phases To Build, Deploy, And Scale Your AI Product Strategy From Scratch

AI Agent Architectures: The Ultimate Guide With n8n Examples


The Forgotten Battlefield: Why Distribution Is the Only Moat Left

Every product wave has its myth.

In the early days of SaaS, the myth was that “features win markets.” Build a product with enough capability, and adoption would follow.

For mobile, the myth was that “design wins,” beautiful apps rise above the noise.

In the AI era, the myth is already clear: many believe that “models win.”

Whoever has the best model will win the market.

That belief is not just wrong. It’s dangerous.

Because, as we’ve learned, models commoditize. Every 90 days, the next release from OpenAI, Anthropic, or Google wipes out the advantage of the one before it. The cost curve shifts, capabilities improve, and suddenly the moat you thought you had disappears overnight.

In AI, your features will be cloned (as I showed by cloning popular products in my Accredia proof of concept), your models will be overtaken, and your “unique capability” will be commoditized faster than in any other wave of technology.

We spent several hours discussing this with our guest.

The only thing that endures, the only battlefield that can’t be taken away by an API update, is distribution.

But here’s where most PMs and product leaders trip up: they treat distribution as a marketing problem. Something you figure out after the product is built.

“Let’s launch on Product Hunt.”

“Let’s run some paid ads.”

“Let’s sign a few influencer deals.”

That mindset is fatal in AI. Distribution is not something you tack on. Distribution is the product now.

It is the set of design choices, wedges, loops, and moats that determine not just how users show up, but whether every new user compounds value or erodes your economics.

Get it wrong, and you risk burning money and becoming irrelevant.

Think about Perplexity.

On the surface, it’s “just another LLM-powered search.” But the brilliance wasn’t only in their retrieval-augmented generation. It was in how they positioned distribution: a wedge into the information workflow through transparent citations. That choice made it shareable, trustworthy, and viral.

Their distribution engine wasn’t ads; it was users themselves, citing Perplexity answers as sources in Slack threads, blog posts, and research decks. Distribution was baked into the product.

Or take Midjourney.

They could have launched as a standalone site like every other AI image generator. Instead, they built inside Discord.

That wasn’t a UX accident. It was a distribution wedge: every image created was public by default, every prompt was social, and every user became a node of viral growth.

Or consider Figma AI.

They didn’t hold a flashy AI launch day. They quietly tucked AI into the exact moments where designers already struggled: mockups, auto-layout, copy tweaks. The distribution wasn’t about reaching new users; it was about embedding deeper into the workflows of their existing user base.

That subtle distribution choice meant their AI didn’t need a campaign to spread; it was instantly useful inside a workflow millions already lived in.

Remember: distribution is the real battlefield. Not who has the flashiest demo. Not who fine-tuned the model best.

The winner is the one who designs distribution so well it compounds faster than commoditization can catch up.

We’ll unpack it all today with our guest, Miqdad Jaffer, Product Lead at OpenAI. This might be one of the best, if not the best, guest posts we’ve had in this newsletter.

Side note: If you want to build a defensible strategy, join the 6-week AI Product Strategy Cohort led by Miqdad Jaffer, Product Lead at OpenAI.

After 6 weeks, you won’t just master AI product strategy. You’ll walk away with a battle-tested blueprint for your own product: how to architect it, price it, package it, align your tech stack so it scales without collapsing under costs, and more.

For the first 100 students (only 25 spots left), you’ll also get a personal written review of your AI strategy (a $10K value) from Miqdad, and I’ve secured a $550 discount.

70+ leaders from UberAI, Rippling, and other top companies have already joined.

Go here:

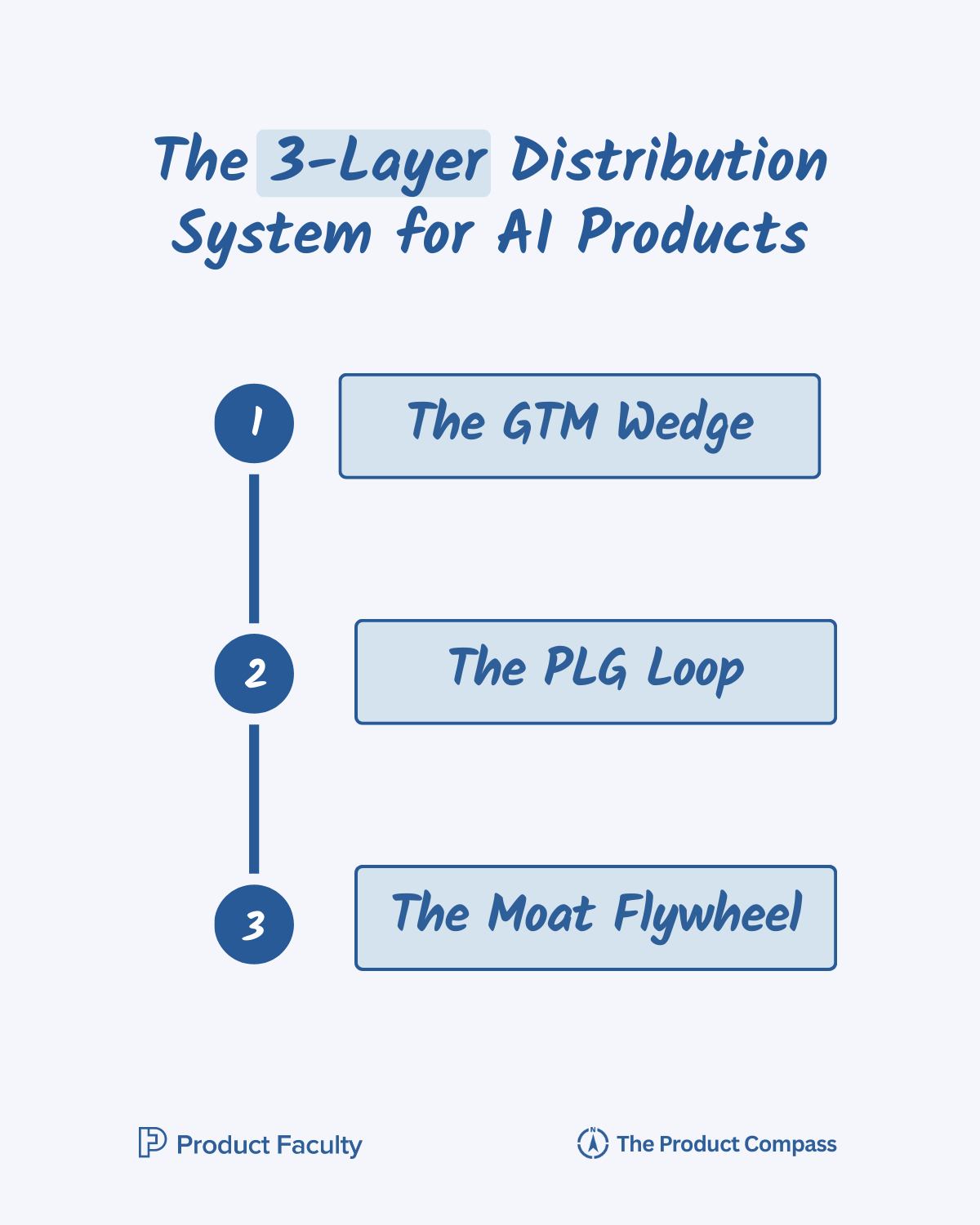

The 3-Layer Distribution System for AI Products

Distribution is not a single act. It’s a system with three layers:

Layer 1: The GTM Wedge: How you enter. The precise vector that gets you into the workflow or conversation without being crushed by giants or drowned in noise.

Layer 2: The PLG Loop: How you compound. The viral, collaborative, or data-driven feedback loops that ensure every new user doesn’t just show up, but makes the product stronger, cheaper, or more valuable for the next.

Layer 3: The Moat Flywheel: How you defend. The structural lock-ins (data, workflow, or trust) that ensure competitors can’t simply clone your wedge and ride your loops.

Most PMs get stuck on a single layer. Some obsess over the GTM wedge (“we just need a killer launch”). Others fixate on the PLG loop (“let’s engineer a viral hook”). A few jump straight to the moat (“we’ll build a data flywheel eventually”).

The truth: you need all three.

Without the wedge, no one notices you.

Without the loop, you bleed cash with every new user.

Without the moat, your users churn the moment a cheaper clone shows up.

The companies that define decades are the ones that deliberately design all three layers.

Next, we cover:

I. Layer 1: Why the GTM Wedge Matters More in AI Than in SaaS

II. Layer 2: The 7 PLG Loops for AI Products

III. Layer 3: The Three Defensible Moats in AI Distribution

IV. 6 Laws of AI Distribution Based on 6 Case Studies

V. The 7-Step Distribution Strategy Playbook for AI PMs

VI. 9 Advanced Distribution Tactics (What Billion-Dollar Founders Do Differently)

VII. Conclusion

Let’s dive in.

I. Layer 1: Why the GTM Wedge Matters More in AI Than in SaaS

In traditional SaaS, you could afford to launch broad. If you built a project management tool, you could pitch “better collaboration” and still carve out space, because marginal costs trended toward zero, and even lightweight differentiation like integrations or UI design gave you breathing room. AI doesn’t give you that luxury.

Here’s why:

Costs punish vague wedges. Every time a user queries your AI, you pay for it. If your wedge isn’t tightly defined, you’ll attract casual, low-value users who burn GPU minutes without compounding into retention, data, or referrals. You’ll literally bleed money by the click.

Commoditization collapses broad positioning. “AI writing” sounds exciting until OpenAI drops a better base model for free. Suddenly, your broad wedge dissolves overnight because your story wasn’t anchored in a defensible doorway.

Speed compresses entry windows. In SaaS, you had years to refine your wedge before a competitor caught up. In AI, that window is measured in months, sometimes weeks. The wedge must hit hard and immediately anchor you into a workflow or community before clones flood the market.

In other words: your wedge is not just your entry, it’s your survival mechanism.

Advanced Characteristics of a Strong Wedge

When you evaluate wedges for AI products, you need to look for five deeper traits beyond the basics:

Asymmetry of Pain vs Cost. A great wedge solves a pain point that feels disproportionately big to the user, but is disproportionately cheap for you to deliver. Meeting notes look like a small wedge, but the pain (hours wasted writing summaries) is massive, while the solution (record → transcribe → summarize) can be run cheaply with smaller models. That asymmetry is gold.

Proof on First Use. You don’t get a 30-day trial in AI. Users need to see value in 30 seconds. A wedge must deliver a “wow” immediately, not in the fourth week of onboarding. That’s why “AI notes” works but “AI project productivity” doesn’t: the latter is too abstract to validate in a single shot.

Obvious Storytelling Handle. A wedge needs a story so crisp it spreads itself. “AI legal contract reviewer” is sticky. “AI enterprise workflow optimizer” is vague. The sharper the language, the easier the wedge travels through word-of-mouth, Slack threads, and LinkedIn posts.

Expansion Optionality. The wedge must be narrow to land, but broad enough to expand later. Grammarly started with spelling corrections (narrow, painful, obvious) but expanded into tone adjustments, rewriting, and now generative writing. The wedge was tight, but the expansion surface was huge.

Resistance to Immediate Displacement. Ask yourself: if OpenAI launched this as a free button tomorrow, would we still have a reason to exist? If yes, you’ve got a wedge. If not, you’re just a demo.
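One way to hold a wedge to the “Proof on First Use” bar is to measure it. Below is a minimal Python sketch, assuming your analytics records a signup event and a first-output event per user, with timestamps in seconds. The event names and the `share_with_fast_first_value` helper are hypothetical, and time to first output is only a proxy for time to value.

```python
from collections.abc import Iterable


def share_with_fast_first_value(
    events: Iterable[tuple[str, str, float]],
    threshold_s: float = 30.0,
) -> float:
    """Share of signed-up users whose first AI output arrived within threshold_s seconds.

    Assumes hypothetical event names "signup" and "first_output"; map them to
    whatever your own analytics pipeline actually records.
    """
    signup_at: dict[str, float] = {}
    first_output_at: dict[str, float] = {}
    for user_id, event_name, ts in events:
        # Keep the earliest timestamp per user for each event type.
        if event_name == "signup":
            signup_at[user_id] = min(ts, signup_at.get(user_id, ts))
        elif event_name == "first_output":
            first_output_at[user_id] = min(ts, first_output_at.get(user_id, ts))

    if not signup_at:
        return 0.0

    # Users who saw an output no later than threshold_s after signing up.
    fast_users = sum(
        1
        for user_id, start in signup_at.items()
        if user_id in first_output_at
        and first_output_at[user_id] - start <= threshold_s
    )
    return fast_users / len(signup_at)


events = [
    ("a", "signup", 0.0), ("a", "first_output", 12.0),  # value in 12 s
    ("b", "signup", 5.0), ("b", "first_output", 95.0),  # value in 90 s
    ("c", "signup", 10.0),                              # never saw an output
]
print(f"{share_with_fast_first_value(events):.0%}")  # 33%
```

Users who never reach an output count against the wedge on purpose: in AI, a user who bounces before the first “wow” is the failure this trait warns about.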

Wedge Examples

To make this more concrete, let me highlight wedges that don’t usually get discussed, but perfectly illustrate the principle:

Perplexity’s “Citations by Default”. Their wedge wasn’t “better search.” It was trustworthy answers with citations. That tiny design decision created a defensible wedge into researchers, analysts, and power users who needed credibility. Google or OpenAI could have done it, but they didn’t. That was the doorway.

Copy.ai’s “Marketing-Specific Templates”. Instead of going broad like Jasper, Copy.ai leaned into one wedge: marketers who wanted templates for blog posts, ads, and emails. Not just “AI writing” but “AI writing for marketing use cases.” That wedge created an entry into teams where ROI was measurable.

Runway’s “Editor-First Tools”. Runway didn’t wedge with “AI video.” They targeted professional editors and filmmakers with tools like background removal and timeline automation. Those problems were painful, frequent, and measurable. They weren’t trying to win the entire creative market at launch, just the pros who had real budgets and clear workflows.

None of these wedges was about showing “AI magic.” Each was about picking a very precise doorway into a very specific user base and anchoring itself before competitors could react.

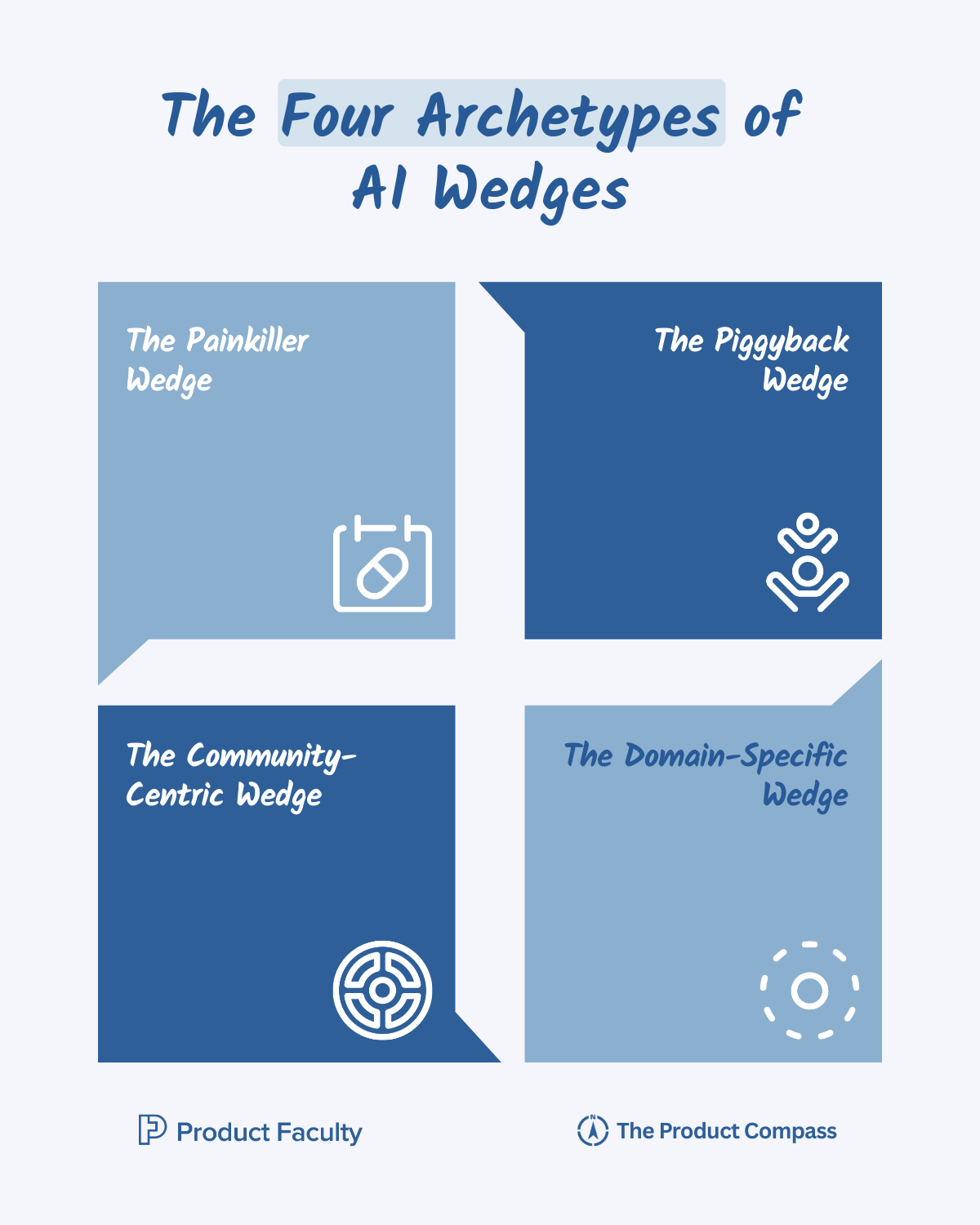

The Four Archetypes of AI Wedges

Over years of watching AI products launch, scale, and collapse, I’ve noticed a recurring pattern: the ones that survive almost always enter through one of four archetypal wedges. These aren’t “categories” in the abstract sense; they’re practical distribution doorways that make or break early adoption.

If your product didn’t take off, nine times out of ten it’s because you picked a wedge that was too broad, too expensive, or too easily cloned. Get the wedge wrong, and no amount of downstream GTM or PLG magic can save you. Get it right, and you buy yourself the most precious commodity in AI: time to expand before the giants notice.

Archetype 1: The Painkiller Wedge

The most obvious, and often the most effective, is the painkiller wedge. This is when you attack one repetitive, universally hated task and remove it so completely that users feel immediate, almost physical relief.

Granola is the classic example: it didn’t brand itself as “AI productivity,” which would have been too vague and too crowded. Instead, it picked one specific job every knowledge worker loathes: writing meeting notes. The pain was high-frequency (every day), high-friction (always distracting from the real meeting), and high-visibility (everyone knows when notes are missing or late). By solving this with near-instant accuracy, Granola didn’t need a big marketing budget; its users evangelized it because the relief was obvious on first use.

The power of a painkiller wedge is that you rarely need to educate users. They already understand the pain and immediately recognize the value. But the danger is commoditization: if the wedge is too shallow, competitors can copy it overnight. That means you must either move quickly to stack moats (data, distribution, trust) or design the wedge so that it naturally expands into adjacent workflows before clones catch up.

Archetype 2: The Workflow Piggyback Wedge

The second archetype is the workflow piggyback wedge. Instead of convincing users to adopt something new, you ride the momentum of tools and habits they already have. This works because users don’t want to “learn AI.” They want to keep doing their job, but faster and easier.

Figma AI nailed this by quietly slipping into the design flow with auto-layouts, copy tweaks, and mockup generation. Designers didn’t have to leave the canvas, didn’t have to open another tab, and didn’t even need to change their mental model. AI was simply there, augmenting familiar steps. Adoption felt frictionless because it piggybacked on muscle memory.

The brilliance of workflow piggybacking is that it feels invisible. The risk, however, is platform dependency. If you’re a plugin or extension, you’re always one API change away from irrelevance or, worse, from the host platform simply building your wedge natively. The way to mitigate this is to use piggybacking as a short-term wedge but expand into standalone surfaces as soon as you prove traction.

Archetype 3: The Domain-Specific Wedge

The third, and in my opinion one of the most underrated, is the domain-specific wedge. This is where you go deep into a vertical where general-purpose AI is unreliable, and you build trust by delivering precision where others fail.

Harvey is the poster child here. Instead of building yet another “AI legal assistant,” they attacked one high-value, high-risk job: contract review in mergers and acquisitions. It’s repetitive, it’s expensive, and it’s riddled with nuance that general LLMs consistently miss. By going deep into that narrow but lucrative vertical, Harvey built credibility with top firms and gained access to proprietary workflows and datasets that strengthened their moat.

The reason this wedge works is simple: in most industries, generic AI is not good enough. It hallucinates, misses context, or fails compliance. Domain-specific wedges win because they encode expertise that general-purpose models cannot replicate. But the trade-off is scale: the narrower the wedge, the harder it is to grow beyond the initial vertical unless you’ve planned your expansion path from day one.

Archetype 4: The Community-Centric Wedge

Finally, there’s the community-centric wedge, which is less about solving an individual pain point than about turning the product into a cultural engine. This wedge works when outputs are inherently visible, remixable, and social, so every new user attracts the next wave of users.

Midjourney exemplifies this. By forcing prompts and outputs into public Discord channels, they transformed individual usage into collective spectacle. Every generated image wasn’t just an output; it was a marketing asset that lived in the community. The network effects compounded: users learned by watching others, experimented with new styles, and competed for recognition. Midjourney didn’t spend millions on ads; the community was both the product and the distribution channel.

The upside of community wedges is explosive virality. The downside is fragility: without strong curation, communities collapse into spam, and without clear incentives, creators drift away. To make this wedge sustainable, you must treat the community as a first-class product surface, not an afterthought.

Why Most Wedges Fail

If wedges are so powerful, why do most AI products still flop?

Because PMs confuse “feature novelty” with “distribution entry.”

Here are the three killers I’ve seen firsthand:

Going Broad Instead of Sharp. “We do AI design” sounds big, but it’s actually weak. Compare that to “We fix auto-layout pain in Figma.” The sharper wedge wins because it cuts deeper and faster.

Chasing Demos, Not Distribution. A demo can go viral on Twitter, but it doesn’t embed in workflows. Jasper chased viral demos (“AI can write anything!”) while Copy.ai anchored itself in marketing templates. Guess who sustained longer.

Ignoring Cost in the Wedge. A wedge that costs $5 per query might impress in a demo, but it’s not sustainable at 10,000 users. The wedge must be cheap enough to validate without bankrupting you. This is why I tell teams: model cost-per-wedge before you launch, not after.
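Modeling cost-per-wedge before launch is simple arithmetic. Below is a minimal Python sketch, assuming you can estimate a blended inference cost per query, monthly queries per active user, a monthly price, and the share of active users who pay. The `WedgeEconomics` class and every number in it are hypothetical, not figures from any real product.

```python
from dataclasses import dataclass


@dataclass
class WedgeEconomics:
    """Monthly unit economics for one wedge. All inputs are your own estimates."""

    cost_per_query: float    # blended inference cost per query, USD
    queries_per_user: float  # queries an active user runs per month
    price_per_user: float    # monthly price for a paying user, USD
    paid_share: float        # fraction of active users who pay, 0..1

    def cost_per_user(self) -> float:
        # Every active user burns inference, whether or not they pay.
        return self.cost_per_query * self.queries_per_user

    def revenue_per_user(self) -> float:
        # Revenue averaged over all active users, free ones included.
        return self.price_per_user * self.paid_share

    def margin_per_user(self) -> float:
        return self.revenue_per_user() - self.cost_per_user()

    def monthly_margin(self, active_users: int) -> float:
        return self.margin_per_user() * active_users


# Hypothetical numbers, for illustration only.
demo_wedge = WedgeEconomics(cost_per_query=5.0, queries_per_user=20,
                            price_per_user=30.0, paid_share=0.1)
lean_wedge = WedgeEconomics(cost_per_query=0.02, queries_per_user=100,
                            price_per_user=20.0, paid_share=0.2)

for name, wedge in [("demo", demo_wedge), ("lean", lean_wedge)]:
    print(name, f"{wedge.monthly_margin(10_000):,.0f}")
# prints: demo -970,000 and lean 20,000
```

The shape matters more than the numbers: if cost per active user exceeds revenue per active user, the wedge loses more money the faster it grows.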

The Wedge Finder Canvas

Workflow Mapping → What’s the full workflow the user runs today? Write every step.

Friction Heatmap → Where are the high-frequency, high-pain moments? Circle them.

Obviousness Test → Which of those could deliver value in <30 seconds? Mark those.

Defensibility Stress-Test → Which circled points survive the “OpenAI test”: if OpenAI built your exact feature and made it free, would you still be able to win? Keep only those.

Narrative Handle → Write the 3–5 word story you’d put on a slide. If it takes a sentence, it’s too vague.

Do this exercise, and you’ll walk out with one wedge that is actually distribution-worthy.
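If you run the canvas across several workflows, it can help to make the filters explicit. Below is a minimal Python sketch, assuming you score each step from 1 to 5 for frequency and pain yourself. The `WorkflowStep` fields, the `min_heat` threshold of 16, and the scoring scale are illustrative assumptions, not part of the canvas as written; the judgment calls (including the OpenAI test) stay with you.

```python
from dataclasses import dataclass


@dataclass
class WorkflowStep:
    """One step of the user's current workflow (Workflow Mapping). Scores are judgment calls."""

    name: str
    frequency: int              # 1 (rare) to 5 (many times a day)
    pain: int                   # 1 (mild annoyance) to 5 (universally hated)
    seconds_to_value: int       # estimated time until the user sees the AI result
    survives_openai_test: bool  # would you still win if OpenAI shipped it free?
    handle: str                 # the short story you'd put on a slide


def find_wedges(steps: list[WorkflowStep], min_heat: int = 16) -> list[WorkflowStep]:
    candidates = []
    for step in steps:
        heat = step.frequency * step.pain            # Friction Heatmap
        if heat < min_heat:
            continue
        if step.seconds_to_value >= 30:              # Obviousness Test
            continue
        if not step.survives_openai_test:            # Defensibility Stress-Test
            continue
        if not 3 <= len(step.handle.split()) <= 5:   # Narrative Handle: 3 to 5 words
            continue
        candidates.append(step)
    # Hottest surviving steps first.
    return sorted(candidates, key=lambda s: s.frequency * s.pain, reverse=True)


steps = [
    WorkflowStep("Write meeting summary", frequency=5, pain=4, seconds_to_value=20,
                 survives_openai_test=True, handle="Meeting notes write themselves"),
    WorkflowStep("Fix grammar in emails", frequency=5, pain=3, seconds_to_value=5,
                 survives_openai_test=False, handle="Cleaner emails instantly"),
    WorkflowStep("Summarize any document", frequency=4, pain=4, seconds_to_value=15,
                 survives_openai_test=False, handle="Summaries for any document"),
    WorkflowStep("Plan quarterly roadmap", frequency=1, pain=5, seconds_to_value=600,
                 survives_openai_test=True, handle="Roadmaps that plan themselves"),
]
print([s.name for s in find_wedges(steps)])  # ['Write meeting summary']
```

The filters run in the canvas order, so a step is dropped at the first test it fails; that mirrors the “keep only those” instruction rather than averaging scores, which could let a clonable but painful step slip through.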

So far, we’ve covered:

I. Layer 1: Why the GTM Wedge Matters More in AI Than in SaaS

Next, you’ll learn:

II. Layer 2: The 7 PLG Loops for AI Products

III. Layer 3: The Three Defensible Moats in AI Distribution

IV. 6 Laws of AI Distribution Based on 6 Case Studies

V. The 7-Step Distribution Strategy Playbook for AI PMs

VI. 9 Advanced Distribution Tactics

VII. Conclusion: The Distribution Mindset Shift

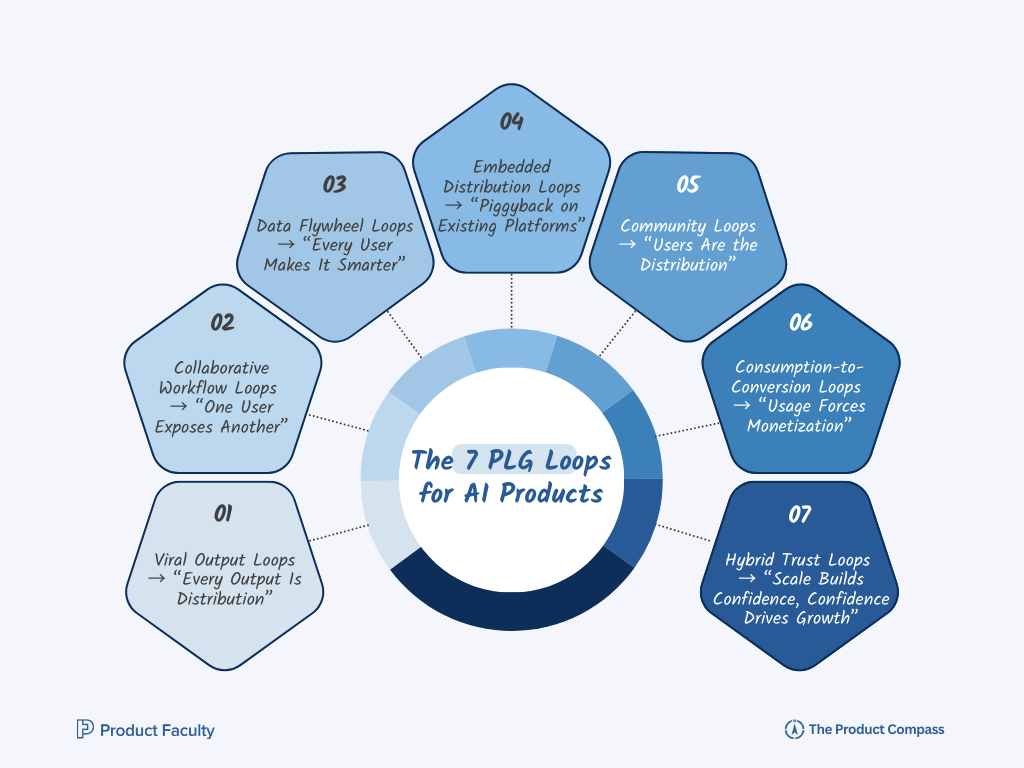

II. Layer 2: The 7 PLG Loops for AI Products

In SaaS, PLG usually meant free trials, referral bonuses, or the classic “invite your team” mechanic. AI changes the game, because every query, every output, and every workflow can generate more distribution if it’s designed as a loop.

Here’s the shift: in AI, PLG isn’t just about virality, it’s about compounding adoption.

A single user generating value should create visibility, data, or incentives that pull the next user in, without marketing spend. Done right, your product doesn’t just retain; it recruits, educates, and sells itself.

Over the last few years, working with AI founders and product leaders, I’ve found seven distinct loops that consistently drive this kind of compounding growth. Each one is different, but all share the same DNA: usage → creates value → attracts new usage → strengthens the moat.
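A common, simplified way to check whether a loop actually compounds is a viral coefficient, often written k: the average number of new users each newly acquired user brings in. The sketch below is that textbook model, not the author’s framework, assuming a constant k and discrete cycles; it covers only the “attracts new usage” step of the DNA above. With k below 1, the user base levels off near seed / (1 - k); only at k of 1 or more does the loop keep growing on its own.

```python
def simulate_loop(seed_users: float, k: float, cycles: int) -> list[float]:
    """Cumulative users after each cycle of a simple referral-style loop.

    Assumes a constant viral coefficient k: each member of the newest cohort
    brings in k new users during the next cycle. Ignores churn, saturation,
    and the data and moat effects a real loop is also meant to create.
    """
    cohort = seed_users  # users acquired in the most recent cycle
    total = seed_users   # cumulative users so far
    history = [total]
    for _ in range(cycles):
        cohort *= k      # last cycle's newcomers recruit the next cohort
        total += cohort
        history.append(total)
    return history


print(round(simulate_loop(1000, 0.5, 10)[-1]))  # 1999, levelling off near 1000 / (1 - 0.5)
print(round(simulate_loop(1000, 1.2, 10)[-1]))  # still compounding after 10 cycles
```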

Let’s break them down with examples and a playbook you can follow:
