The AI Product Pricing Masterclass: OpenAI Product Lead on Why SaaS Pricing Fails in AI (and How to Fix It)

If you think pricing AI products is about tokens, you’re already behind. This deep dive exposes the hidden economics of AI and how PMs can design pricing that survives real-world usage.

Hi, Paweł here. Welcome to the freemium edition of The Product Compass newsletter.

Each week, I share actionable insights and resources for AI PMs. Here’s what you might have missed:

5 Phases To Build, Deploy, And Scale Your AI Product Strategy

A Guide to Context Engineering for PMs (one of the most important posts)

I just released a new premium section and a new archive of articles.

This is a guest article written in collaboration with Miqdad Jaffer, Product Lead at OpenAI. I work with Miqdad as AI Labs Build Leader for the AI PM Certification Cohort.

In traditional SaaS, the system’s behavior is deterministic. You click a button, and the same logic runs every time. Costs also behave nicely: once you amortize infrastructure, the marginal cost of serving one more user trends toward zero.

Pricing models grew up around that reality. Seats, tiers, bundles, “unlimited” plans. The core assumption was simple: more usage is good, because costs flatten as you scale.

AI flips that assumption.

In AI systems, the product does not just execute code. It reasons. And reasoning is not free. It is variable, sometimes unpredictable, and often unbounded unless you explicitly design it to be.

Every interaction triggers a cascade of costs that depend not just on how many users you have, but on how they behave, what they ask, how often they retry, how complex their workflows become, and how much parallel demand they create.

This is why AI pricing is not an extension of SaaS pricing. It is a different discipline altogether.

In this post, we discuss:

Why AI Product Pricing Is Fundamentally Different From SaaS

The Real Cost Structure of AI (The 7 Layers)

The Four AI Pricing Models (and When to Use Each)

🔒 Stability vs Scale: The Strategic Tension

🔒 How to Choose Your AI Pricing Model: A Decision Tree

🔒 AI P&L: A Full Unit Economics Breakdown

🔒 Conclusion

🔒 AI Cost Glossary

Let’s dive in.

1. Why AI Product Pricing Is Fundamentally Different From SaaS

1.1 The death of “zero marginal cost” thinking

The most dangerous SaaS habit AI teams carry forward is the belief that marginal cost eventually disappears. In AI, it never does.

Every meaningful AI interaction has a real cost attached to it: not just tokens, but compute allocation, latency trade-offs, orchestration overhead, retrieval, and often retries or fallback paths.

There is no finish line where you’ve “paid the cost once” and can now scale freely.

Even worse, the marginal cost isn’t stable. It changes as your product evolves, as users get more sophisticated, and as edge cases accumulate.

The same feature that was cheap at launch can become expensive six months later simply because users learned how to push it harder.

In SaaS, growth tends to smooth costs. In AI, growth often amplifies them.

This is the first mental shift pricing must reflect: your AI system remains economically alive forever.

1.2 Costs scale with behavior, not just users

In SaaS, two customers on the same plan usually cost roughly the same to serve. In AI, two customers paying the same amount can have radically different cost profiles.

One user might ask short, well-scoped questions, accept imperfect answers, and move on. Another might run long, iterative workflows, repeatedly refine prompts, trigger multiple retries, and expect high accuracy every time.

From a pricing perspective, these two users look identical. From a P&L perspective, they are opposites.
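To make the contrast concrete, here is a minimal Python sketch with entirely hypothetical numbers: one flat subscription price, one blended serving cost per 1K tokens, and two usage profiles. The profile fields, the prices, and the "retried once" assumption are illustrative, not real product data.

```python
from dataclasses import dataclass

# All numbers below are hypothetical, chosen only to illustrate the spread.
PRICE_PER_MONTH = 20.00     # flat subscription price, USD
COST_PER_1K_TOKENS = 0.01   # blended serving cost, USD per 1K tokens


@dataclass(frozen=True)
class UsageProfile:
    name: str
    requests_per_month: int
    avg_tokens_per_request: int
    retry_rate: float  # fraction of requests that get retried once


def monthly_cost(p: UsageProfile) -> float:
    """Serving cost for one user: retried requests are paid for twice."""
    effective_requests = p.requests_per_month * (1 + p.retry_rate)
    return effective_requests * p.avg_tokens_per_request / 1000 * COST_PER_1K_TOKENS


def monthly_margin(p: UsageProfile) -> float:
    return PRICE_PER_MONTH - monthly_cost(p)


light = UsageProfile("light", requests_per_month=200, avg_tokens_per_request=1_500, retry_rate=0.05)
heavy = UsageProfile("heavy", requests_per_month=3_000, avg_tokens_per_request=8_000, retry_rate=0.40)

for user in (light, heavy):
    print(f"{user.name}: cost ${monthly_cost(user):.2f}, margin ${monthly_margin(user):.2f}")
```

Both users pay the same $20 here, yet under these assumptions the light user costs about $3 to serve and the heavy user about $336.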

This is what makes AI pricing unintuitive. The thing you celebrate (engagement) is often the thing that destroys margins if it isn’t constrained or monetized properly.

Traditional pricing assumes usage correlates with value. AI breaks that assumption.

Usage correlates with cost volatility, not guaranteed value. Some of the most expensive interactions are exploratory, redundant, or compensating for system weaknesses rather than delivering incremental benefit.

That means pricing can no longer be passive. It has to shape behavior.

If your pricing doesn’t influence how users interact with the system, the system will eventually influence your margins instead.

1.3 Variance is the real enemy

Most teams obsess over average cost per request. That’s the wrong metric.

AI systems don’t fail on averages. They fail when real usage creates variance.

Variance shows up everywhere: prompt length, context size, retries, parallel usage spikes, long-tail edge cases that require heavier models, and moments where the system has to work much harder to maintain quality.

SaaS pricing models assume variance is negligible. AI pricing models must assume variance is inevitable.

The uncomfortable implication: pricing must be designed for worst-case behavior, not typical behavior. If your pricing only works when users behave “nicely,” it doesn’t work.
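A quick simulation shows why averages mislead. This sketch assumes per-request costs follow a log-normal distribution (an illustrative choice for a right-skewed workload, not measured data), sets a price at mean cost plus a 30% markup, and counts how many requests still cost more than that price.

```python
import random
import statistics


def simulate_request_costs(n: int, seed: int = 42) -> list[float]:
    """Hypothetical per-request costs in USD with a heavy right tail.

    Log-normal is an assumption used only to illustrate skew: most requests are
    cheap, a few (long context, retries, heavier models) are very expensive.
    """
    rng = random.Random(seed)
    return [rng.lognormvariate(mu=-5.0, sigma=1.0) for _ in range(n)]


costs = simulate_request_costs(10_000)
mean_cost = statistics.fmean(costs)
cuts = statistics.quantiles(costs, n=100)   # 99 cut points: cuts[94] is p95, cuts[98] is p99
p95, p99 = cuts[94], cuts[98]

# A price of "average cost + 30%" looks safe on a dashboard...
price_per_request = mean_cost * 1.3
# ...but a meaningful share of requests still cost more than they earn.
loss_share = sum(c > price_per_request for c in costs) / len(costs)

print(f"mean ${mean_cost:.4f}  p95 ${p95:.4f}  p99 ${p99:.4f}  loss-making share {loss_share:.0%}")
```

With this distribution, roughly one request in five costs more than the "mean plus 30%" price, and the 99th-percentile request costs several times the mean.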

This is why many AI products look profitable in early metrics and then fall apart as usage deepens. Early users are forgiving and exploratory. Later users are demanding and efficient at extracting value, which usually means generating cost.

1.4 AI pricing is a system control mechanism

Here’s a framing most teams miss: pricing in AI is not just a way to charge money. It is one of the strongest control mechanisms you have.

Pricing determines:

how often users invoke reasoning

how much they experiment

whether they batch work or stream it

whether they tolerate latency

whether they retry aggressively

whether they push the system to edge cases

In SaaS, pricing mostly controls access. In AI products, pricing controls behavioral pressure on the system.

If pricing encourages unbounded exploration without cost feedback, users will push the system until it breaks.

If pricing is too restrictive too early, users will never discover value.

Great AI pricing doesn’t just extract revenue. It teaches users how to use the product in a way the system can sustainably support.

1.5 Why “fair” pricing is a trap

A lot of early AI products aim for fairness: flat plans, simple tiers, “unlimited” usage with soft limits. It feels user-friendly, and in the short term it often boosts adoption.

But fairness is not the goal. Survivability is.

AI pricing that feels fair but ignores variance transfers all risk to the company while giving users no incentive to behave efficiently.

Over time, the system absorbs more stress until engineering adds silent limits, quality degrades, or finance forces abrupt pricing changes that anger customers.

The irony: “unfair” pricing that reflects real costs and constraints often builds more trust in the long run.

Users can tolerate explicit limits. What they hate is inconsistency: unpredictable throttling, sudden downgrades, or quiet degradation.

Honest pricing aligned with system reality beats generous pricing that lies.

1.6 The PM role changes here

This is where the AI PM role diverges from traditional product management.

In SaaS, PMs could largely ignore pricing mechanics once tiers were set. In AI, PMs cannot. Pricing decisions influence architecture, and architectural decisions influence pricing viability. You cannot separate the two.

An AI PM must understand:

which user actions are expensive

which costs are fixed vs variable

which behaviors create cascading load

which quality improvements are linear vs exponential in cost

Without this, PMs accidentally design features that are economically incompatible with the pricing model.

The product looks great, usage climbs, and finance quietly panics.

AI pricing failure is rarely one bad decision. It’s a slow accumulation of small misalignments between system behavior, user behavior, and pricing assumptions.

1.7 The core AI pricing mistake, stated plainly

The most common mistake teams make is pricing AI as if cost is something to optimize later.

In AI, pricing is system design, not a go-to-market tweak.

It decides who absorbs variance and which behaviors your system must constrain under real user pressure.

If you don’t design pricing with the same rigor as system architecture, the system will expose that weakness at scale. Not immediately. Not loudly. But inevitably.

SaaS taught us to chase growth first and fix economics later. AI punishes that mindset.

Growth without pricing discipline is not momentum. It’s deferred failure.

Everything builds on this foundation: AI pricing is different because AI systems never stop costing money, never behave predictably, and never forgive lazy assumptions.

If you accept that early, pricing becomes a strategic weapon. If you don’t, it becomes the reason your product dies quietly while “everything looked fine.”

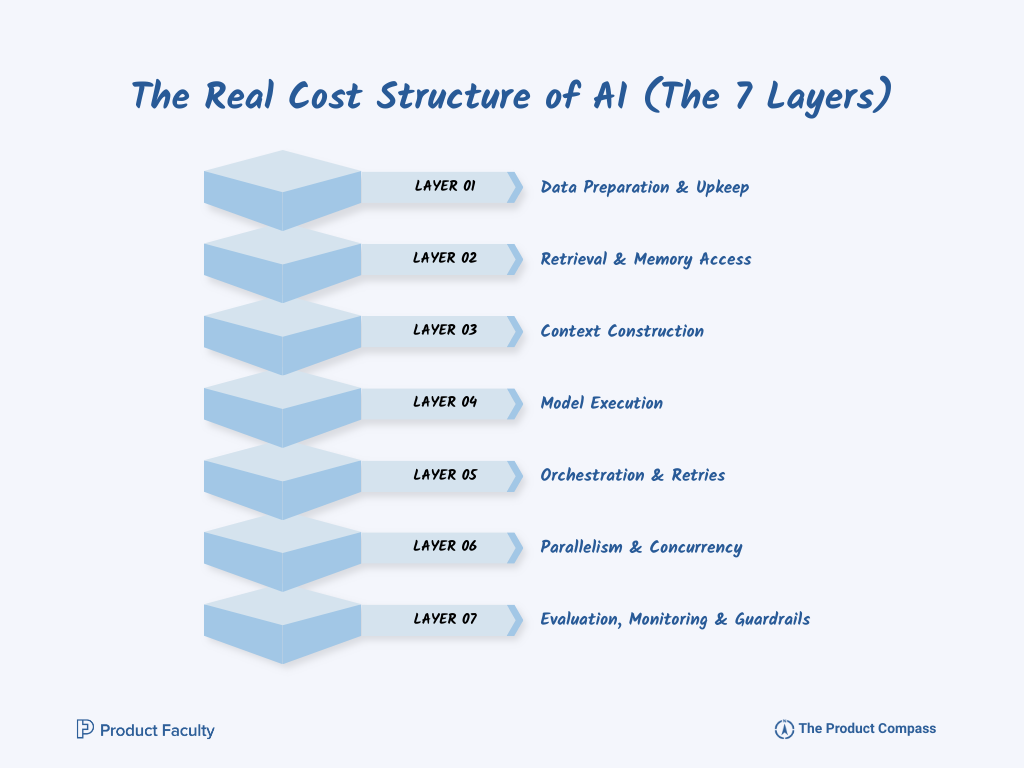

2. The Real Cost Structure of AI (The 7 Layers)

If you ask most teams where their AI costs come from, they’ll say “tokens.”

That answer is understandable, visible, and dangerously incomplete.

Tokens are the easiest cost to see because they show up cleanly on invoices, dashboards, and alerts. But in real AI systems, tokens are rarely what kills you.

What kills you is everything around them: the quiet layers that compound, interact, and magnify each other until your unit economics collapse while your token graphs still look “reasonable.”

To price AI correctly, you have to understand its true cost structure: not as a single line item, but as a layered system where inefficiencies stack.

I’ve found the most accurate way to think about AI cost is as seven layers, each one capable of quietly multiplying the next.

2.1 Layer 1: Data preparation and upkeep

This is where most teams underestimate cost before they even ship.

AI products don’t run on “data” in the abstract. They run on data that has been cleaned, structured, embedded, versioned, and kept up to date.

Every document you ingest eventually needs reprocessing. Every schema you introduce creates maintenance overhead. Every shortcut you take early turns into recurring cost later.

This cost doesn’t care about usage. It cares about scope.

And because it isn’t directly tied to queries, it’s often excluded from pricing discussions, even though it belongs in your COGS model.

If your pricing doesn’t account for the fact that knowledge must be continuously refreshed and reshaped, you are subsidizing every future feature you add.

2.2 Layer 2: Retrieval and memory access

Retrieval is often introduced as a quality improvement, not a cost driver. That’s why it’s frequently mispriced.

Every retrieval operation has a cost: vector searches, ranking, filtering, post-processing, and latency overhead.

But the real cost isn’t the retrieval call itself. It’s what happens when retrieval is sloppy.

Poor retrieval design pulls too much information “just in case.” That extra context flows downstream into the model, inflating context length, increasing inference cost, and slowing responses.

In other words, retrieval mistakes don’t just cost money once. They amplify cost everywhere else.
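Here is a minimal cost model of that amplification, with hypothetical prices and token counts. It assumes the retrieval call itself costs the same regardless of how many chunks come back, so any difference in total cost comes from the extra context sent to the model.

```python
# Hypothetical token counts and prices; substitute your own provider's rates.
INSTRUCTION_TOKENS = 800
QUESTION_TOKENS = 100
TOKENS_PER_CHUNK = 400
OUTPUT_TOKENS = 500
PRICE_IN_PER_1K = 0.0025    # USD per 1K input tokens
PRICE_OUT_PER_1K = 0.01     # USD per 1K output tokens
RETRIEVAL_COST = 0.0002     # USD per search + rerank, assumed independent of top_k


def request_cost(top_k: int) -> float:
    """Total cost of one request when `top_k` retrieved chunks are stuffed into the prompt."""
    input_tokens = INSTRUCTION_TOKENS + QUESTION_TOKENS + top_k * TOKENS_PER_CHUNK
    inference = (input_tokens / 1000) * PRICE_IN_PER_1K + (OUTPUT_TOKENS / 1000) * PRICE_OUT_PER_1K
    return RETRIEVAL_COST + inference


disciplined = request_cost(top_k=3)
just_in_case = request_cost(top_k=20)
print(f"top_k=3: ${disciplined:.5f}  top_k=20: ${just_in_case:.5f}  ratio: {just_in_case / disciplined:.2f}x")
```

Under these assumptions the retrieval line item is identical in both cases, yet the "just in case" request costs about 2.6 times as much, before counting the extra latency.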

Teams justify this with “better safe than sorry.” Economically, that mindset is disastrous.

Pricing models that don’t account for retrieval discipline reward inefficiency. Users don’t see the cost, so they trigger workflows that retrieve far more than they need.

The system absorbs it until it can’t.

2.3 Layer 3: Context construction

Context is where AI systems quietly bleed money.

Every extra paragraph added to context increases cost, latency, and variance. Context growth is subtle. A small instruction added here. A clarification added there. A “just in case” rule appended after an incident. None of these decisions feel expensive in isolation.

Six months later, you have a bloated prompt that costs five times what it did at launch, and nobody remembers why.

From a pricing perspective, this is critical: context is one of the cost drivers you directly control, yet it’s rarely treated as an economic decision.

Pricing that ignores context growth assumes the system will never evolve.

It always does.
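A back-of-the-envelope sketch makes the drift visible. The launch prompt size, the list of additions, the monthly volume, and the input price are all hypothetical, chosen to reproduce the five-times growth described above. The sketch ignores the prompt-caching discounts some providers offer.

```python
LAUNCH_PROMPT_TOKENS = 600
# Hypothetical prompt additions accumulated after launch: (description, tokens added).
ADDITIONS = [
    ("formatting rules", 200),
    ("tone guidelines", 250),
    ("safety clarification after an incident", 400),
    ("few-shot examples", 1_200),
    ("edge-case instructions", 350),
]
REQUESTS_PER_MONTH = 1_000_000   # hypothetical volume
PRICE_IN_PER_1K = 0.0025         # hypothetical input price, USD per 1K tokens


def monthly_prompt_cost(prompt_tokens: int) -> float:
    """Cost of sending `prompt_tokens` of fixed instructions with every request for a month."""
    return prompt_tokens / 1000 * PRICE_IN_PER_1K * REQUESTS_PER_MONTH


current_tokens = LAUNCH_PROMPT_TOKENS + sum(tokens for _, tokens in ADDITIONS)
growth = current_tokens / LAUNCH_PROMPT_TOKENS

for name, tokens in ADDITIONS:
    print(f"{name}: +${monthly_prompt_cost(tokens):,.0f}/month")
print(f"prompt grew {growth:.1f}x: ${monthly_prompt_cost(LAUNCH_PROMPT_TOKENS):,.0f}"
      f" -> ${monthly_prompt_cost(current_tokens):,.0f} per month")
```

No single addition costs more than a few thousand dollars a month here, but together they take the prompt bill from $1,500 to $7,500 per month.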

2.4 Layer 4: Model execution

This is the layer everyone fixates on, and for good reason. Models are expensive, and choosing the wrong one at the wrong time can wipe out margins.

But the real mistake isn’t using large models. It’s using them by default.

In production systems, the correct model choice is almost never static. Some tasks require deep reasoning. Others require speed. Others require consistency.

Routing everything through the “best” model is a convenience decision disguised as a quality decision.

The economic cost of this laziness shows up slowly. Margins thin. Finance asks why. Engineering points to user demand. PMs argue quality. Everyone is technically correct, and the system still loses money.

Pricing that assumes a single model cost is fantasy pricing. Real AI pricing must assume dynamic routing, and it must be resilient to mistakes in that routing.
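A minimal routing sketch, assuming three hypothetical model tiers with made-up per-request costs and a given integer "complexity" label per task (real routers derive that label with heuristics or a small classifier model). The last function adds an assumed escalation rate: a share of tasks sent to cheaper tiers fail and are re-run on the largest model, which is one way to check that the savings survive routing mistakes.

```python
# Hypothetical tiers: (name, cost per request in USD, hardest complexity it handles well).
MODEL_TIERS = [
    ("small", 0.001, 1),
    ("medium", 0.01, 2),
    ("large", 0.06, 3),
]
LARGEST_NAME, LARGEST_COST, _ = MODEL_TIERS[-1]


def route(complexity: int) -> tuple[str, float]:
    """Pick the cheapest tier rated for the task's complexity (1 = simple, 3 = hard)."""
    for name, cost, max_complexity in MODEL_TIERS:
        if complexity <= max_complexity:
            return name, cost
    return LARGEST_NAME, LARGEST_COST


def workload_cost(complexities: list[int], always_largest: bool = False) -> float:
    if always_largest:
        return len(complexities) * LARGEST_COST
    return sum(route(c)[1] for c in complexities)


def workload_cost_with_escalation(complexities: list[int], escalation_rate: float) -> float:
    """Assume `escalation_rate` of tasks routed below the largest tier fail and are re-run on it."""
    downgraded = sum(1 for c in complexities if route(c)[0] != LARGEST_NAME)
    return workload_cost(complexities) + escalation_rate * downgraded * LARGEST_COST


# Hypothetical traffic mix: 70% simple, 20% medium, 10% hard tasks.
traffic = [1] * 700 + [2] * 200 + [3] * 100
print(f"always largest: ${workload_cost(traffic, always_largest=True):.2f}")
print(f"routed: ${workload_cost(traffic):.2f}")
print(f"routed, 10% escalations: ${workload_cost_with_escalation(traffic, 0.10):.2f}")
```

Under this mix, routing cuts the bill from $60 to about $8.70, and even with one in ten downgraded tasks escalating, it stays near $14.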

2.5 Layer 5: Orchestration and retries

Modern AI products are not single calls. They are workflows.

A single user action might trigger a planner, a worker, a validator, a formatter, and a fallback path if confidence is low. Each of these steps may call a model. Some may retry automatically. Others may escalate to a heavier model.

None of this is visible to the user, which makes it easy to forget it exists when setting prices.

But orchestration is where AI costs multiply silently. One user request becomes five or ten model calls. A single retry doubles cost instantly. A safety check adds latency and compute but no visible feature.

These costs are the result of good intentions: reliability, safety, quality. That’s why teams hesitate to price for them explicitly. But ignoring them doesn’t make them free. It just hides them until margins collapse.

Pricing that doesn’t fund orchestration complexity is effectively betting that reliability won’t matter.

It always does.
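The arithmetic of a workflow is easy to sketch. The step names follow the paragraph on orchestration; the per-call costs, retry probabilities, retry cap, and fallback rate are hypothetical, and failures are assumed to be independent across attempts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    cost: float          # USD per model call (hypothetical)
    retry_prob: float    # chance a call fails and is retried (hypothetical)
    max_retries: int = 2


def expected_calls(step: Step) -> float:
    """Expected calls for one step: attempt k + 1 happens only if the first k attempts all failed."""
    return sum(step.retry_prob ** k for k in range(step.max_retries + 1))


def expected_request_cost(steps: list[Step], fallback_prob: float, fallback_cost: float) -> float:
    """Expected cost of one user request: every step, its retries, plus an occasional fallback."""
    steps_cost = sum(expected_calls(s) * s.cost for s in steps)
    return steps_cost + fallback_prob * fallback_cost


workflow = [
    Step("planner", cost=0.002, retry_prob=0.05),
    Step("worker", cost=0.020, retry_prob=0.15),
    Step("validator", cost=0.004, retry_prob=0.05),
    Step("formatter", cost=0.001, retry_prob=0.0),
]
total = expected_request_cost(workflow, fallback_prob=0.10, fallback_cost=0.06)
calls = sum(expected_calls(s) for s in workflow) + 0.10   # plus the expected fallback call
print(f"expected calls per request: {calls:.2f}, expected cost: ${total:.4f}")
```

If only the worker call were priced, the estimate would be $0.02 per request; under these assumptions the expected cost is roughly $0.037, nearly double.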

2.6 Layer 6: Parallelism and concurrency

If there is one layer that kills otherwise healthy AI businesses, it’s this one.

Parallelism is not about how many total requests you handle. It’s about how many you handle at the same time.

Ten users spread across an hour are cheap. Ten users hitting the system in the same second are expensive.

They force you to provision capacity for peak load, not average behavior. That capacity costs money whether it’s used or not.

This is why AI systems feel fine in testing and fall apart under success.

Early usage is staggered and forgiving. Real adoption is spiky, synchronized, and merciless.

Pricing that doesn’t account for concurrency implicitly promises infinite capacity. The system cannot deliver that promise without burning cash.

Capacity-aware pricing is not an enterprise luxury. It’s a survival mechanism.
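Little's law (requests in flight = arrival rate x average time each request spends in the system) gives a quick way to size capacity. This sketch assumes hypothetical average and peak request rates, an 8-second average latency for long reasoning calls, 20% headroom, and a made-up hourly price per concurrent slot; it compares the capacity required for the peak with what the average load alone would suggest.

```python
import math

HEADROOM = 0.2              # safety margin on top of Little's law (assumption)
COST_PER_SLOT_HOUR = 0.50   # hypothetical cost of one concurrent serving slot, USD
HOURS_PER_MONTH = 24 * 30


def required_slots(requests_per_second: float, avg_latency_s: float) -> int:
    """Little's law: in-flight requests = arrival rate x time each request holds a slot."""
    in_flight = requests_per_second * avg_latency_s
    return math.ceil(in_flight * (1 + HEADROOM))


AVG_RPS, PEAK_RPS, LATENCY_S = 5.0, 60.0, 8.0   # hypothetical traffic shape

avg_slots = required_slots(AVG_RPS, LATENCY_S)
peak_slots = required_slots(PEAK_RPS, LATENCY_S)
utilization = (AVG_RPS * LATENCY_S) / peak_slots   # average in-flight / provisioned slots
monthly_cost_for_peak = peak_slots * COST_PER_SLOT_HOUR * HOURS_PER_MONTH
monthly_cost_for_avg = avg_slots * COST_PER_SLOT_HOUR * HOURS_PER_MONTH

print(f"slots for average load: {avg_slots}, for peak: {peak_slots}")
print(f"average utilization of peak capacity: {utilization:.0%}")
print(f"monthly capacity cost: ${monthly_cost_for_peak:,.0f}"
      f" (vs ${monthly_cost_for_avg:,.0f} if sized for average)")
```

Sizing for the spike in this example means paying for twelve times the capacity the average load needs, with roughly 7% average utilization.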

2.7 Layer 7: Evaluation, monitoring, and guardrails

The final layer is the one serious teams can’t avoid.

If your AI system matters, you will pay to monitor it. If it touches money, decisions, customers, or risk, you will log outputs, evaluate quality, audit failures, and add guardrails.

These costs scale with importance, not usage. The more people rely on the system, the more you invest here. And unlike tokens, these costs don’t shrink when usage drops. They are structural.

Pricing models that pretend evaluation is “overhead” are lying to themselves. If your product requires trust, trust must be priced in.

2.8 Why these layers compound, not add

The most important thing to understand about the seven layers is that they interact.

A retrieval inefficiency inflates context. Inflated context increases inference cost. Higher inference cost encourages routing to smaller models, which increases error rates. Errors trigger retries. Retries increase concurrency pressure. Concurrency pressure forces overprovisioning. Overprovisioning raises baseline cost.

This is how AI costs spiral without any single decision looking “wrong.”

And this is why pricing must be conservative by design. You are not pricing a stable machine. You are pricing a living system that accumulates complexity over time.
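The difference between adding and compounding is easy to underestimate. In this sketch each layer's overhead is a hypothetical multiplier on a clean baseline cost of 1.0; the additive estimate sums the overheads, while the compounded estimate multiplies them, which is what happens when each layer scales everything downstream of it.

```python
import math

# Hypothetical per-layer cost multipliers relative to a clean baseline (1.0 = no overhead).
LAYER_MULTIPLIERS = {
    "retrieval: extra chunks pulled": 1.30,
    "context: inflated prompt": 1.40,
    "routing: retries after cheap-model errors": 1.20,
    "orchestration: extra validation calls": 1.15,
    "concurrency: overprovisioning for peaks": 1.25,
}


def additive_estimate(multipliers: dict[str, float]) -> float:
    """What a line-item view assumes: each inefficiency adds its own overhead independently."""
    return 1.0 + sum(m - 1.0 for m in multipliers.values())


def compounded_estimate(multipliers: dict[str, float]) -> float:
    """What a layered system does: each layer scales the cost of everything downstream."""
    return math.prod(multipliers.values())


print(f"additive view:   {additive_estimate(LAYER_MULTIPLIERS):.2f}x baseline")
print(f"compounded view: {compounded_estimate(LAYER_MULTIPLIERS):.2f}x baseline")
```

Five overheads that each look modest come to 2.3x the baseline when summed, but about 3.1x when multiplied.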

2.9 What this means for pricing decisions

Once you see the full cost stack, one thing becomes clear: pricing must absorb uncertainty.

You cannot price AI assuming perfect efficiency, perfect routing, perfect retrieval, and perfect behavior. You must price assuming drift, mistakes, and growth in complexity.

The teams that survive are not the ones with the lowest per-token cost. They are the ones whose pricing models are resilient to the system getting messier over time.

If your pricing only works when everything goes right, it doesn’t work.

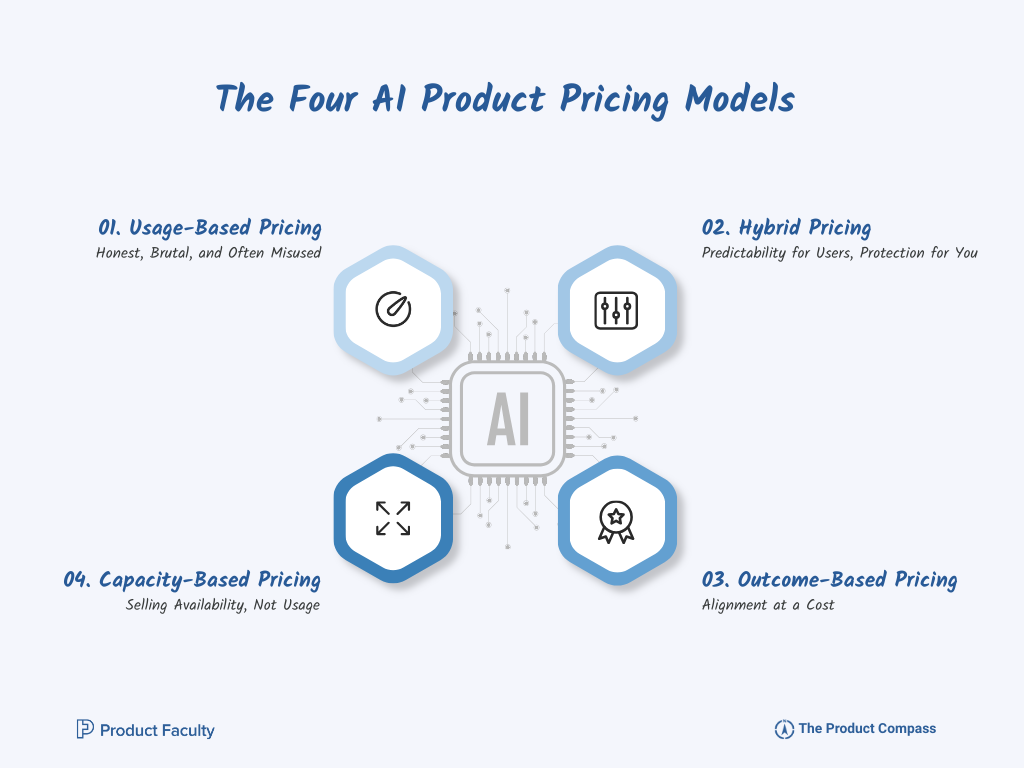

3. The Four AI Product Pricing Models (and When to Use Each)

Once you accept AI pricing can’t be treated like SaaS pricing, the next mistake is over-engineering the solution.

Teams invent exotic hybrids, clever credit systems, abstract “AI units,” or opaque bundles that look smart on a slide but collapse the moment real users touch the product.

In practice, AI products converge to four pricing models that survive real usage.

What matters is not creativity. What matters is whether the pricing model maps cleanly to how cost is generated inside your system, and whether it nudges users toward behavior your system can afford.

3.1 Usage-based pricing: honest, brutal, and often misused

Usage-based pricing is the most straightforward model: users pay for what they consume. Tokens, queries, compute units, requests… pick your unit.

This model feels “right” to engineers and finance teams because it aligns cleanly with marginal cost. Every extra unit of usage produces revenue. Every spike in cost is theoretically covered.

The problem is that users are not economists.

In practice, usage pricing introduces something that kills many AI products before they reach maturity: meter anxiety. The moment users feel like every interaction is ticking a meter, they subconsciously pull back. They stop experimenting. They avoid edge cases. They use the product less, precisely when they should be discovering where the value actually lies.

This is why usage pricing works best in environments where users already expect it. Developer tools. APIs. Infrastructure. Places where buyers think in throughput, budgets, and efficiency. In those contexts, usage pricing is not scary; it’s familiar.

But when teams apply usage pricing to productivity tools, consumer products, or exploratory workflows, adoption stalls. Users don’t want to think about cost while they’re still learning what the product can do.

There’s another subtle risk: usage pricing assumes users can control cost drivers. In AI systems, they often can’t. A user might submit the same request twice and get two radically different internal cost profiles because one path triggered retries or heavier models.

From the user’s perspective, that feels unfair, even if it’s economically justified.

Usage pricing works when:

users understand what drives cost

the system behaves predictably

users are comfortable optimizing their own usage

If any of those fail, usage pricing becomes a growth ceiling, not a revenue lever.

Example: OpenAI API

OpenAI prices its API based on input and output tokens. The more tokens you send and receive, the more you pay. This is the clearest and most widely accepted example of usage-based pricing in AI. Nearly every major AI API provider follows this same principle.
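Token metering itself is simple arithmetic. This sketch uses hypothetical per-million-token rates (check your provider's current price list for real ones) and shows how two requests that look alike to a user, "answer my question", can carry bills that differ by more than an order of magnitude.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenRates:
    """Hypothetical prices in USD per million tokens; input and output are billed separately."""
    input_per_million: float
    output_per_million: float


def usage_charge(input_tokens: int, output_tokens: int, rates: TokenRates) -> float:
    """Charge for one request: input and output tokens at their own rates."""
    return (input_tokens * rates.input_per_million
            + output_tokens * rates.output_per_million) / 1_000_000


rates = TokenRates(input_per_million=2.50, output_per_million=10.00)
# Same user intent, very different internal token counts.
short_answer = usage_charge(1_200, 300, rates)
long_agentic_run = usage_charge(60_000, 8_000, rates)
print(f"short answer: ${short_answer:.4f}, long agentic run: ${long_agentic_run:.4f}")
```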

3.2 Hybrid pricing: predictability for users, protection for the business

Hybrid pricing exists because pure usage pricing is too harsh for most real-world products.

In a hybrid model, users pay a base subscription that includes a reasonable amount of usage, with overages kicking in once they exceed that baseline. Psychologically, this creates safety. Economically, it creates a buffer.

This is the most common and most misunderstood AI pricing model.

The strength of hybrid pricing is that it decouples exploration from punishment. Users can play, learn, and build habits without watching a meter, while the business retains the ability to capture revenue from heavy or expensive usage.

But hybrid pricing fails when teams treat it as a generosity exercise instead of a control system.

The included usage must reflect what the system can handle sustainably for the median user, not what looks attractive on a pricing page. Over-including usage trains users to behave expensively, and once that behavior is learned, clawing it back is painful.

Another common mistake is hiding overage mechanics. Teams worry that showing overage pricing will scare users, so they bury it or avoid it altogether. This backfires later when costs spike and pricing has to change abruptly.

Done well, hybrid pricing creates a quiet but powerful dynamic: most users stay comfortably within the base tier, while a minority of heavy users fund the variance for everyone else. Done poorly, it subsidizes your most expensive customers indefinitely.
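A minimal sketch of the mechanics, with a hypothetical plan (base fee, included credits, overage price) and a hypothetical serving cost per credit. The helper encodes the rules described above: a user who consumes exactly the included allowance should still be profitable, and overage should be priced above cost so heavy users fund variance rather than consume the margin.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridPlan:
    """Hypothetical plan: monthly base fee with included credits, then metered overage."""
    base_fee: float
    included_credits: int
    overage_price_per_credit: float


def monthly_bill(plan: HybridPlan, credits_used: int) -> float:
    overage_credits = max(0, credits_used - plan.included_credits)
    return plan.base_fee + overage_credits * plan.overage_price_per_credit


def plan_is_sustainable(plan: HybridPlan, cost_per_credit: float) -> bool:
    """Full use of the allowance must stay profitable, and every overage credit must earn margin."""
    allowance_covered = plan.base_fee >= plan.included_credits * cost_per_credit
    overage_profitable = plan.overage_price_per_credit > cost_per_credit
    return allowance_covered and overage_profitable


plan = HybridPlan(base_fee=20.0, included_credits=1_000, overage_price_per_credit=0.03)
COST_PER_CREDIT = 0.012   # hypothetical serving cost per credit, USD

for credits_used in (300, 1_000, 5_000):
    bill = monthly_bill(plan, credits_used)
    cost = credits_used * COST_PER_CREDIT
    print(f"{credits_used:>5} credits: bill ${bill:.2f}, cost ${cost:.2f}, margin ${bill - cost:.2f}")
```

With these numbers, a plan that included 5,000 credits for the same $20 would fail the check: full use of the allowance would cost $60 to serve.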

Hybrid pricing is ideal when:

users need freedom to explore

costs vary widely across users

you want predictable revenue without unlimited exposure

Example: Notion AI

Notion AI is bundled into a subscription that includes a fixed allocation of AI credits. Once users exceed those credits, they must purchase additional credits or upgrade to a higher plan. This is a classic example of hybrid pricing: subscription first, usage second.

3.3 Outcome-based pricing: alignment at a cost

Outcome-based pricing is the model everyone talks about and few teams can sustain.

Instead of paying for usage, you pay for results: a ticket resolved, a lead qualified, a document processed correctly.

From the customer’s perspective, this is perfect. They don’t care how the AI works. They care whether it delivers value.

From a system perspective, it is unforgiving.

Outcome pricing only works if teams can:

define outcomes unambiguously

measure them reliably

deliver consistently

absorb failures without destroying margins

Most AI systems aren’t stable enough for this early. When outcomes are priced, every failure becomes a revenue problem.

This forces heavy investment in evaluation, monitoring, and often human-in-the-loop.

There’s also a psychological trap: when users only pay for success, they push the system harder. They retry more. They test boundaries. That behavior increases cost even when revenue stays flat.
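Expected unit economics make the risk visible. In this sketch revenue arrives only on success, compute is spent on every attempt (including user-driven retries), and failed tasks are assumed to go to paid human review; all inputs are hypothetical.

```python
def outcome_margin_per_task(
    price_per_success: float,
    success_rate: float,
    cost_per_attempt: float,
    attempts_per_task: float,
    human_review_cost: float,
) -> float:
    """Expected margin per task under outcome pricing (hypothetical cost model).

    Revenue is earned only on success; compute is spent on every attempt;
    failed tasks are assumed to be escalated to a paid human review.
    """
    revenue = success_rate * price_per_success
    cost = attempts_per_task * cost_per_attempt + (1 - success_rate) * human_review_cost
    return revenue - cost


mature = outcome_margin_per_task(1.00, success_rate=0.90, cost_per_attempt=0.15,
                                 attempts_per_task=1.2, human_review_cost=2.00)
early = outcome_margin_per_task(1.00, success_rate=0.60, cost_per_attempt=0.15,
                                attempts_per_task=2.5, human_review_cost=2.00)
print(f"mature system: ${mature:+.3f} per task, early system: ${early:+.3f} per task")
```

Same price, same per-call cost: in this model, what separates a healthy margin from a loss is reliability and how hard users push when tasks fail.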

Outcome pricing works best when:

the task is narrow and well-defined

the value of success is high

the system is mature enough that reliability is not a question

For early-stage AI products, outcome pricing is often aspirational. It becomes viable later, once the system has proven reliable.

Example: Enterprise AI Automation & Copilot-Style Agents

In enterprise environments, companies (including Microsoft through its evolving Copilot strategy) are increasingly exploring pricing based on work performed by AI agents, rather than raw token usage. Microsoft CEO Satya Nadella discussed this in a podcast conversation with Dwarkesh Patel.

This moves pricing closer to outcome-aligned models, where customers pay for completed tasks or delivered value.

3.4 Capacity-based pricing: selling availability, not usage

Capacity-based pricing is underused despite mapping well to how AI systems fail.

Instead of paying for how much you consume, you pay for how much capacity you reserve: concurrency limits, throughput guarantees, response-time SLAs, parallel workflows.

This model recognizes a truth: many AI costs are driven not by total volume, but by peak demand.

If ten customers hit the system at once, the business pays for that concurrency whether it is used continuously or not. Capacity pricing monetizes that reality.

From the customer’s perspective, this model makes sense in enterprise and mission-critical contexts. They don’t want “cheap.” They want reliable. They are willing to pay to know the system will respond when needed.

The challenge is that capacity pricing requires operational maturity. You must actually be able to enforce limits, manage queues, and honor guarantees. You can’t fake it.
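Enforcement is the part you can’t fake. Here is a minimal, single-process sketch of per-customer concurrency reservations. The class and customer names are hypothetical; a production system would enforce this in a shared gateway or store, and might queue or downgrade excess requests instead of rejecting them.

```python
import threading


class ReservedCapacity:
    """Hypothetical per-customer concurrency reservations.

    Each customer buys a number of concurrent slots. Requests beyond the
    reservation are rejected here; a real system might queue them or send
    them to a lower-priority shared pool instead.
    """

    def __init__(self, reservations: dict[str, int]) -> None:
        self._limits = dict(reservations)
        self._in_flight = {customer: 0 for customer in reservations}
        self._lock = threading.Lock()

    def try_acquire(self, customer: str) -> bool:
        """Claim a slot if the customer is under its reservation."""
        with self._lock:
            if self._in_flight[customer] >= self._limits[customer]:
                return False
            self._in_flight[customer] += 1
            return True

    def release(self, customer: str) -> None:
        """Return a slot when a request finishes."""
        with self._lock:
            if self._in_flight[customer] == 0:
                raise RuntimeError(f"release without acquire for {customer!r}")
            self._in_flight[customer] -= 1

    def in_flight(self, customer: str) -> int:
        with self._lock:
            return self._in_flight[customer]


capacity = ReservedCapacity({"acme": 2, "globex": 10})
results = [capacity.try_acquire("acme") for _ in range(3)]   # third request exceeds the reservation
capacity.release("acme")                                      # one request finishes
after_release = capacity.try_acquire("acme")                  # a slot is available again
```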

Capacity pricing works best when:

latency matters

concurrency drives cost

customers value reliability over raw usage

workloads are predictable in bursts

It is rarely the first pricing model a company adopts, but it is often the one that unlocks sustainable scale.

Example: GPU / AI Compute Marketplaces

Platforms like SF Compute allow organizations to buy or reserve compute capacity, such as GPU time, instead of paying per request. This reflects capacity-based pricing, where customers pay for guaranteed availability and peak throughput rather than average usage.

3.5 The real mistake: choosing based on fashion, not physics

What kills pricing strategies isn’t picking the “wrong” model. It’s picking a model that doesn’t match the physics of your system.

If costs spike with concurrency but pricing is purely usage-based, you lose money under success.

If costs vary wildly by task complexity but pricing is per seat, heavy users destroy margins.

If the system is unstable but pricing is per outcome, reliability costs overwhelm revenue.

Pricing models are economic constraints. They must reflect how your system behaves, not how you wish it behaved.

3.6 The rule you learn in postmortems

If your pricing model doesn’t get stricter as usage grows, your margins will get worse as success grows.

Every viable AI pricing model tightens constraints as demand increases. It charges more, limits capacity, enforces overages, or demands higher commitment.

If pricing only encourages more usage without increasing discipline, it isn’t pricing. It’s a subsidy that increases your problems.
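A small sketch of the rule, with hypothetical numbers. It assumes serving cost per unit rises with usage (heavier users tend to run longer, retry-heavy workflows) and compares a flat price with a schedule that charges progressively more per unit as usage grows. The tier boundaries and rates are illustrative only.

```python
INF = float("inf")


def tiered_amount(units: int, tiers: list[tuple[float, float]]) -> float:
    """Sum units x rate across ascending (upper_bound, rate_per_unit) bands."""
    total, lower = 0.0, 0.0
    for upper, rate in tiers:
        if units <= lower:
            break
        total += (min(units, upper) - lower) * rate
        lower = upper
    return total


def serving_cost(units: int) -> float:
    # Hypothetical: each band of usage is costlier to serve than the one before.
    return tiered_amount(units, [(1_000, 0.01), (10_000, 0.02), (INF, 0.04)])


def flat_price(units: int) -> float:
    # The flat plan ignores usage entirely.
    return 30.0


def tightening_price(units: int) -> float:
    # Base fee covers the first 1,000 units; the marginal price then rises with usage.
    return 30.0 + tiered_amount(units, [(1_000, 0.0), (10_000, 0.03), (INF, 0.06)])


for units in (500, 5_000, 50_000):
    cost = serving_cost(units)
    print(f"{units:>6} units | flat margin {flat_price(units) - cost:>9.2f}"
          f" | tightening margin {tightening_price(units) - cost:>9.2f}")
```

Under these assumptions the flat plan loses money from the middle usage level onward, while the tightening schedule keeps margin positive at every level shown.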

Side Note: Pricing is system design. You can’t do it well without understanding how AI products actually work.

If you want to learn that end to end, here’s the program I recommend: AI Product Management Certification (with Miqdad Jaffer, Product Lead at OpenAI). I lead the AI Builds Lab and run 3 live sessions in the cohort.

Next cohort: January 26, 2026. $500 off for our community:

Continue Reading

Up to here, you’ve got the foundation for AI product pricing (3,800+ words):

why AI pricing is different from SaaS

the 7-layer cost stack (and why the layers compound)

the 4 pricing models that survive real usage

the rule you learn in postmortems

If you want the practical part, the rest (4,200+ words) goes deeper into:

stability vs scale, and how premium tiers are really “stability budgets”

a decision tree you can apply to your product

an AI P&L breakdown (including peak behavior and concurrency, even when you use APIs)

AI cost glossary

If this helped, forward sections 2 and 3 to your product and engineering leaders. It’s the fastest way to get aligned before you ship pricing.
