14 Principles of Building AI Agents (Learned the Hard Way)

What I learned by building 50+ AI agents and copying the Multi-Agent Research System by Anthropic. Best practices and mistakes to avoid.

Hey, welcome to the archived edition of The Product Compass newsletter.

Updated: 11/2/2025 (added experiment results for GPT-5)

In recent months, I’ve built 50+ AI agents, experimented with 7+ agentic frameworks, and replicated Anthropic’s Multi-Agent Research System.

Here's what I learned (best practices and mistakes to avoid):

1. Don’t Use Agents If You Don’t Have To

Nobody cares if it's an AI agent or a simple script, as long as it works. A good old if/else is faster, cheaper, and more reliable. And it's often all you need.

Save agents for when you really need them. They can easily become a liability: slower, costlier, and less predictable than plain code.
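Here's a minimal sketch of the idea, assuming a hypothetical support-ticket router. Known requests are handled by plain rules, and only what the rules can't cover reaches the agent. `llm_agent` is a hypothetical placeholder for whatever model call you'd actually make.

```python
import re
from typing import Callable


def llm_agent(message: str) -> str:
    # Hypothetical stand-in for an LLM-backed agent (a real one would call a model API).
    return "agent: " + message


def route_ticket(message: str, fallback: Callable[[str], str] = llm_agent) -> str:
    """Handle predictable requests with if/else; send only unknown cases to the agent."""
    text = message.lower()
    if "reset" in text and "password" in text:
        return "Send password-reset link"
    if re.search(r"\border\s*#?\d+", text):  # e.g. "order #123"
        return "Look up order status"
    if "refund" in text:
        return "Open refund form"
    # Anything the rules don't cover goes to the slower, costlier agent.
    return fallback(message)
```

In practice, you'd log which branch fired. If most traffic ends up in the fallback, that's a signal the agent is earning its cost; if not, the rules are doing the job.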

2. Small, Specialized, and Decoupled

Think "team of specialists," not "one agent to rule them all." A planner plans. A summarizer summarizes. A verifier checks. Decoupled agents are cheaper to run, easier to test and fix, and way more predictable.

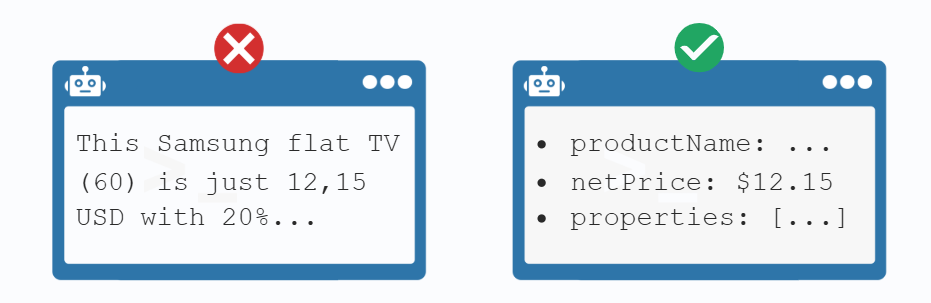
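A minimal sketch of a "team of specialists," assuming a research-style pipeline. `planner`, `summarizer`, and `verifier` are hypothetical stand-ins with deterministic bodies so the example runs; in a real system, each would wrap its own short LLM prompt. What matters is the narrow, typed interface between them, which lets you test and fix each one in isolation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Plan:
    steps: list[str]


@dataclass
class Summary:
    text: str
    sources: list[str]


def planner(question: str) -> Plan:
    """Plans only: turns a question into research steps (hypothetical stub)."""
    return Plan(steps=[f"search: {question}", f"read top results for: {question}"])


def summarizer(plan: Plan, findings: dict[str, str]) -> Summary:
    """Summarizes only: condenses the findings for the planned steps (hypothetical stub)."""
    used = [step for step in plan.steps if step in findings]
    return Summary(text=" ".join(findings[s] for s in used), sources=used)


def verifier(summary: Summary) -> bool:
    """Checks only: rejects summaries that are empty or have no sources."""
    return bool(summary.text.strip()) and len(summary.sources) > 0


def run(question: str, findings: dict[str, str]) -> Optional[Summary]:
    plan = planner(question)
    summary = summarizer(plan, findings)
    return summary if verifier(summary) else None
```

Because each specialist has one job and a typed contract, swapping the stub summarizer for a real model call doesn't touch the planner or the verifier.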

3. Enforce Structured Output

I've learned that free-form text is a mess to deal with. JSON is easier to debug, cheaper to parse, and acts as a contract between agents.

Bonus: you can validate it automatically and stop errors before they spread.

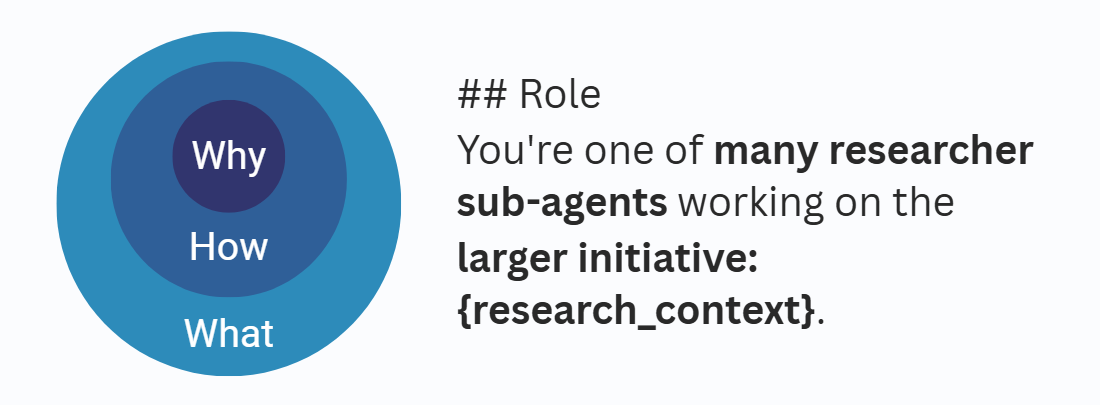
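Here's a stdlib-only sketch of that validation step, assuming a hypothetical `ResearchResult` contract between two agents. In practice, a library such as Pydantic or a JSON Schema validator does this more thoroughly; the point is that a malformed reply fails loudly at the boundary instead of leaking into the next agent.

```python
import json
from dataclasses import dataclass


@dataclass
class ResearchResult:
    # Hypothetical contract between two agents; adapt the fields to your pipeline.
    topic: str
    findings: list[str]
    confidence: float


def parse_result(raw: str) -> ResearchResult:
    """Parse an agent's JSON reply and reject anything that breaks the contract."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError subclass) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {"topic": str, "findings": list, "confidence": (int, float)}
    for name, typ in expected.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(data[name], typ) or isinstance(data[name], bool):
            raise ValueError(f"wrong type for field: {name}")
    if not all(isinstance(item, str) for item in data["findings"]):
        raise ValueError("findings must be a list of strings")
    if not 0.0 <= data["confidence"] <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return ResearchResult(data["topic"], data["findings"], float(data["confidence"]))
```

Since every failure raises `ValueError`, the caller can catch one exception type and decide whether to retry the agent or stop.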

4. Explain the Why, Not Just the What

I've discovered that anthropomorphizing AI works in many contexts. Here, lead with context, not control.

When delegating a task, don't just define the objective. Explain why it matters and provide the context in which you need it.

This helps AI agents make better decisions with shorter prompts.
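One way to make this a habit is to give every delegated task the same shape. This is a minimal sketch; `Delegation` and its fields are hypothetical names, not a standard format. It simply forces you to fill in the "why" and the context next to the objective.

```python
from dataclasses import dataclass, field


@dataclass
class Delegation:
    # Hypothetical structure for a task handed to a sub-agent.
    objective: str  # what to do
    why: str        # why it matters
    context: str    # where and how the result will be used
    constraints: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render the brief as a short prompt: objective, reason, context, then limits."""
        lines = [
            f"Objective: {self.objective}",
            f"Why it matters: {self.why}",
            f"Context: {self.context}",
        ]
        if self.constraints:
            lines.append("Constraints:")
            lines.extend(f"- {c}" for c in self.constraints)
        return "\n".join(lines)
```

For example, `Delegation("Find three competitors", "We are deciding pricing", "The list feeds a pricing memo", ["Max 100 words"]).to_prompt()` yields a five-line brief where the agent knows not just what to find, but what the result is for.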

5. Orchestration > Autonomy

Autonomy sounds great, but what you need more in practice is predictability. Move all known logic (if/then, loops, retries, known procedures) out of agent prompts and into the orchestration layer.
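Here's a minimal sketch, assuming a hypothetical `agent` callable that labels one item at a time and replies with a JSON string containing a `label` field. The loop, the retry budget, the validation rule, and the fallback all live in plain Python, so the agent's prompt only has to describe a single step.

```python
import json
from typing import Callable


def orchestrate(
    items: list[str],
    agent: Callable[[str], str],
    max_retries: int = 2,
) -> dict[str, dict]:
    """Known logic (loop, retries, validation, fallback) lives here, not in the prompt."""
    results: dict[str, dict] = {}
    for item in items:                         # known loop
        for _ in range(max_retries + 1):       # known retry policy
            try:
                reply = json.loads(agent(item))
            except ValueError:                 # malformed JSON: try again
                continue
            if isinstance(reply, dict) and "label" in reply:  # known validation rule
                results[item] = reply
                break
        else:
            # Runs only if no attempt succeeded: known fallback procedure.
            results[item] = {"label": "needs_review"}
    return results
```

If the agent returns bad JSON on the first try and a valid reply on the second, the orchestrator retries and keeps the valid one; if every attempt fails, the item is flagged for review instead of the agent improvising.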

6. Prompt Engineering > Fine-Tuning

Before you jump to fine-tuning, ask: Why is the model failing?

If it’s missing facts → try RAG.

If the formatting is wrong or the output doesn’t follow your brand style → maybe fine-tune. But 80% of the time, it’s just a prompt problem.
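The checklist above fits in a tiny helper. This is one reading of it, not a formal method: `Failure` and `next_step` are hypothetical names, and the "fix the prompt first, fine-tune only if that fails" order follows the 80% rule of thumb.

```python
from enum import Enum


class Failure(Enum):
    MISSING_FACTS = "missing_facts"      # the model lacks information it needs
    FORMAT_OR_STYLE = "format_or_style"  # wrong output format or off-brand tone
    OTHER = "other"


def next_step(failure: Failure, prompt_fixes_tried: bool) -> str:
    """Suggest a remedy: RAG for missing facts, prompt work first, fine-tuning last."""
    if failure is Failure.MISSING_FACTS:
        return "add retrieval (RAG)"
    if failure is Failure.FORMAT_OR_STYLE and prompt_fixes_tried:
        return "consider fine-tuning"
    return "improve the prompt"
```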
