The Ultimate Guide to Fine-Tuning for PMs

Your playbook: when to fine-tune, when to stick with RAG, and how to practice the exact methods top AI teams use (SFT, DPO, ORPO). Step-by-step. No coding.

Fine-tuning is one of the most powerful techniques product teams can easily use to adapt a model (e.g., an LLM) to their needs.

But it’s easy to confuse it with RAG or context engineering.

What’s the difference? How do you decide which method to use? And how can you practice fine-tuning starting today?

In today’s issue, we discuss:

The Big Picture: What is Fine-Tuning?

Fine-Tuning vs. Reinforcement Learning

Fine-Tuning (Gradient-Based Methods) in Depth

🔒Step-by-Step: How to Teach an LLM to Do Request Classification Using SFT

🔒Step-by-Step: How to Make an LLM More Human-Like Using DPO

🔒How to Choose the Right Fine-Tuning Approach

Hey, it’s Paweł 🙂 Welcome to the Product Compass, the #1 actionable AI PM newsletter. Every week I share research and hands-on guides to help you:

Break into the top 10% of AI PMs

Learn by building, not just reading

Future-proof your career, whatever comes next


1. The Big Picture: What is Fine-Tuning?

At its core, fine-tuning is about teaching a model new habits so that it performs a specific task better than a general-purpose model.

It's one of the key levers you have to make a general model fit your product.

Why should you care as a Product Manager?

In some cases, RAG and prompting (or, more broadly, context engineering) hit their limits.

For example:

You need a consistent style, brand voice, or complex structure.

You want to accurately classify inputs (e.g., inquiry type) at scale.

You struggle to eliminate mistakes (failure modes) with prompting.

The prompt becomes too long or too complex.

Imagine you’re playing basketball for the first time. Reading a book about how to throw a ball wouldn’t be enough. You need to practice and build muscle memory.

In fine-tuning, we train the model using examples. You directly show the model how it should respond and, depending on the specific fine-tuning method, what to avoid. This helps the model internalize the desired behavior rather than relying on detailed instructions every time.

As a result, a smaller, specialized model might outperform a larger and, theoretically, smarter off-the-shelf model.

2. Fine-Tuning vs. Reinforcement Learning

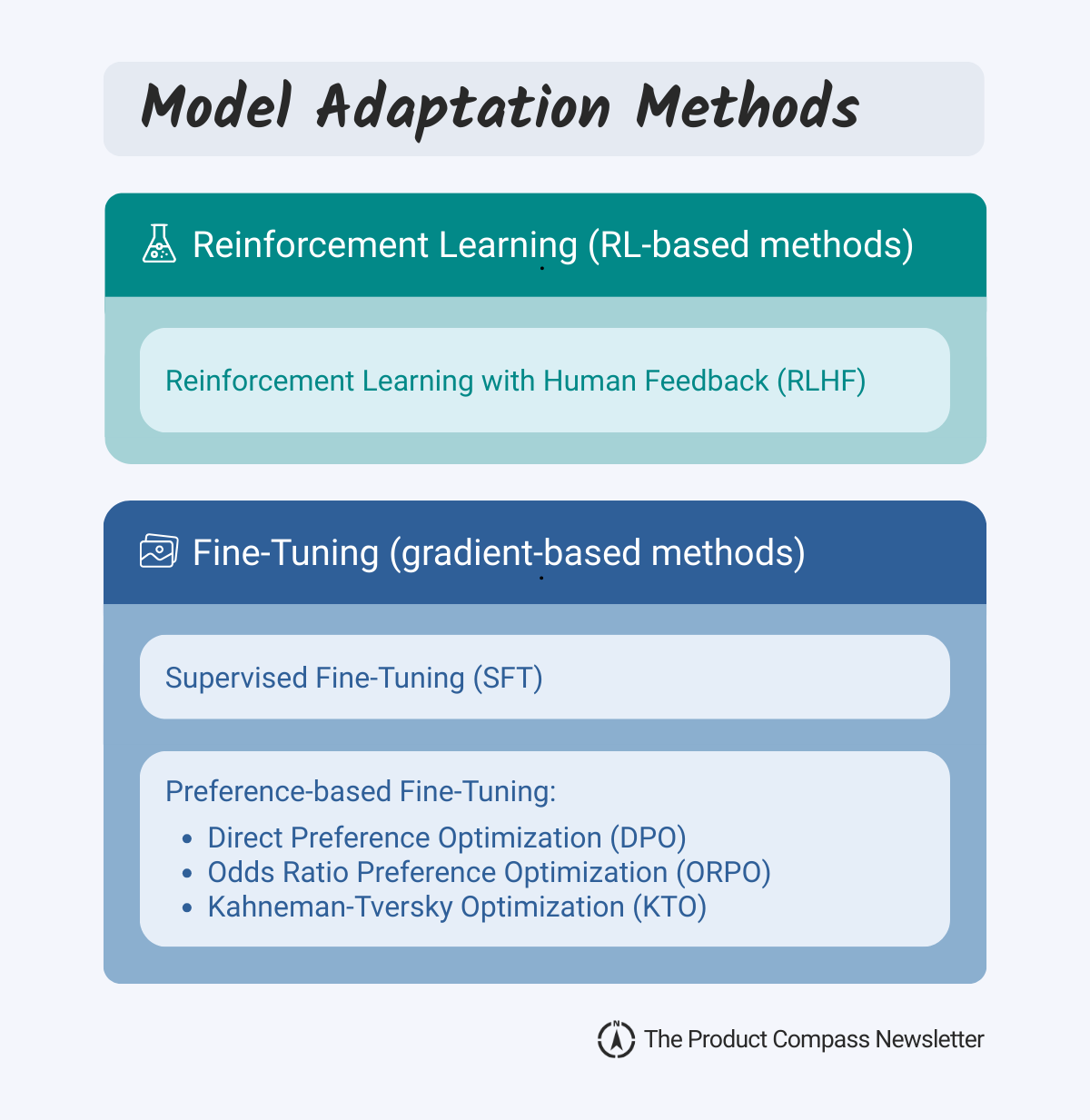

There are two primary ways to adjust the model to our needs: Reinforcement Learning (RL) and Fine-Tuning.

In Reinforcement Learning (RL), rather than studying examples, the model explores different options, gets feedback, and improves. Well-known examples include training an AI to play chess or Go, where wins and losses are easy to determine automatically.

A special type of RL is Reinforcement Learning from Human Feedback (RLHF), where model responses are assessed by human labelers.

Reinforcement Learning is powerful but also heavyweight and costly. It’s commonly used by AI labs. Fine-tuning is the primary lever for product teams.

3. Fine-Tuning (Gradient-Based Methods) in Depth

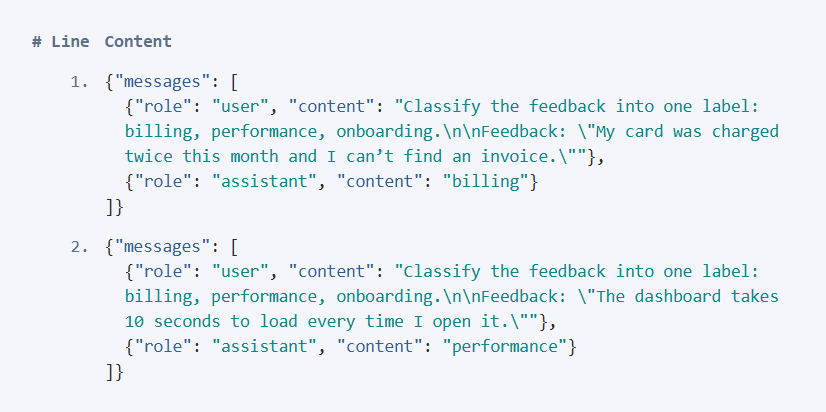

As discussed previously, fine-tuning is about training the model on examples. We typically use JSONL files that contain one training example per line.
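To make the format concrete, here is a minimal Python sketch (standard library only) that writes training examples to a JSONL file, one JSON object per line, and reads them back while checking that every line parses. The file name `train.jsonl` and the `text`/`label` fields are hypothetical placeholders; each method below expects its own field names.

```python
import json
import os
import tempfile

def write_jsonl(path, records):
    """Write each record as one JSON object on its own line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            # ensure_ascii=False keeps non-English text readable in the file
            f.write(json.dumps(record, ensure_ascii=False) + "\n")

def read_jsonl(path):
    """Read a JSONL file, failing loudly on any line that is not valid JSON."""
    records = []
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            line = line.strip()
            if not line:
                continue  # tolerate blank lines, e.g. a trailing newline
            try:
                records.append(json.loads(line))
            except json.JSONDecodeError as err:
                raise ValueError(f"line {line_no} is not valid JSON: {err}") from err
    return records

if __name__ == "__main__":
    # Hypothetical examples for a request classifier
    examples = [
        {"text": "I was charged twice this month.", "label": "billing"},
        {"text": "The app crashes when I log in.", "label": "technical"},
    ]
    path = os.path.join(tempfile.mkdtemp(), "train.jsonl")
    write_jsonl(path, examples)
    print(len(read_jsonl(path)), "examples")  # 2 examples
```

Because every line is an independent JSON object, a single malformed example can be located by its line number instead of breaking the whole file.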

Let’s discuss the common fine-tuning methods together with example files.

Group 1: Supervised Fine-Tuning (SFT)

SFT is the most common approach to fine-tuning. The model learns how to imitate good examples.

It works best when you have one correct answer per input. One perfect use case is text classification.

An example JSONL file:

Note: I wrapped lines visually, but a real JSONL file would have two lines.
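A minimal Python sketch of how a two-line SFT file for request classification could be produced. It assumes the chat-style `messages` format (system, user, assistant) that OpenAI's supervised fine-tuning accepts; the system prompt, requests, and category names are hypothetical.

```python
import json

# Hypothetical system prompt and label set for a support-request classifier
SYSTEM_PROMPT = "Classify the customer request as one of: billing, technical, account."
LABELS = {"billing", "technical", "account"}

def sft_example(request_text, label):
    """Build one SFT record: the assistant turn is the single correct answer."""
    if label not in LABELS:
        raise ValueError(f"unknown label: {label}")
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": request_text},
            {"role": "assistant", "content": label},
        ]
    }

labeled_requests = [
    ("I was charged twice for my subscription.", "billing"),
    ("The app freezes when I upload a file.", "technical"),
]

# Each json.dumps call yields one line, so this file has exactly two lines
jsonl_text = "\n".join(
    json.dumps(sft_example(text, label)) for text, label in labeled_requests
)
print(jsonl_text)
```

Rejecting unknown labels up front keeps typos like "biling" from silently becoming a new class the model learns to imitate.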

Group 2: Preference-based Fine-Tuning

Preference-based fine-tuning is a group of methods in which, instead of just imitating good examples, the model learns which outputs to prefer.

There are three major approaches:

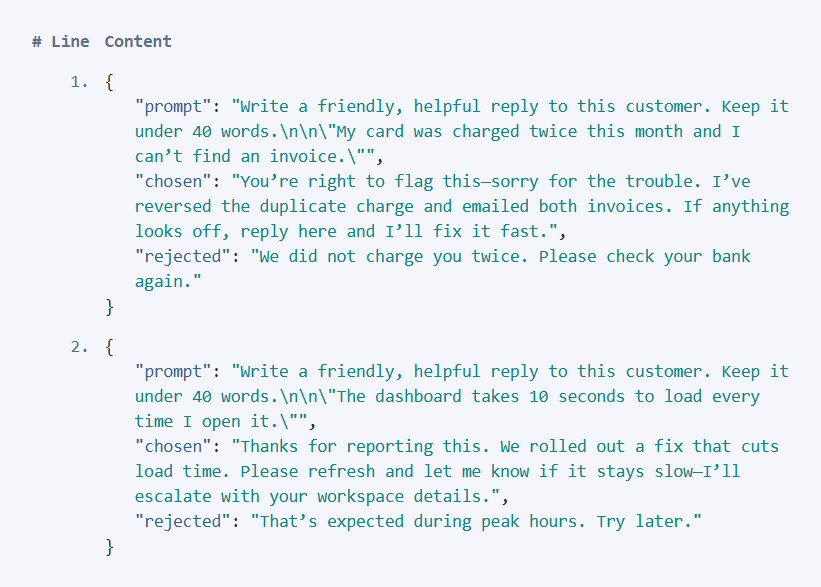

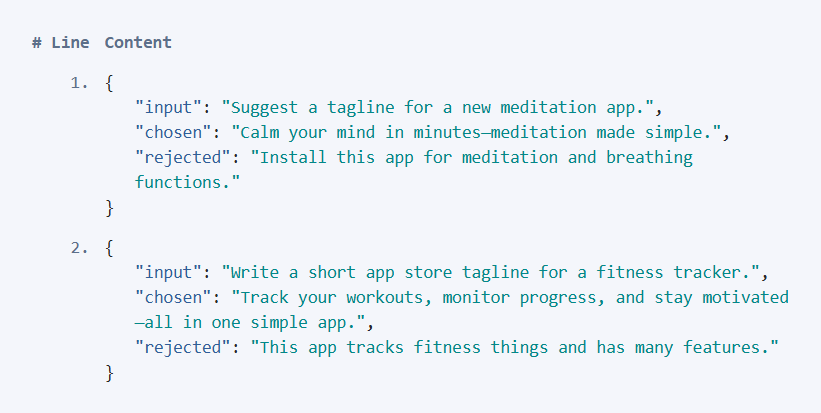

Approach 1: Direct Preference Optimization (DPO)

DPO is the second most common fine-tuning method. It compares your fine-tuned model to a frozen copy of the original model (“reference model”) to prevent drift.
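To show what comparing against a frozen reference model means numerically, here is a sketch of the standard DPO loss for a single preference pair in plain Python. It assumes you already have the summed log-probabilities of the chosen and rejected responses under both the model being trained and the frozen reference; `beta` (0.1 here, a value commonly used as a default) controls how strongly the model is held close to the reference.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (prompt, chosen, rejected) triple.

    Inputs are total log-probabilities of each response given the prompt,
    under the model being trained (policy) and the frozen reference model.
    """
    # How much more likely each response became relative to the reference
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    # Positive margin = the model favors "chosen" more than the reference did
    margin = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(margin)) = softplus(-margin), in a numerically stable form
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))

# Before training the policy equals the reference: margin is 0 and loss = log 2
print(round(dpo_loss(-12.0, -15.0, -12.0, -15.0), 4))  # 0.6931
# Shifting probability toward the chosen answer lowers the loss
print(dpo_loss(-10.0, -18.0, -12.0, -15.0) < math.log(2))  # True
```

Only the shifts relative to the reference enter the loss, which is why the frozen copy acts as an anchor against drift.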

To perform DPO, we must create a dataset of examples and for each define:

prompt (input),

chosen (preferred) output,

rejected (non-preferred) output.

An example JSONL file in the Hugging Face format:
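A minimal sketch of such a file, generated in Python. It assumes the plain-text `prompt`/`chosen`/`rejected` layout that Hugging Face TRL's DPO trainer accepts; the prompts and answers are hypothetical, written so that the warmer, more human-like reply is the preferred one.

```python
import json

# Hypothetical preference pairs: same prompt, one preferred and one rejected answer
preference_pairs = [
    {
        "prompt": "My order arrived damaged. What should I do?",
        "chosen": "I'm sorry your order arrived damaged! Reply with a photo and we'll send a replacement right away.",
        "rejected": "Submit a damage claim form.",
    },
    {
        "prompt": "Can I change my delivery address?",
        "chosen": "Sure! Open your order, tap 'Edit address', and save it before the parcel ships.",
        "rejected": "Address changes are possible before shipment.",
    },
]

def validate_pair(record):
    """Check that a record has exactly the three fields and distinct answers."""
    if set(record) != {"prompt", "chosen", "rejected"}:
        raise ValueError(f"unexpected fields: {sorted(record)}")
    if record["chosen"] == record["rejected"]:
        raise ValueError("chosen and rejected must differ to carry a preference")
    return record

dpo_jsonl = "\n".join(json.dumps(validate_pair(r)) for r in preference_pairs)
print(dpo_jsonl)
```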

OpenAI requires a slightly more complex structure:

input → a dictionary with messages (system + user).

preferred_output → an array of assistant messages.

non_preferred_output → an array of assistant messages.
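A sketch that converts a simple prompt/chosen/rejected record into that nested structure, assuming exactly the field names listed above (`input.messages`, `preferred_output`, `non_preferred_output`). The helper name `to_openai_preference` and the system prompt are hypothetical.

```python
import json

# Hypothetical system prompt shared by all examples
SYSTEM_PROMPT = "You are a friendly support assistant."

def to_openai_preference(record, system_prompt=SYSTEM_PROMPT):
    """Map a {prompt, chosen, rejected} record to the nested preference structure."""
    return {
        "input": {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": record["prompt"]},
            ]
        },
        # Both outputs are arrays of assistant messages
        "preferred_output": [{"role": "assistant", "content": record["chosen"]}],
        "non_preferred_output": [{"role": "assistant", "content": record["rejected"]}],
    }

pair = {
    "prompt": "Can I change my delivery address?",
    "chosen": "Sure! Open your order, tap 'Edit address', and save it before the parcel ships.",
    "rejected": "Address changes are possible before shipment.",
}
# One record serialized as a single JSONL line
print(json.dumps(to_openai_preference(pair)))
```

Keeping your source data in the simpler three-field form and converting at export time lets the same dataset feed both formats.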

Approach 2: Odds Ratio Preference Optimization (ORPO)

ORPO is lighter and cheaper because it doesn’t need a reference model. As a consequence, it’s also less stable, and the model might drift.
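A minimal sketch of the ORPO loss for one preference pair in plain Python, following the formulation in the ORPO paper: an ordinary SFT term on the chosen answer plus a penalty based on the odds ratio between the chosen and rejected answers. No reference model appears anywhere. It assumes you already have length-normalized (average per-token) log-probabilities from the model being trained; the weight `lam` and its value 0.1 are illustrative assumptions.

```python
import math

def log_odds(avg_logp):
    """log(p / (1 - p)) for p = exp(avg_logp); requires avg_logp < 0."""
    if avg_logp >= 0:
        raise ValueError("average log-probability must be negative")
    return avg_logp - math.log1p(-math.exp(avg_logp))

def orpo_loss(chosen_avg_logp, rejected_avg_logp, lam=0.1):
    """ORPO loss for one pair, computed from the trained model's scores only."""
    # SFT part: negative log-likelihood of the chosen answer
    sft_term = -chosen_avg_logp
    # Odds-ratio part: push the odds of "chosen" above the odds of "rejected"
    ratio = log_odds(chosen_avg_logp) - log_odds(rejected_avg_logp)
    # -log(sigmoid(ratio)), in a numerically stable form
    or_term = max(-ratio, 0.0) + math.log1p(math.exp(-abs(ratio)))
    return sft_term + lam * or_term

# Favoring the chosen answer gives a lower loss than favoring the rejected one
print(orpo_loss(-0.5, -2.0) < orpo_loss(-2.0, -0.5))  # True
```

Since SFT and the preference signal are combined in one loss, there is no separate frozen model to compute or store, which is where the savings come from.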

It uses the same format and structure as DPO:

prompt (input),

chosen (preferred) output,

rejected (non-preferred) output.

Just to visualize, an example JSONL file in the Hugging Face format:

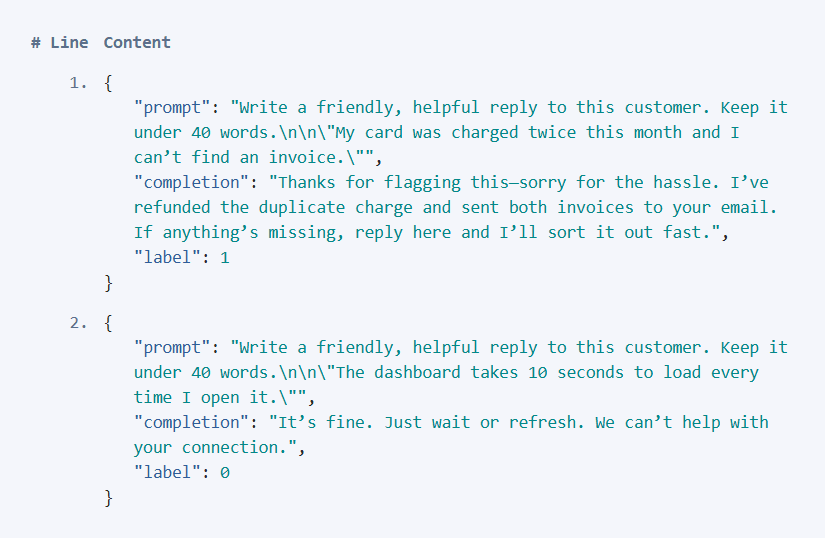

Approach 3: Kahneman-Tversky Optimization (KTO)

KTO is a fine-tuning method inspired by behavioral economics (in particular, loss aversion from Kahneman and Tversky’s prospect theory). It punishes bad outputs more than it rewards good ones.

This method is useful when your feedback signals are noisy or ambiguous (for example, clicks, likes, or upvotes that don’t reflect the true quality you care about).

But there’s a trade-off. Models trained with KTO often converge toward “safe, average answers.” To get real value, you need a wide range of positive examples so the model doesn’t just play it safe.

In the screenshot above:

label: 1 means the response is preferred/positive

label: 0 means not preferred/negative
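Because KTO learns from single examples labeled good or bad rather than from pairs, thumbs-up/down feedback can be turned into training rows directly. A minimal sketch: the feedback log and its field names are hypothetical, and the output uses the `prompt`/`completion`/`label` layout that Hugging Face TRL documents for unpaired preference data (with booleans where the example above uses 1 and 0).

```python
import json

# Hypothetical feedback log: one rating per (prompt, response), no pairs needed
feedback_log = [
    {"prompt": "Summarize my last invoice.",
     "response": "Your last invoice was $42, paid on time.", "thumbs_up": True},
    {"prompt": "Summarize my last invoice.",
     "response": "Invoices are documents listing charges.", "thumbs_up": False},
    {"prompt": "How do I reset my password?",
     "response": "Click 'Forgot password' on the login page.", "thumbs_up": True},
]

def to_kto_row(event):
    """One unpaired example: desirable (True) or undesirable (False)."""
    return {
        "prompt": event["prompt"],
        "completion": event["response"],
        "label": bool(event["thumbs_up"]),
    }

kto_rows = [to_kto_row(e) for e in feedback_log]
positives = sum(row["label"] for row in kto_rows)
# Too few positive rows makes "safe, average" answers more likely
print(f"{positives} desirable / {len(kto_rows) - positives} undesirable")
print("\n".join(json.dumps(row) for row in kto_rows))
```

Counting positives before training is a cheap check against the trade-off described above.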

That might resemble the DPO/ORPO datasets, but depending on the task (classification or regression), the label could also be another integer or a float.

In my work and research, I’ve used other fine-tuning methods and have never tested KTO. It’s still worth knowing so you know where to start if you need it, but the details are beyond the scope of this post.

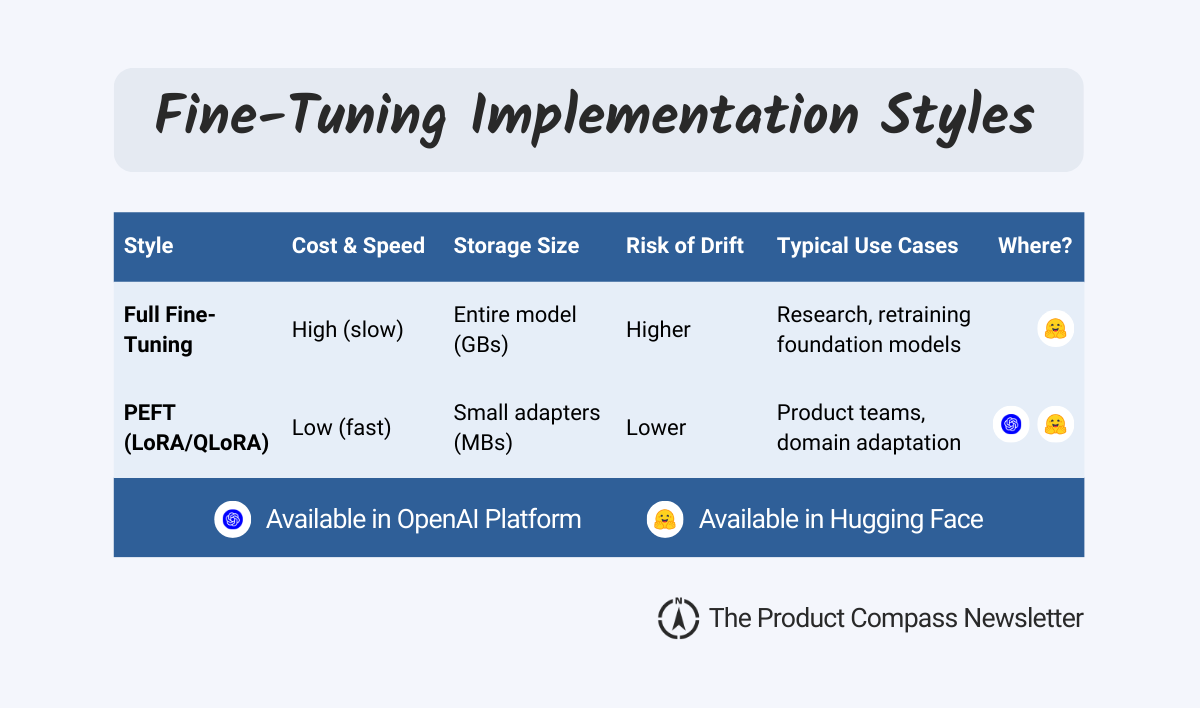

Fine-Tuning Implementation Styles

Regardless of the method (SFT, DPO, ORPO, KTO), there are two main ways to implement fine-tuning:

Way 1: Full Fine-Tuning

Updates all original weights of the model. Very flexible, but slow, expensive, and storage-heavy. It's mostly used in research, not by “standard” product teams.

Way 2: Parameter-Efficient Fine-Tuning (PEFT)

PEFT adds small adapters (LoRA/QLoRA) instead of changing everything. It’s much cheaper, faster, and lighter to store.
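A back-of-the-envelope sketch of why adapters are so much lighter. LoRA keeps the original weight matrix W frozen and trains two small matrices, B (d_out x r) and A (r x d_in), whose product, scaled by alpha / r, is added to W's output. The 4096 x 4096 layer, rank 8, and alpha 16 below are illustrative assumptions, not recommendations; QLoRA applies the same idea on top of a quantized base model and is not shown.

```python
def full_vs_lora_params(d_out, d_in, rank):
    """Trainable parameters for one weight matrix: full update vs LoRA adapter."""
    full = d_out * d_in            # every weight in W is trained
    lora = rank * (d_out + d_in)   # only B (d_out x r) and A (r x d_in) are trained
    return full, lora

def matvec(matrix, vector):
    """Plain-Python matrix-vector product."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x), with W frozen."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]

full, lora = full_vs_lora_params(4096, 4096, rank=8)
print(full, lora, f"{100 * lora / full:.2f}%")  # 16777216 65536 0.39%

# Tiny example: B starts at zero, so the adapted layer initially matches the base layer
W = [[1.0, 2.0], [3.0, 4.0]]
A = [[0.5, -0.5]]   # rank 1: shape (1 x 2)
B = [[0.0], [0.0]]  # shape (2 x 1), zero-initialized as in LoRA
x = [1.0, 1.0]
print(lora_forward(W, A, B, x, alpha=16, rank=1))  # [3.0, 7.0]
```

Only A and B need to be saved per task, which is why one base model can serve many small adapters.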

In practice, PEFT is what most teams use today. It gives you 80-90% of the benefits of full fine-tuning at a fraction of the cost.

Hope that was helpful. Next, for premium subscribers only, we discuss:

🔒Step-by-Step: How to Teach an LLM Request Classification Using SFT

🔒Step-by-Step: How to Make an LLM More Human-Like With DPO

🔒How to Choose the Right Fine-Tuning Approach

4. Step-by-Step: How to Teach an LLM to Do Request Classification Using SFT

Step 1: Prepare a dataset

Request classification seems like an easy task, but due to edge cases, most models reach only 65-75% accuracy, and even with few-shot examples only 80-85%.

At the same time, a fine-tuned model should reliably reach 90-95% accuracy.

Let’s test that together.
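One way to check accuracy numbers like these on your own data is to run the same held-out requests through the base and the fine-tuned model and compare the predicted labels. A minimal, model-agnostic sketch: the gold labels and predictions below are hypothetical stand-ins for real model outputs, and the per-label error count helps locate the edge cases mentioned above.

```python
from collections import Counter

def accuracy(gold, predicted):
    """Share of requests where the predicted label matches the gold label."""
    if len(gold) != len(predicted) or not gold:
        raise ValueError("need two non-empty lists of equal length")
    return sum(g == p for g, p in zip(gold, predicted)) / len(gold)

def errors_by_label(gold, predicted):
    """Count misclassifications per gold label to see where a model struggles."""
    return Counter(g for g, p in zip(gold, predicted) if g != p)

# Hypothetical held-out set and predictions from two models
gold       = ["billing", "technical", "account", "billing",   "technical"]
base_preds = ["billing", "account",   "account", "technical", "technical"]
ft_preds   = ["billing", "technical", "account", "billing",   "account"]

print(f"base: {accuracy(gold, base_preds):.0%}, fine-tuned: {accuracy(gold, ft_preds):.0%}")
# base: 60%, fine-tuned: 80%
print(errors_by_label(gold, base_preds))
```

With a realistic held-out set of a few hundred requests, the same two functions give a fair side-by-side comparison.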
