How to Build an AI Product Team

A practical guide to hiring, structuring, and scaling your AI team - with insights from Bryan Bischof, Head of AI at Theory Ventures

Hey, Paweł here. Welcome to the Product Compass, the #1 actionable AI PM newsletter. Every week I share my research and hands-on guides for PMs.

Building AI products requires a different approach.

As a product leader, you need to understand how to hire, structure, and guide an AI team. This is not only about adding new roles. It’s about rethinking team composition, development processes, and even your own role.

To explore what that looks like in practice, I spoke with Bryan Bischof, Head of AI at Theory Ventures and one of the top voices in the AI engineering space. Previously, he led AI at Hex and was the Head of Data Science at Weights & Biases.

Below you’ll find my notes and opinions, paired with Bryan’s answers to specific questions - so you get both a structured playbook and the experience of someone who has done this several times before.

In this issue, we discuss:

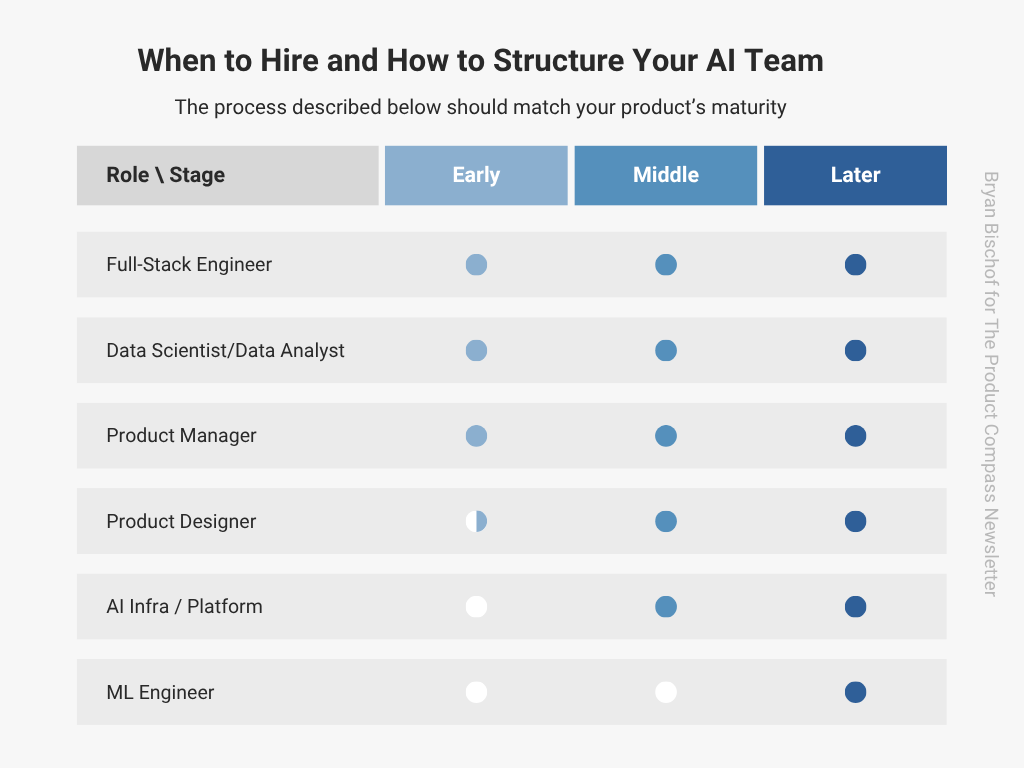

When to Hire and How to Structure Your AI Team

How to Interview AI Team Members

Beyond Hiring: Rethinking AI Development Process

The Secret to Success

1. When to Hire and How to Structure Your AI Team

Principle: start small, then scale. In AI, adding too many people too early slows you down even more than in classic software.

1.1 Stage Definitions and Hiring Gates

Based on Bryan’s experience, what works best is a phased approach:

Early Stage

You are still proving value. Little or no production usage, thin logs, no real eval harness. The goal is to ship a vertical slice and start collecting traces from dogfooding and early users.

At minimum, you need:

A full-stack engineer to integrate with an LLM provider and handle infra and product engineering basics.

A data scientist/analyst to drive early evals and error analysis.

A product manager who focuses on customer value and business viability.

Paweł: In my experience, a good designer helps you with user research, UX, ideation, and experiments. I strongly believe in cross-functional teams working together. For a typical product where AI is just one of the components, I’d introduce a product designer at that stage. Bryan and I agreed that in other cases, you might want to stay lean.

Middle Stage

You have users and repeatable workflows. You can run basic evals and see recurring failure modes. Now reliability, UX, and observability become the bottlenecks.

Two additional roles to consider:

AI infra/platform owner

Product designer if not already on the team. Most companies underestimate how critical this role is.

Later Stage

You hit ceilings with off-the-shelf models on quality, cost, latency, or safety. You have data to justify targeted optimization.

Consider:

ML engineer(s) for fine-tuning or other modeling approaches, retrieval quality, and experiment design.

Keep a small platform group to own shared infra and evals across products or teams.

1.2 Centralized vs Embedded AI Team

Paweł (Question): When should a company move from a centralized AI team to embedded AI inside product teams?

Bryan (Answer): Start centralized to concentrate scarce expertise and build shared infra and evaluators. As product teams adopt a common platform, embed AI into each product team while keeping a lean platform team for tooling, eval suites, and governance. This mirrors how mature data teams evolve from central to hybrid models when domain context becomes the limiter.

2. How to Interview AI Team Members

2.1 Define the Hiring Thesis

For every hire, answer: why this role, why now, why not someone already on the team?

For example:

Paweł (Question): What makes a great 0→1 hire for an AI team?

Bryan (Answer): Look for people who are comfortable in ambiguity. A full-stack engineer who can hack things together across Python and TypeScript is often more valuable early than someone with deep ML theory. Layer specialists later.

2.2 Expect Roles to Blur

In practice:

The product manager reviews traces with the data scientist/analyst and domain experts, and names failure modes.

Product designer experiments with vibe-engineering tools to discover better UX patterns.

Engineers deepen domain expertise to interpret outputs and suggest fixes.

Paweł (Question): And what’s the key trait across those roles?

Bryan (Answer): It’s curiosity. Also, screen hard for: data intuition, product sense, and urgency.

2.3 How to Test for AI Thinking in Interviews

Skip theory trivia. Use three hands-on exercises that show how candidates reason, collaborate, and adapt in ambiguous problem spaces.

Instead of traditional interviews, you can try:

Example 1: Ambiguous dataset deep-dive

Give a small, messy slice from evals or user logs. Ask them to find something useful. Observe how they clean, segment, hypothesize, and whether they tie insights to user or business impact.

Rubric: data literacy, problem framing, dealing with uncertainty, next steps.

Example 2: Trace review and failure-modes

Hand them 10 real traces with mixed outcomes. Ask them to name failure modes and propose evaluators for them. Then ask them to suggest a UX or retrieval change that would reduce how often those failures occur.

Rubric: clear taxonomy, evaluator granularity and alignment with user experience, hypothesis formulation.
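
To make the exercise concrete, here is a minimal sketch of what a candidate's output might look like: a tally of reviewer-assigned failure modes plus one narrow evaluator for a single mode. Everything here is an assumption for illustration: the `Trace` fields, the failure-mode labels, and the `cites_source` check are hypothetical and not taken from Bryan's process.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Trace:
    """One reviewed interaction. All fields are hypothetical."""
    trace_id: str
    answer: str
    retrieved_ids: List[str]
    failure_mode: Optional[str] = None  # reviewer's label; None means the trace looked fine


def tally_failure_modes(traces: List[Trace]) -> List[Tuple[str, int]]:
    """Count reviewer-assigned labels, most frequent first."""
    counts = Counter(t.failure_mode for t in traces if t.failure_mode is not None)
    return counts.most_common()


def cites_source(trace: Trace) -> bool:
    """Narrow evaluator for one failure mode: the answer must mention a retrieved document ID."""
    return any(doc_id in trace.answer for doc_id in trace.retrieved_ids)


# Made-up traces standing in for the 10 real ones used in the interview.
traces = [
    Trace("t1", "See doc-7 for the refund policy.", ["doc-7"]),
    Trace("t2", "Refunds take 5 days.", ["doc-7"], failure_mode="missing_citation"),
    Trace("t3", "I cannot help with that (doc-3).", ["doc-3"], failure_mode="wrong_refusal"),
    Trace("t4", "Refunds are instant.", ["doc-2"], failure_mode="missing_citation"),
]

print(tally_failure_modes(traces))
print([t.trace_id for t in traces if not cites_source(t)])
```

Comparing the evaluator's flags with the reviewer's labels is a quick check on whether the evaluator captures the failure mode it was written for.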

Example 3: Minimal agentic workflow design

Ask them to design the smallest agent or tool-use loop that solves X. Discuss observability, guardrails, and how they would evaluate it.

Rubric: error analysis, choice of tools, orchestration vs. autonomy, human-in-the-loop points, guardrails.
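
For calibration, a "smallest loop" answer could be as simple as the sketch below. It is only one possible shape, written under stated assumptions: `stub_policy` stands in for a real LLM call, `lookup_order` is a hypothetical tool, and the guardrails shown (a tool allow-list, a step cap, and escalation to a human) are illustrative choices, not a recommended standard.

```python
from typing import Callable, Dict, List

# Allow-listed tools: the loop refuses anything not registered here (a guardrail).
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: f"order {order_id}: shipped",  # hypothetical tool
}


def stub_policy(task: str, observations: List[str]) -> dict:
    """Stand-in for an LLM call: decide the next action from what has been observed so far."""
    if not observations:
        return {"type": "tool", "name": "lookup_order", "arg": task}
    return {"type": "final", "answer": observations[-1]}


def run_agent(task: str, policy=stub_policy, max_steps: int = 3) -> dict:
    trace: List[dict] = []         # observability: every step is recorded
    observations: List[str] = []
    for step in range(max_steps):  # guardrail: hard cap on loop iterations
        action = policy(task, observations)
        trace.append({"step": step, "action": action})
        if action["type"] == "final":
            return {"status": "done", "answer": action["answer"], "trace": trace}
        tool = TOOLS.get(action["name"])
        if tool is None:           # unknown tool: hand off to a human
            return {"status": "escalated", "answer": None, "trace": trace}
        observations.append(tool(action["arg"]))
    # Step budget exhausted without a final answer: hand off to a human.
    return {"status": "escalated", "answer": None, "trace": trace}


result = run_agent("A123")
print(result["status"], result["answer"], len(result["trace"]))
```

The returned `trace` is what you would later feed into evals, which gives the interview discussion a natural bridge from design to evaluation.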

2.4 Rubric and Debrief

Your goal in assessment is reproducibility while leaving room for different approaches. It can be helpful to enumerate for each component of the interview:

The minimal set of requirements the candidate must cover.

Further milestones that would show a really strong understanding of the space.

Two things I (Bryan) like to do are:

Provide interviewers with “common missteps”: guesses at directions the candidate may take that are ultimately bad ideas.

Suggest some “alternative approaches” which are very different from the expected solution, but are still reasonable.

The other key opportunity is to do a “feedback interview.” After they’ve done a technical take-home and you’ve reviewed it, schedule an interview where you talk through your feedback on their submission.

This allows you to achieve a few goals simultaneously:

Check your understanding of their approach via questions about their submission.

Check their understanding of their approach – it’s a great way to validate how deeply they understand the choices they made.

Get signal on their ability to take constructive feedback on the aspects you think could be improved.

Provide signal on the collaborative style of the candidate.

3. Beyond Hiring: Rethinking AI Development Process

3.1 Discovery and Delivery Blend

Traditional product discovery results in a validated Product Backlog, which means that the highest risks are addressed before “real” implementation starts.

But in AI, feasibility prototypes aren’t enough. You must build real pipelines to test your assumptions. Discovery and delivery streams merge. You run a significant portion of experiments in production-like environments.

Paweł (Question): How do you approach building the first AI pipeline, what matters most?

Bryan (Answer): Don’t spend months polishing prototypes. Ship a pipeline that gets into users’ hands, even if it’s rough. You’ll learn more in two weeks of live usage than in months of whiteboarding.

3.2 Putting AI Evals at the Center

Evals are not about asking “does my AI system work?” They are about finding which of your assumptions are false.

Use the “failure funnel” to break down your system step by step:

Did it retrieve the correct database tables?

Did it generate syntactically valid SQL?

Did the query execute without errors?

Did the results match the user’s intent?

Did it solve the real problem?

Failures early in the funnel cascade downstream. Fixing them early has the biggest payoff.
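
The funnel above can be sketched as a small script that counts, for each stage, how many traces reached it and how many passed. This is a rough illustration under assumptions: the stage names, the per-trace record format (one boolean per stage), and the sample records are all hypothetical, and a missing check is treated as a failure.

```python
from typing import Dict, List, Optional, Tuple

# Funnel stages in pipeline order, mirroring the checklist above (names are hypothetical).
STAGES = ["retrieved_tables", "valid_sql", "executed", "matched_intent", "solved_problem"]


def first_failure(checks: Dict[str, bool]) -> Optional[str]:
    """Return the earliest failed stage, or None if every stage passed."""
    for stage in STAGES:
        if not checks.get(stage, False):  # a missing check counts as a failure
            return stage
    return None


def funnel(records: List[Dict[str, bool]]) -> List[Tuple[str, int, int]]:
    """For each stage, return (stage, traces that reached it, traces that passed it)."""
    rows = []
    remaining = records
    for stage in STAGES:
        passed = [r for r in remaining if r.get(stage, False)]
        rows.append((stage, len(remaining), len(passed)))
        remaining = passed  # only survivors reach the next stage
    return rows


# Made-up eval results for five traces.
records = [
    {s: True for s in STAGES},
    {"retrieved_tables": False},
    {"retrieved_tables": True, "valid_sql": True, "executed": True, "matched_intent": False},
    {"retrieved_tables": False},
    {"retrieved_tables": True, "valid_sql": False},
]

for stage, reached, passed in funnel(records):
    print(f"{stage:18} {passed}/{reached}")
```

Reporting each stage against the traces that actually reached it keeps a late stage from looking bad just because an earlier one failed, which is the point of measuring every link separately.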

Paweł (Question): Can you share a time when an eval surprised you and changed direction?

Bryan (Answer): One eval showed us our retrieval was failing 40% of the time before we even got to generation. Fixing that single step had more impact than weeks of prompt tuning. It taught me to measure every link in the chain.

Side note: Bryan has partnered with Hamel Husain and Shreya Shankar in their latest course on AI Evals.

P.S. Paweł: OpenAI has just invited Hamel to co-author the AI Evaluation Flywheel Cookbook. I have no doubt this course is the best source of information on AI evals worldwide.

4. The Secret to Success

No matter how skilled your team is, success depends on domain experts. If you build a customer support bot, your support team must use it daily. If you build a data science copilot, your data scientists must collaborate daily.

Paweł (Question): What advice do you give founders who want to bring domain experts into the loop?

Bryan (Answer): Make them first-class citizens. Don’t treat them as testers you bring in at the end. Their feedback should shape what you build from day one.

Thanks for Reading The Product Compass

It’s amazing to learn and grow together.

Make sure you follow Bryan on LinkedIn and X (Twitter).

Have a great rest of the week,

Paweł