Gemini File Search API Explained: A Practical Handbook for PMs

What Google’s “RAG-as-a-Service” really gives you, what it hides, and how to ship document-aware AI prototypes in hours. No coding.

Google just dropped the File Search tool in the Gemini API.

It’s RAG-as-a-Service: it drastically speeds up building RAG systems, which makes it particularly useful for PMs who need to prototype and build solutions quickly.

But when I tested it with coding agents like Lovable and Claude Code, they struggled to understand Google’s examples. That’s a common issue with coding agents: they get confused when implementing a new API if similar, older ones already exist.

And that’s the real story here:

Gemini File Search is powerful, but it’s a black box. You get speed, but you give up control — no custom embeddings or retrieval strategy.

You also hit hard limits like “5 stores per query,” and the API leaves several key decisions to you that aren’t well documented.

That’s why I wrote this post.

Instead of piecing everything together, you get one practical handbook that helps you build a working RAG prototype in a few prompts.

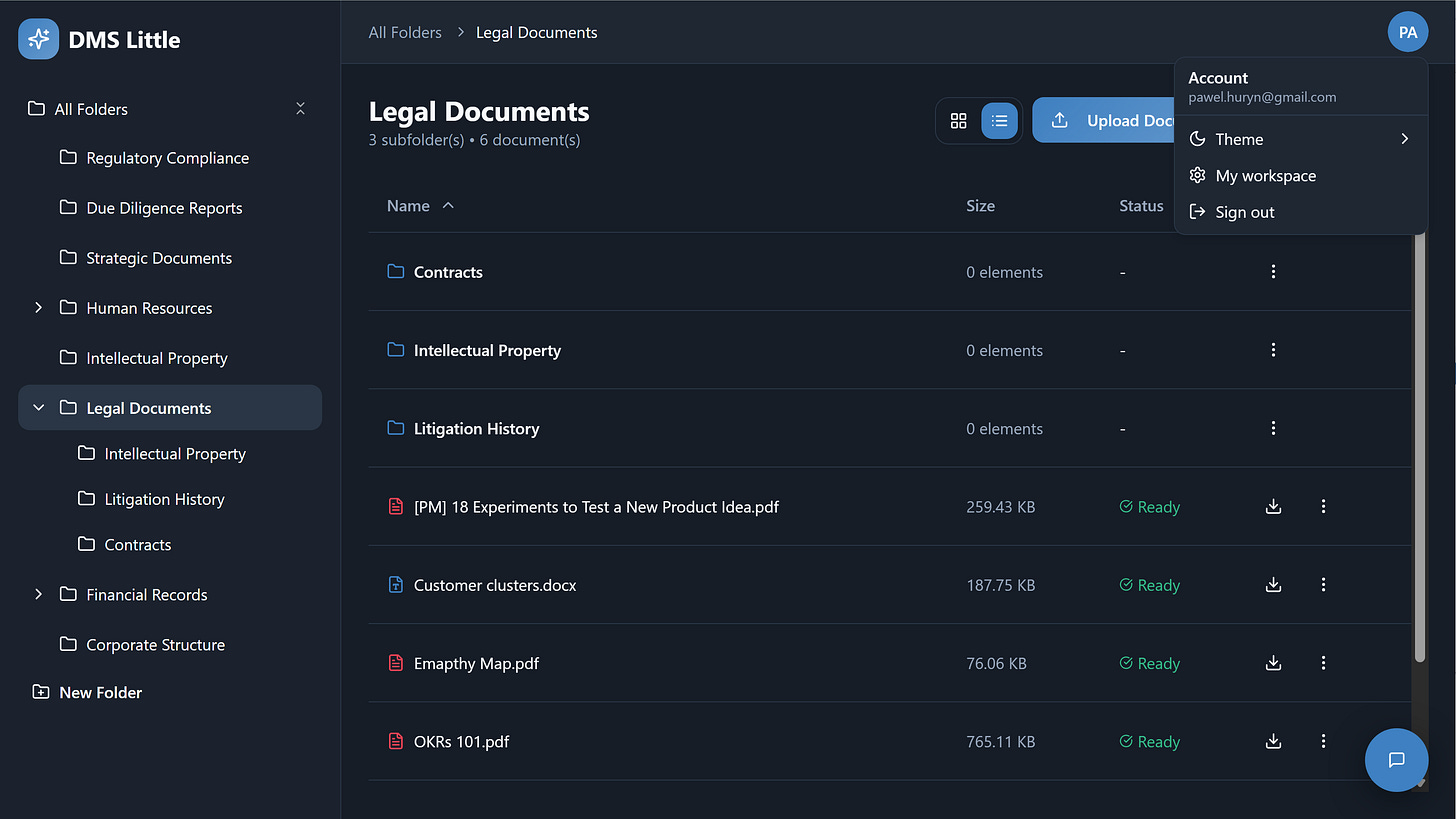

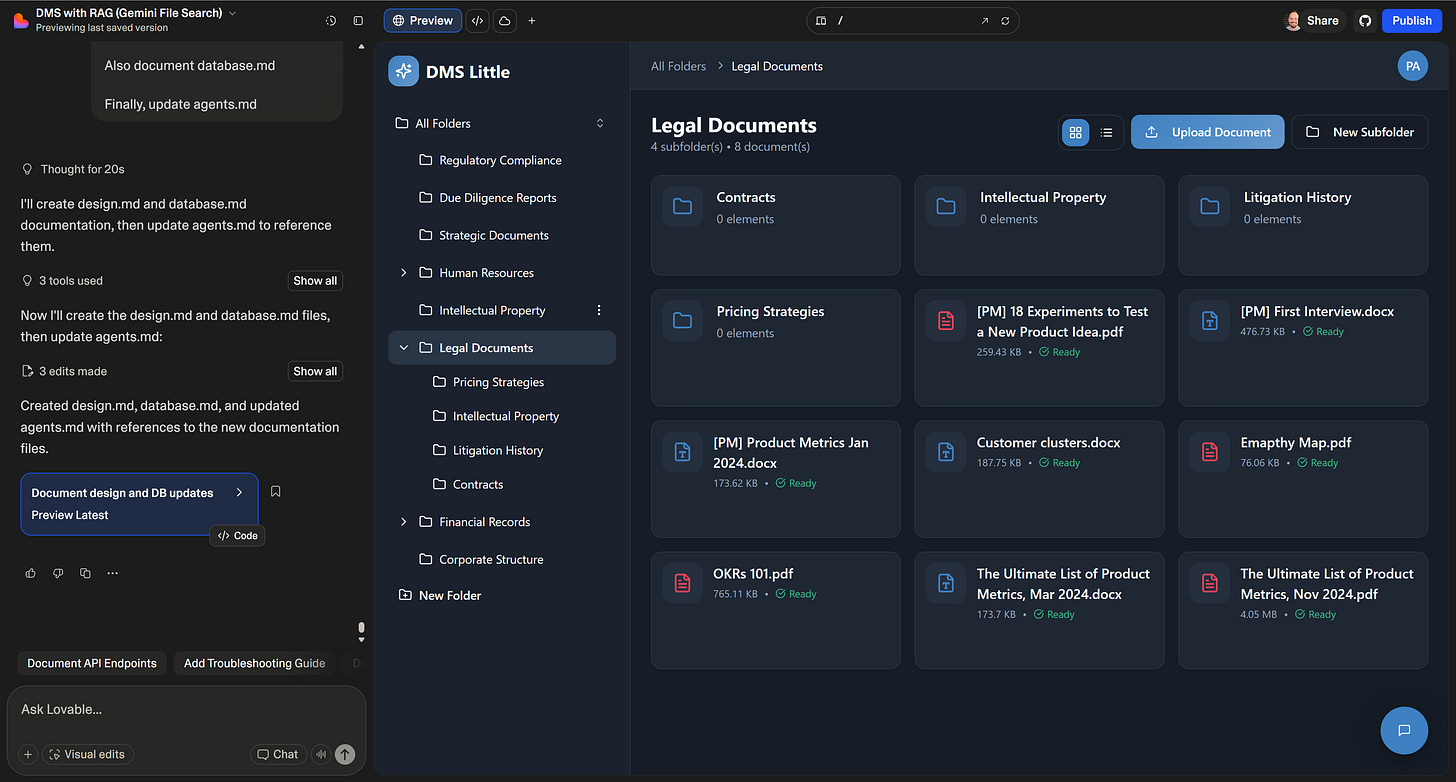

Here’s a fully working example I built this week with Lovable:

In this issue, we discuss:

Why PMs Should Consider Gemini File Search

Example: Little DMS (Document Management System)

Gemini File Search vs Traditional RAG

How to Easily Implement Gemini File Search in Your Product (+Public Handbook)

🔒 A Ready-to-Use Little DMS Template (Not Only For Lovable)

🔒 Conclusion

If you want to ship document-aware AI prototypes, products and agents faster, this is for you.

Let’s dive in.

Hey, Paweł here. Welcome to the Product Compass, the most actionable AI PM newsletter written by a product and AI practitioner.

Every week I share hands-on advice and step-by-step guides for AI PMs.

Here’s what you might have missed:

Introduction to AI Product Management: Neural Networks, Transformers, and LLMs

AI Agent Architectures: The Ultimate Guide With n8n Examples


1. Why PMs Should Consider Gemini File Search

Think of the Gemini File Search API as a managed vector search engine for your product.

You upload files and you get:

Semantic search over your content.

Grounded answers with citations.

Support for PDF, DOCX, TXT, JSON, and many common text file types.

File lifecycle operations: upload, import into a store, delete.

The main benefits for you as a PM/AI PM:

Speed: you can test ideas in hours.

Focus: you spend time on UX, flows, and use cases instead of infra.

Cost: storage and query-time embeddings are free; indexing is very cheap ($0.15 per 1 million tokens).
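To make the cost point concrete, here is a quick back-of-the-envelope calculation. The price is the one quoted above; the corpus size (2,000 documents at roughly 5,000 tokens each) is a made-up example, so plug in your own numbers.

```python
# Indexing price quoted in this post; storage and query-time embeddings are free.
INDEXING_USD_PER_MILLION_TOKENS = 0.15


def indexing_cost_usd(total_tokens: int) -> float:
    """One-time cost of indexing `total_tokens` tokens."""
    return total_tokens / 1_000_000 * INDEXING_USD_PER_MILLION_TOKENS


if __name__ == "__main__":
    # Hypothetical corpus: 2,000 documents averaging 5,000 tokens each.
    documents, avg_tokens_per_document = 2_000, 5_000
    total = documents * avg_tokens_per_document  # 10 million tokens
    print(f"Indexing {total:,} tokens costs about ${indexing_cost_usd(total):.2f}")
```

At that size the one-time indexing bill is about $1.50, which is why cost rarely blocks a prototype.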

This is perfect for:

Prototyping and testing ideas fast, without getting stuck on technical aspects.

Building agents that need fast access to your docs.

But it comes with serious tradeoffs you should understand upfront:

Limited document chunking strategies.

You cannot pick or tune embedding models.

You cannot change how retrieval is ranked.

You cannot inspect embeddings or scores.

You’re opting into Google’s choices for chunking, embeddings, and retrieval. That’s fine for many use cases and prototypes, but it’s a real constraint.

Side note: What about our previous RAG Chatbot?

A while ago, I showed you how to build a RAG chatbot without coding, using n8n, Pinecone, and Lovable.

This article is still important. It demonstrates how RAG actually works and helps you develop AI intuition. As an AI PM, you should understand the available chunking, embedding, retrieval, and generation techniques.

2. Example: Little DMS (Document Management System)

To really understand how Gemini File Search behaves in a real app, I built a small DMS on top of it: Little DMS.

The motivation was simple:

There’s a lot of hype.

Coding agents failed to implement Google’s examples.

Gemini File Search is powerful, but you still need to make key decisions and design a system around it.

So I decided to tackle all the issues at once, learn from them, and turn the result into something repeatable.

Short demo (no sound: log in, switch views, upload a document, and use the RAG chatbot):

Later in this post, I share:

A public Gemini File Search Integration Handbook for everyone.

My Little DMS template + documentation, so you can make it your own or clone specific aspects.

3. Gemini File Search vs Traditional RAG

Here is the key mental model:

Traditional RAG: you control everything — chunking, embeddings, ranking, vector DBs, permissions.

Gemini File Search: you outsource the search engine and chat, but you still own the database, metadata, permissions, and UX.

What Gemini File Search actually gives you

Automatic chunking,

Automatic embeddings,

Managed vector storage and indexing,

Semantic search + grounded answers with citations.

No other tools, such as n8n or Pinecone, are required.

What you still have to design

Your document database,

Folder structure + metadata,

Upload flow + status tracking,

Permissions and org structure,

UX for browsing files, search, and chat.

Hard limits

Limited chunking strategies

No control over embedding models or retrieval strategies

No retrieval-only endpoint (only the “chat experience”)

Limited debug visibility

If you need full control over retrieval quality, classic RAG is still the better option (like the n8n + Pinecone + Lovable setup I shared earlier).

The deeper tradeoffs and architecture options are in the integration handbook linked below.

4. How to Easily Implement Gemini File Search in Your Product (+Public Handbook)

At a high level, you and your team only need to work through two phases:

Phase 1: Data + storage decisions

Add a documents table

One place where you store: file name, folder, owner, organization, file type, status, and the Gemini IDs you get back from File Search.
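A minimal sketch of such a documents table, using SQLite so it runs anywhere. The column names and the set of status values are my assumptions for illustration (only "ready" appears in this post); adapt them to your own database.

```python
import sqlite3

# Column names and status values are illustrative assumptions, not a required schema.
SCHEMA = """
CREATE TABLE IF NOT EXISTS documents (
    id                   INTEGER PRIMARY KEY,
    file_name            TEXT NOT NULL,
    folder               TEXT NOT NULL DEFAULT '/',
    owner_id             TEXT NOT NULL,
    organization_id      TEXT NOT NULL,
    file_type            TEXT NOT NULL,
    status               TEXT NOT NULL DEFAULT 'uploading'
                         CHECK (status IN ('uploading', 'indexing', 'ready', 'failed')),
    gemini_store_name    TEXT,        -- store the file was imported into
    gemini_document_name TEXT UNIQUE  -- internal name Gemini returns for the file
);
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the documents table exists."""
    conn = sqlite3.connect(path)
    conn.row_factory = sqlite3.Row  # lets us read columns by name
    conn.execute(SCHEMA)
    return conn


def add_document(conn, file_name, folder, owner_id, organization_id, file_type) -> int:
    """Insert a new row in the default 'uploading' state and return its id."""
    cur = conn.execute(
        "INSERT INTO documents (file_name, folder, owner_id, organization_id, file_type) "
        "VALUES (?, ?, ?, ?, ?)",
        (file_name, folder, owner_id, organization_id, file_type),
    )
    conn.commit()
    return cur.lastrowid


if __name__ == "__main__":
    conn = open_db()
    doc_id = add_document(conn, "contract.pdf", "/legal", "user-1", "org-1", "pdf")
    row = conn.execute("SELECT * FROM documents WHERE id = ?", (doc_id,)).fetchone()
    print(row["file_name"], row["status"])
```

The two Gemini columns stay empty until the import finishes, which is what lets the UI distinguish "uploaded" from "searchable".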

Pick a store strategy

For prototypes: one global store is enough.

For real products: one store per organization works best by default.

(The pros, cons, and why “store per folder” is a trap are in the handbook.)
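The two store strategies can be expressed as one small lookup. This is a sketch under assumptions: `StoreRegistry` is a hypothetical helper, and `create_store` is an injected stand-in for whatever call actually creates a File Search store in your backend. No Gemini SDK call is made here.

```python
from typing import Callable, Dict


class StoreRegistry:
    """Maps an organization to the File Search store its documents live in."""

    def __init__(self, create_store: Callable[[str], str], single_global_store: bool = False):
        # create_store(key) must create a store and return its name/ID.
        self._create_store = create_store
        self._single_global_store = single_global_store
        self._stores: Dict[str, str] = {}

    def store_for(self, organization_id: str) -> str:
        # Prototype mode: everyone shares one store. Product mode: one per organization.
        key = "global" if self._single_global_store else organization_id
        if key not in self._stores:
            self._stores[key] = self._create_store(key)  # created lazily, once per key
        return self._stores[key]


if __name__ == "__main__":
    def fake_create_store(key: str) -> str:
        # Stand-in for the real store-creation call.
        return f"fake-store-{key}"

    per_org = StoreRegistry(fake_create_store)
    print(per_org.store_for("acme"), per_org.store_for("globex"))

    prototype = StoreRegistry(fake_create_store, single_global_store=True)
    print(prototype.store_for("acme"), prototype.store_for("globex"))
```

In a real app you would persist the mapping (for example, in an organizations table) instead of keeping it in memory.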

Phase 2: Build simple flows

Build a simple upload flow

User uploads a file.

You store it and send it to Gemini.

You save the returned IDs and mark the file as “ready” in your UI.
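The three steps above can be sketched as a small status machine. `DocumentRecord`, the status names other than "ready", and the `send_to_gemini` callable are hypothetical; the callable stands in for your actual upload/import call and is expected to return the ID Gemini gives back.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class DocumentRecord:
    file_name: str
    status: str = "uploading"  # uploading -> indexing -> ready | failed
    gemini_document_name: Optional[str] = None
    error: Optional[str] = None


def process_upload(
    record: DocumentRecord,
    data: bytes,
    send_to_gemini: Callable[[str, bytes], str],
) -> DocumentRecord:
    """Send the file to Gemini and record the outcome on the document row."""
    record.status = "indexing"
    try:
        # Stand-in for the real call; must return Gemini's internal document name.
        record.gemini_document_name = send_to_gemini(record.file_name, data)
    except Exception as exc:  # keep the failure visible in the UI instead of crashing
        record.status = "failed"
        record.error = str(exc)
    else:
        record.status = "ready"
    return record


if __name__ == "__main__":
    def fake_send(file_name: str, data: bytes) -> str:
        return f"fake-doc-{file_name}"

    print(process_upload(DocumentRecord("handbook.pdf"), b"%PDF...", fake_send))
```

Tracking "failed" explicitly matters in practice: users need to know when a file is stored but not searchable.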

Map citations back to real file names

Gemini returns internal document names in citations. Your backend looks them up in the documents table and replaces them with human-readable names and links.

Alternatively, you can attach the human-readable name as metadata at import time, but keep in mind that document names can change, so that copy can go stale.
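A minimal sketch of the lookup approach. It assumes you have already pulled the internal document names out of the response's citations (the exact response shape is not shown here) and loaded your documents table into a dict keyed by that internal name. The `/documents/<id>` link format is hypothetical.

```python
from typing import Dict, List, Optional


def resolve_citations(
    cited_names: List[str],
    documents_by_gemini_name: Dict[str, dict],
) -> List[Dict[str, Optional[str]]]:
    """Replace Gemini's internal document names with display names and links."""
    resolved = []
    seen = set()
    for name in cited_names:
        if name in seen:  # the same document is often cited more than once
            continue
        seen.add(name)
        doc = documents_by_gemini_name.get(name)
        if doc is None:
            # The row was deleted or never saved; degrade gracefully.
            resolved.append({"title": "Unknown document", "url": None})
        else:
            resolved.append({"title": doc["file_name"], "url": f"/documents/{doc['id']}"})
    return resolved


if __name__ == "__main__":
    table = {"fake-doc-1": {"id": 7, "file_name": "Q3 Contract.pdf"}}
    print(resolve_citations(["fake-doc-1", "fake-doc-1", "fake-doc-404"], table))
```

Because the lookup happens at answer time, renaming a file in your app is reflected in citations immediately.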

Attach metadata and enforce permissions

When you import a new document, attach metadata like folder, org, user groups, and owner. When querying, enforce your permission checks so users only see what they’re allowed to see.
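Here is one way this could look. Treat the formats as assumptions to verify against Google’s docs: the key/`string_value` metadata entries and the `key="value"` filter expressions joined with AND/OR are modeled on my reading of the File Search documentation and Google’s AIP-160 filter style. The function names and metadata keys are hypothetical.

```python
from typing import Dict, List


def build_import_metadata(doc: Dict[str, str]) -> List[Dict[str, str]]:
    """Metadata to attach when importing a document (entry format is an assumption)."""
    keys = ("organization_id", "folder", "owner_id")
    return [{"key": key, "string_value": doc[key]} for key in keys]


def _quote(value: str) -> str:
    # Refuse characters that could break out of the quoted filter value.
    if '"' in value or "\\" in value:
        raise ValueError(f"unsupported character in filter value: {value!r}")
    return f'"{value}"'


def build_query_filter(organization_id: str, allowed_folders: List[str]) -> str:
    """Build a filter from what YOUR permission system says the user may read."""
    if not allowed_folders:
        raise PermissionError("user has no readable folders")
    folders = " OR ".join(f"folder={_quote(folder)}" for folder in allowed_folders)
    return f"organization_id={_quote(organization_id)} AND ({folders})"


if __name__ == "__main__":
    doc = {"organization_id": "org-1", "folder": "/legal", "owner_id": "user-1"}
    print(build_import_metadata(doc))
    print(build_query_filter("org-1", ["/legal", "/hr"]))
```

The important design choice is that the filter is always built server-side from your own permission data, never from anything the client sends.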

All the technical details, options, and edge cases are described in a separate Gemini File Search Integration Handbook (.md):

What Gemini provides,

What you must build,

Key architecture decisions,

Implementation patterns,

Best practices,

Common mistakes,

Limitations.

You can drop it into your agent and let it guide the implementation step by step.

For prototyping: don’t overthink it

If you’re just testing an idea, you don’t need a 2-hour meeting.

Tell your agent (adjust as needed):

Build a simple RAG chatbot that uses Gemini File Search.

Treat this as a single-tenant internal prototype. Use one store for everything, a simple documents table, and basic metadata. Don’t show me all possible options. Pick the simplest recommended path and explain it briefly.

Implement it in 4 phases:

Phase 1: Build a simple UI with two tabs: Documents (flat list, upload button), Chat.

Phase 2: [First tab] File upload into a single global store, file list, no folders.

Phase 3: [Second tab] Implement document chat without citations.

Phase 4: [Second tab] Implement document chat with citations.

Important: Use the attached .md file to understand Gemini File Search API and available choices and add it to the code base.

Common mistake: Ignoring the attached .md file.

If the agent makes a mistake, double down on the handbook. In Lovable, use the Chat mode so that the agent plans its actions before making changes, e.g.:

What’s the reason for errors?

I’m 100% sure the handbook is correct.

Make sure our implementation follows the handbook.

That’s how I built a simple prototype (18 sec demo):

The entire process took 31 min 20 sec, including adding a few extra features. A full recording, no cuts:

Start simple. That’s often enough to test an idea. You can improve the solution later.

5. A Ready-to-Use Little DMS Template (Not Only For Lovable)

To save you even more time, I wrapped what I learned into a Little DMS template.

You can use it to test complex ideas or build simple document chat-based apps.

What you get

The template includes:

A basic DMS architecture wired to Gemini File Search.

A persistent database for documents and mappings.

Folder browsing and document lists (list and tiles views).

Chat over your documents with citations mapped to real file names.

Metadata-based filtering by folder.

All you need to do is:

Clone the template.

Provide your Gemini API Key.

Cover nominal hosting costs in Lovable Cloud. No separate Supabase account required.

You can also export it to GitHub and open it with Claude Code or Replit.

Little DMS Documentation

Documentation you can read or give to your agent:

agents.md: Describes the entire system and the content of specific documents.

architecture.md: System design and component interaction documentation (data model, storage, permissions, components, citation flow).

database.md: Complete database schema documentation for the PostgreSQL/Supabase backend.

design.md: Design system and theming guide.

gemini_integration_handbook.md: Covers the Gemini integration specifics.

user_flows.md: Step-by-step documentation of all user-facing workflows.

search.md: Deep dive into search execution and citation handling.

Get the Little DMS template below: 👇

How you can extend it

Once you clone the template, you can extend it.

Based on my experience as a Virtual Data Room PM, I explained possible directions in feature_ideas.md:

Group permissions and multi-org support,

File previews and PDF conversion,

Secure viewing without downloads,

Event reporting and analytics,

Context menus and folder uploads,

Google Drive import integration,

Search-only mode for simpler UX.

