Will We Lose Our Jobs to AI? Cutting Through the Hype

TLDR: We will not. But we need to adapt.

Hey, Paweł here. Welcome to the free edition of The Product Compass.

Each week, I share actionable tips and resources for product managers.

Here are a few posts you might have missed:

Assumption Prioritization Canvas: How to Identify And Test The Right Assumptions

Kano Model: How to Delight Your Customers Without Becoming a Feature Factory

You can also explore 85+ best posts in the archive.


Since ChatGPT-3.5 was introduced, it feels like we’ve been drowning in “breaking AI news” every week.

For a while, I couldn’t tell whether it was just hype or whether we were making fast progress toward AGI (Artificial General Intelligence) that could change everything, maybe even replace us.

If you’ve been following AI news, you’ve probably heard many leaders claim that AGI is just around the corner. But is it really?

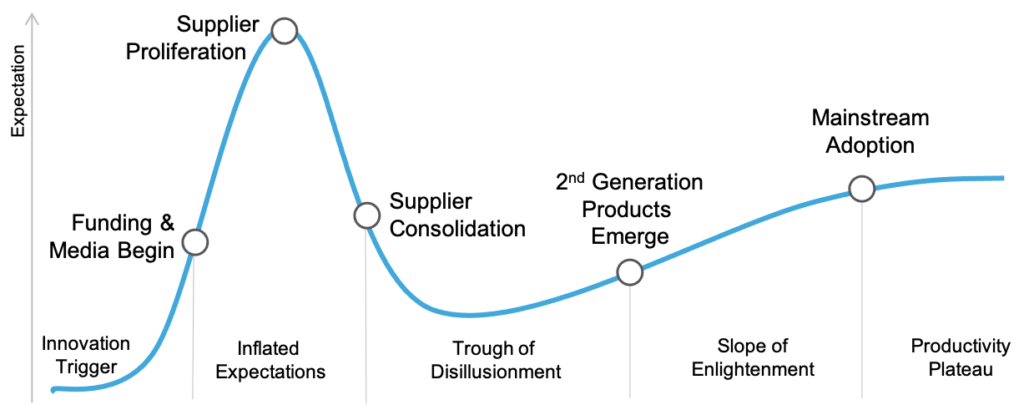

As Product Managers, we’re familiar with the classic Gartner Hype Cycle, which suggests that every new technology goes through five phases:

Innovation Trigger: A new technology is introduced.

Peak of Inflated Expectations: Early successes generate excitement and high expectations.

Trough of Disillusionment: Reality sets in as challenges and failures emerge.

Slope of Enlightenment: Understanding the technology's true potential begins to develop.

Plateau of Productivity: The technology reaches mainstream adoption with realistic expectations.

On the other hand, if AGI existed, its impact could be far more profound than that of a typical new technology. According to a popular view, we could witness an intelligence explosion once AI research can be delegated to AI itself.

Over the last few months, one thing has become clear to me: Big tech companies fuel AI hype by lying to the public.

At the same time, many of the changes are real and we need to adapt.

Today’s newsletter is a bit different from the others. We’ll discuss:

The Artificial Hype

A Sober Look

How to Adapt to AI

Conclusions

1. The Artificial Hype

Let’s explore a few examples to highlight what AI is capable of, how the public is being deceived, and how accurate previous AI predictions have been.

Tesla (Jan 2016)

In January 2016, during a press conference, Elon Musk declared that Tesla's cars would outperform human drivers within two to three years. He also claimed that within two years, you'd be able to summon a Tesla remotely from across the country.

Eight years later, we’re still waiting for that to happen. How many people were deceived into buying cars based on those promises?

OpenAI, GPT-4 (January 2023)

Do you remember images like the one below circulating everywhere at the beginning of 2023, with comments like “If GPT-3 impressed you, wait for GPT-4”?

The reality? Here's a comparison of GPT-3.5 and GPT-4 from June 2023:

According to the LMSYS Chatbot Arena leaderboard, these models currently score as follows:

GPT-3.5 Turbo: 1,117.

GPT-4o (latest version): 1,317.

Yet, Sam Altman keeps persuading startups that:

“There are two strategies to build on AI right now (…) Build assuming OpenAI will stay on the same trajectory” - Sam Altman, CEO OpenAI

Startups keep spending money on that assumption. But the promised trajectory is looking flat, so what many of them are building might not even be feasible.

Google AI (May 2023)

In May 2023, Sundar Pichai emphasized that Google was focusing on AI that year. His talk quickly became a meme:

Google Gemini Demo (January 2024)

After what Pichai referred to as “focusing on AI,” Google presented the capabilities of their multimodal AI in January 2024:

The top comment on YouTube:

“Absolutely mindblowing. The amount of understanding the model exhibits here is way way beyond anything else.” - dpsdps01

The problem?

Google's video was deceptive. Although it was called "Hands-on with Gemini," the examples, images, and responses were staged, misleading us to believe the model was much more advanced than it was.

You can read more about this on TechCrunch.

AI Engineers (2024)

In February 2024, during the World Government Summit, NVIDIA's founder, Jensen Huang, declared that AI would replace software engineers and that:

“It is our job to create computing technology such that nobody has to program (…) Everyone in the world is now a programmer. This is the miracle.” - Jensen Huang, CEO NVIDIA

One month later, Cognition AI introduced Devin, which it billed as “the first AI software engineer.”

It quickly became evident that this instance of the “miracle” was nothing but a lie.

In the video below, Carl from the Internet of Bugs breaks down the Devin Upwork video frame by frame and explains what Devin was supposed to do, what it actually managed to do instead, and how bad a job it did:

But the manipulation succeeded. Cognition AI was recently valued at $2 billion, which allowed it to raise $175 million just one month after its Series A.

AI Today (August 2024)

Big tech companies continue to claim that AI models regularly make drastic improvements in their capabilities.

Just a few days ago, in a leaked recording, Amazon's cloud chief told employees that most developers could stop coding as soon as AI takes over:

“If you go forward 24 months from now, or some amount of time — I can’t exactly predict where it is — it’s possible that most developers are not coding” - Matt Garman, AWS CEO

Those who follow the Internet of Bugs understand that’s nonsense. We are not even close to fully autonomous coding.

Do leaks like this really happen by accident? I have doubts.

To demonstrate what AI models are truly capable of after more than 20 months of “radical improvements” (ChatGPT-3.5 was launched in November 2022), I asked ChatGPT-4o to perform a simple task:

Paweł: Generate a picture of a product manager holding one chicken in the left hand and two chocolate bars in the right hand.

ChatGPT-4o: Here is the image of a product manager (…)

Paweł: Are you sure that's the left and right hand?

ChatGPT-4o: It appears that the orientation might be reversed in the image, making what should be the left hand appear as the right, and vice versa. Here is the corrected image with the chicken in the actual left hand and the chocolate bars in the actual right hand (…)

Does anyone think this is close to AGI?

2. A Sober Look

So, where do we stand today? While I don’t know everything, a few things have become clear.

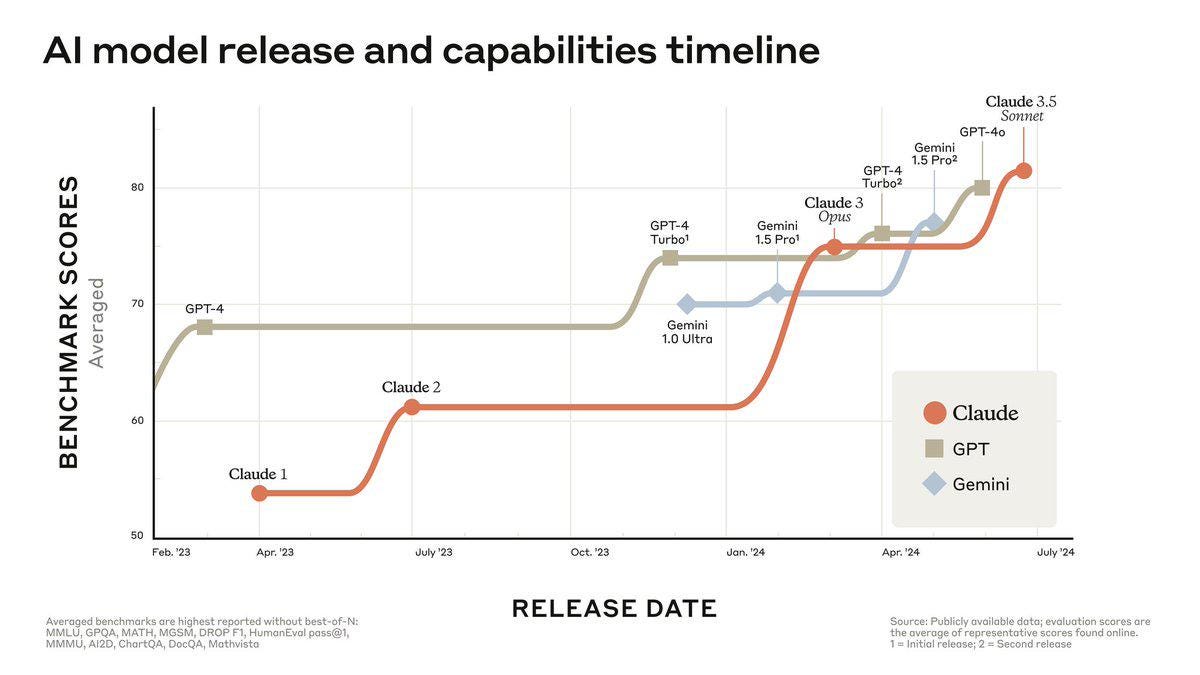

Progress is slowing

Despite vast resources being poured into training AI models and improving algorithms, at a rate far surpassing Moore's Law, progress is stagnating.

A recent diagram from Anthropic illustrates this flat trajectory:

Big tech has no choice but to invest in the “next big thing”

Tech giants are under pressure from shareholders to boost revenue and keep up with the hype, so they have no choice but to chase the "next big thing."

For example, in Q2, Alphabet (Google) invested $12 billion in building AI, with another $100 billion projected soon.

With quarterly revenue exceeding $80 billion and net income over $20 billion, they can afford this. The cost of being disrupted would be far greater.

Similarly, in Q2, Microsoft invested $19 billion in cash capital expenditures and equipment, mostly related to AI.

Many AI companies are struggling to turn a profit

Recent information suggests that OpenAI could lose $5 billion this year alone. Also, Microsoft has disappointed investors with its recent revenue metrics.

Meanwhile, open-source AI models like Meta's Llama 3 (70B) score just as well as top performers across multiple benchmarks. They're shaking up the market:

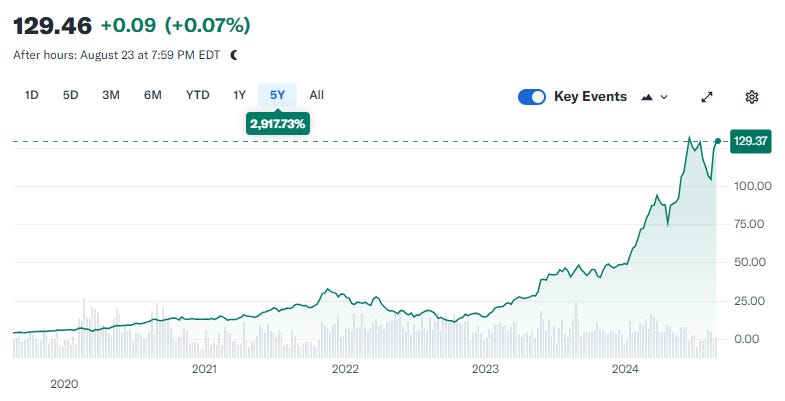

Only NVIDIA, a leader in AI training hardware, is thriving. It even briefly became the world’s most valuable company. Currently, with a $3.18 trillion market cap, it’s #2 after Apple ($3.44 trillion):

Even more resources are being poured into training AI models

In July 2024, Elon Musk announced that his xAI team, in partnership with X and NVIDIA, had launched a supercomputer cluster with 100,000 liquid-cooled H100 GPUs. He claimed that it would allow xAI to create the world's most powerful AI by the end of this year.

But we already understand that simply increasing the size of AI models doesn't necessarily lead to significant improvements in their performance.

Additionally, larger models involve higher computational and operating costs, and monetizing the existing ones is already tough.

Almost every company now has an AI strategy

Nearly every company glorifies its AI strategy, often implementing features that aren't truly feasible.

For example, for content creators, it's evident that large language models (LLMs) still can't produce quality writing. Yet, this hasn't stopped companies like LinkedIn, which has integrated AI-generated articles into its platform:

Too often, implementing these AI features happens at the expense of addressing real customer needs.

3. How to Adapt to AI

Despite the hype, I believe AI can drastically improve our performance. I presented many examples of how to use it in Top 9 High-ROI ChatGPT Use Cases for Product Managers.

But there’s a catch. AI models need human judgment to distinguish junk from valuable output. The model is only as good as the person using it.

This means you can’t hand over tasks to an AI if you don’t fully understand how to do them correctly. For example, ChatGPT-4o might help you:

Write User Stories, but it won’t help if you don’t understand how and why User Stories are created, along with their pros and cons.

Identify assumptions and suggest experiments to test them, but it won’t help if you don’t know how product discovery works, how to prioritize assumptions, or how to manage risk.

Create a product strategy, but it won’t help if you don’t know what a good one looks like, how to perform market research, or what methods and tools are available.

I’m confident AI won’t replace senior roles like Product Managers and Software Engineers anytime soon.

At the same time, if you do a junior job, like handling feature requests or implementing CRUD operations based on detailed specifications, you might have a reason to worry.

So here’s my advice:

Use LLMs daily to boost your productivity: Experiment with prompts to understand what these models can do and how to include them in your workflow.

Educate yourself in AI: You should understand concepts like fine-tuning and AI agents, but there’s no need to obsess over them. YouTube videos are perfectly fine unless you want to tie your career more closely to AI.

Get interested in the business side of the product: How do your organization’s Sales, Success, and Support teams work? How exactly does your company make money? How do you acquire customers? What are the key acquisition, retention, and revenue metrics? How do these metrics differ depending on the customer segment? How have they changed over time? Who are your competitors? What’s unique about your strategy?

Upskill in Product Management: Managing a Product Backlog is not enough. Start with Product Discovery (a key area for any Product Manager) and build your understanding of Data Analytics, Product Growth, Product Strategy, and other related areas. This will help you make better decisions, equip you with a set of “thinking tools,” and enable you to collaborate more effectively with others in the organization.

Here are a few resources to start:

4. Conclusions

The AI hype is fueled by big tech companies. They repeatedly lie to the public about what AI is or might soon be capable of.

Despite investing more money and resources, progress is slowing down, and companies struggle to monetize AI.

While AI can significantly boost your productivity, it’s crucial to remember that AI models still require human judgment to separate junk from valuable output. The model is only as good as the person using it.

That’s why I’m confident AI won’t replace senior roles like Product Managers and Software Engineers anytime soon. Continuing to upskill and grow in your role is the best way to future-proof your career.

What are your thoughts?

Thanks for reading The Product Compass!

It’s fantastic to learn and grow together.

Have a wonderful, productive week ahead,

Paweł
