AI Product Manager Glossary

80+ key terms across 12 categories critical for AI Product Managers, AI engineers, and AI builders.

A special AI PM glossary, free for all subscribers. Print it and hang it on the wall.

Last updated on 11/3/2025.

1. AI Product Management

AI Literacy & AI Intuition

The ability to understand foundational AI concepts (e.g., LLMs, AI Agents, MCP), perform error analysis, and iteratively refine prompts or models based on hands-on experimentation. I recommend developing it by working with AI, not studying theory.

AI Product Manager

A PM who works on AI-powered products and features. Unlike traditional PMs, AI PMs must understand the technology and develop an AI intuition.

AI-Powered Product Manager

A PM who uses AI in their daily work. I have a strong opinion: soon, there will be no such thing as a PM who doesn’t use AI virtually every day. We should be living and breathing AI.

Product Manager

The team member especially responsible for the two risk areas:

Customer value: Do customers want this product or feature? Can we actually deliver on the value promised?

Business viability: Will it work for different parts of the business?

The most important activity for a PM is participating in Product Discovery. Its goal is to address key risks before implementation, to avoid wasting time and resources.

2. Generative AI: Basic Terms

Deep Learning

A subset of machine learning that uses neural networks with many layers (deep neural networks). It can be applied to supervised, unsupervised, and reinforcement learning.

Fine-Tuning

The process of taking a pre-trained model and continuing its training on a smaller, task-specific dataset. This adapts the model's behavior for specialized applications, like following specific style or formatting.

Generative AI (Gen AI)

AI systems, typically based on large pre-trained models, that generate new content (such as text, images, audio, or code) by learning patterns from large datasets.

Neural Networks

Computational models inspired by the human brain, consisting of layers of interconnected nodes ("neurons") that process data to learn patterns.

Reinforcement Learning

A training method where a model learns by interacting with an environment. It receives rewards for desired actions and penalties for undesired ones (e.g., AlphaGo).

Reinforcement Learning from Human Feedback (RLHF)

A technique to align LLMs with human preferences. It involves training a reward model based on human ratings of model outputs and then using that reward model to guide reinforcement learning that updates the LLM.

Supervised Learning

A training method where a model learns from a labeled dataset, meaning each piece of input data is paired with a correct output. This is the basis for fine-tuning.

Transformers

A neural network architecture designed for sequential data, like text. Its key innovation is the self-attention mechanism, which weighs the importance of different parts of the input.

Unsupervised Learning

A training method where a model learns from unlabeled data, discovering patterns and structures on its own.

3. AI Models

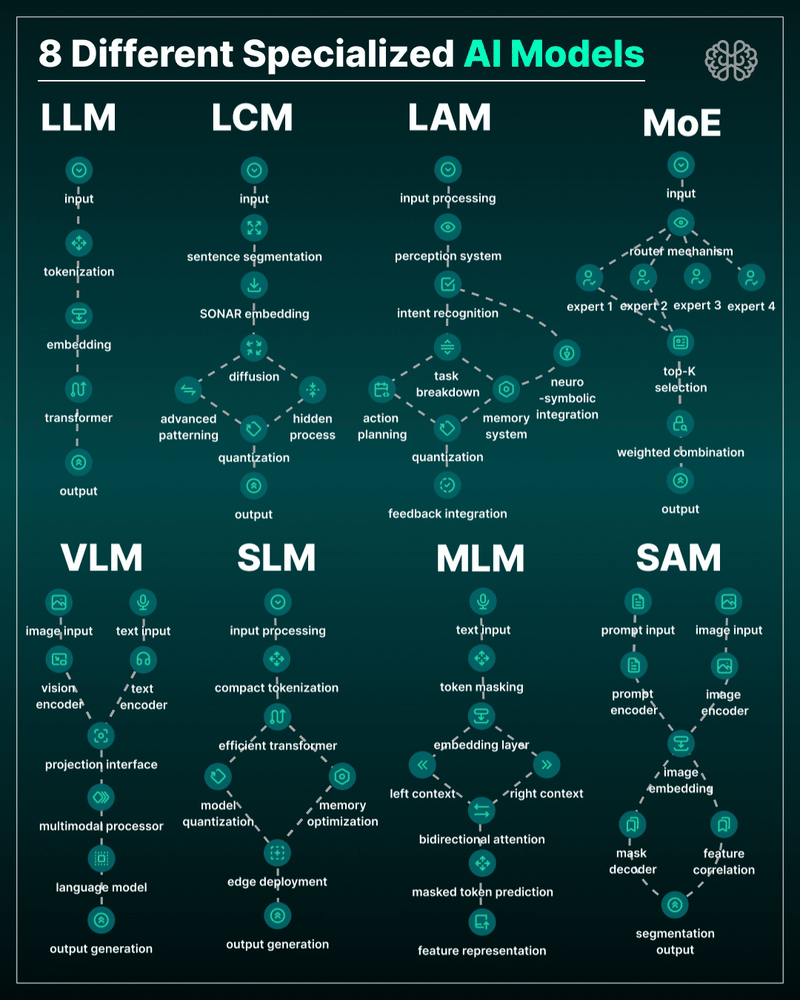

Large Action Models (LAM, advanced)

Models designed not just to understand language but to take action based on it, such as interacting with software or APIs.

Large Language Models (LLMs)

Models trained on large text datasets to understand and generate human-like text. They are deep neural networks, typically pre-trained on unlabeled text and then refined with supervised fine-tuning and RLHF, which makes them strong at natural language understanding and generation (e.g., the GPT models behind ChatGPT).

Latent Concept Models (LCM, advanced)

Powerful models that excel at capturing nuanced or hidden concepts within data.

Masked Language Models (MLM, advanced)

Models trained to predict masked or missing words in a sentence. This approach helps them master context and understand language structure deeply.

Mixture of Experts (MoE)

An architecture that splits part of a model into multiple specialized "expert" sub-networks. A router sends each input to the most relevant experts and combines their outputs, so only a fraction of the model runs for any given input. This improves performance and efficiency.

Segment Anything Models (SAM, advanced)

Models designed for precise image segmentation, capable of identifying and outlining objects in an image with high detail and accuracy.

Small Language Models (SLMs)

More compact models that are ideal for efficiency and speed, especially in resource-constrained environments or for specialized tasks.

Vision-Language Models (VLMs)

Models that handle both text and images, bridging the gap between visuals and language to understand and generate content based on both modalities.

4. Prompting & Context Management

Chain-of-Thought (CoT) Prompting

A technique to help the model think step-by-step. Instead of jumping straight to the answer, you ask it to break down the problem and explain its reasoning first. This usually leads to more accurate results, especially for questions where the model needs to simulate logical reasoning.

Context Management

Since the model has a memory limit, you need to be smart about what info you include. Context management means deciding what matters most, trimming what doesn’t, and structuring it in a way the model can understand and use. Specific techniques include prompt engineering (yes, it’s not dead, despite what some say) and RAG. Some make the mistake of including fine-tuning, but it’s not a context management technique - it modifies the model itself.

Context Window (Token Limit)

The model has a limited memory for each prompt, measured in tokens (chunks of text). The context window sets how much info you can include, like past messages or documents. If the input goes over the limit, something has to be dropped. Many chat applications trim the earliest messages first, so the model effectively forgets the earliest parts.
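To make the trimming idea concrete, here is a minimal sketch of how an application might keep a chat history within a token budget. The `count_tokens` helper is a hypothetical stand-in that simply counts words (real tokenizers split text differently), and dropping the oldest messages first is just one possible policy.

```python
def count_tokens(text):
    # Crude stand-in for a tokenizer: counts whitespace-separated words.
    return len(text.split())


def trim_to_budget(messages, max_tokens):
    """Keep the most recent messages whose combined size fits max_tokens."""
    kept = []
    used = 0
    # Walk from newest to oldest so recent context survives.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))


history = [
    "Hi, I need help with my order",
    "Sure, what is the order number",
    "It is 12345",
    "Thanks, checking now",
]
# With a 12-"token" budget, the oldest message no longer fits.
trimmed = trim_to_budget(history, max_tokens=12)
print(trimmed)
```

In a real product you would likely protect the system prompt from trimming and summarize old turns instead of simply dropping them.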

Few-Shot Learning

Instead of zero examples, you give the model a few. Just enough to show what kind of response you want. Great for formatting tasks, tone matching, or guiding the model when clarity matters without fine-tuning.
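A few-shot prompt is mostly string assembly. The sketch below shows one way to lay it out; the `Input:`/`Output:` labels and the `build_few_shot_prompt` helper are illustrative choices, not a required format.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, a few worked examples, and the new input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # The final "Output:" is left open for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


examples = [
    ("The onboarding was smooth and fast.", "positive"),
    ("The app crashes every time I log in.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each input as positive or negative.",
    examples,
    "Support answered within minutes.",
)
print(prompt)
```

Passing an empty `examples` list turns the same helper into a zero-shot prompt.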

Prompt Engineering

This is the art (and a bit of science) of talking to an AI in the right way. It’s about crafting prompts that get the best results by rephrasing, formatting, or structuring input.

Prompt Injection

An attack where malicious instructions are hidden in prompts to trick an LLM into bypassing its safety protocols, disclosing private data, or deviating from its intended behavior. For example, telling the model to ignore earlier instructions. A real security concern in AI systems.

Temperature

A setting that controls how predictable or creative the model’s response is. Low values make it focused and safe. High values make it more varied, which helps with brainstorming but is less suitable for factual tasks.
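Under the hood, temperature is commonly applied by dividing the model's raw scores (logits) by the temperature before converting them to probabilities. A rough sketch with made-up scores for three candidate tokens:

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores, not from a real model
low = softmax_with_temperature(logits, 0.2)   # sharply favors the top token
high = softmax_with_temperature(logits, 2.0)  # spreads probability out
print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At low temperature almost all probability lands on the top-scoring token. At high temperature the alternatives get a real chance of being sampled.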

Zero-Shot Learning

Instructing an LLM to perform a task without providing any examples in the prompt, relying entirely on its pre-trained knowledge.

5. Retrieval-Augmented Generation

Agentic RAG

A version of RAG where the AI decides on its own when to retrieve information, what to retrieve, and how to use it.

Adaptive RAG

Enhanced RAG that makes the retrieval and generation process more dynamic and self-correcting, adapting to the complexity of a query and the quality of the retrieved information.

CAG (Cache-Augmented Generation)

An alternative to RAG. Instead of retrieving data during each interaction, CAG loads relevant information into the model’s context beforehand. This speeds things up and works well when the data is stable and fits in the model’s memory. It is also called Context-Augmented Generation.

Embedding

A way to convert data, like text or images, into numerical vectors that capture meaning. These vectors help the model understand relationships between different pieces of data and allow for similarity searches.
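Similarity search over embeddings usually comes down to comparing vectors, often with cosine similarity. The three-dimensional vectors below are invented for illustration; real embeddings come from an embedding model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings, hand-written for this example.
embeddings = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "invoice": [0.0, 0.1, 0.9],
}

print(cosine_similarity(embeddings["cat"], embeddings["kitten"]))
print(cosine_similarity(embeddings["cat"], embeddings["invoice"]))
```

Related items ("cat" and "kitten") score close to 1, while unrelated ones ("cat" and "invoice") score close to 0.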

Hybrid RAG

A retrieval approach that combines multiple retrieval methods to improve the quality of context provided to the model. Typically, it merges dense retrieval (using vector similarity) with sparse retrieval (like keyword-based search or BM25). This way, it captures both semantic meaning and exact keyword matches, making the retrieval more robust.
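How the two result lists get merged is a design choice. One popular option (my assumption here, not the only way) is Reciprocal Rank Fusion, which rewards documents that rank well in either list. The document IDs and the constant `k=60` are illustrative.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists: each doc scores sum(1 / (k + rank)) across lists."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


dense_results = ["doc_a", "doc_b", "doc_c"]   # e.g., from vector similarity
sparse_results = ["doc_b", "doc_d", "doc_a"]  # e.g., from BM25 keyword search
fused = reciprocal_rank_fusion([dense_results, sparse_results])
print(fused)
```

Documents that appear in both lists (`doc_a`, `doc_b`) rise to the top, which is the behavior you want from a hybrid retriever.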

HyDE (Hypothetical Document Embeddings, advanced)

A method that boosts retrieval by having the model generate a fictional answer to the query. That generated answer is turned into an embedding, which is then used to find real documents that are similar. This helps connect short queries to more complete responses.

Reranking

A technique used in RAG that improves retrieval results by reordering the initial set of documents or chunks. A model evaluates which items are most relevant and puts the best ones at the top.

Retrieval-Augmented Generation (RAG)

A method where the model first retrieves relevant information from external sources such as a vector store, database, or file system. It then uses that information as context to generate a better response.
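The retrieve-then-generate flow can be sketched in a few lines. To stay self-contained, this toy version ranks documents by word overlap instead of embeddings and stops at building the prompt; the `retrieve` and `build_rag_prompt` helpers and the sample documents are all hypothetical.

```python
def retrieve(query, documents, top_k=2):
    """Rank documents by how many query words they share (toy retriever)."""
    query_words = set(query.lower().split())

    def overlap(doc):
        return len(query_words & set(doc.lower().split()))

    return sorted(documents, key=overlap, reverse=True)[:top_k]


def build_rag_prompt(query, documents, top_k=2):
    """Put the retrieved text into the prompt as context for the model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


documents = [
    "Refunds are processed within 5 business days",
    "Our office is closed on public holidays",
    "Shipping takes 3 to 7 days",
]
question = "how long do refunds take"
rag_prompt = build_rag_prompt(question, documents, top_k=1)
print(rag_prompt)  # this prompt would then be sent to an LLM
```

A production system would swap the toy retriever for a vector store or hybrid search, but the shape of the pipeline stays the same.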

Vector Databases (Vector Stores)

Specialized databases that store and search embeddings efficiently. They are built to handle high-dimensional vectors and find similar items quickly, even at large scale.

You might also benefit from our Interactive RAG simulator:

Recommended reading:

RAG types from the Guide to Context Engineering for PMs

Point 5, Three Essential Agentic RAG Architectures from AI Agent Architectures

Case study from Base44: A Brutally Simple Alternative to Lovable

6. AI Agents

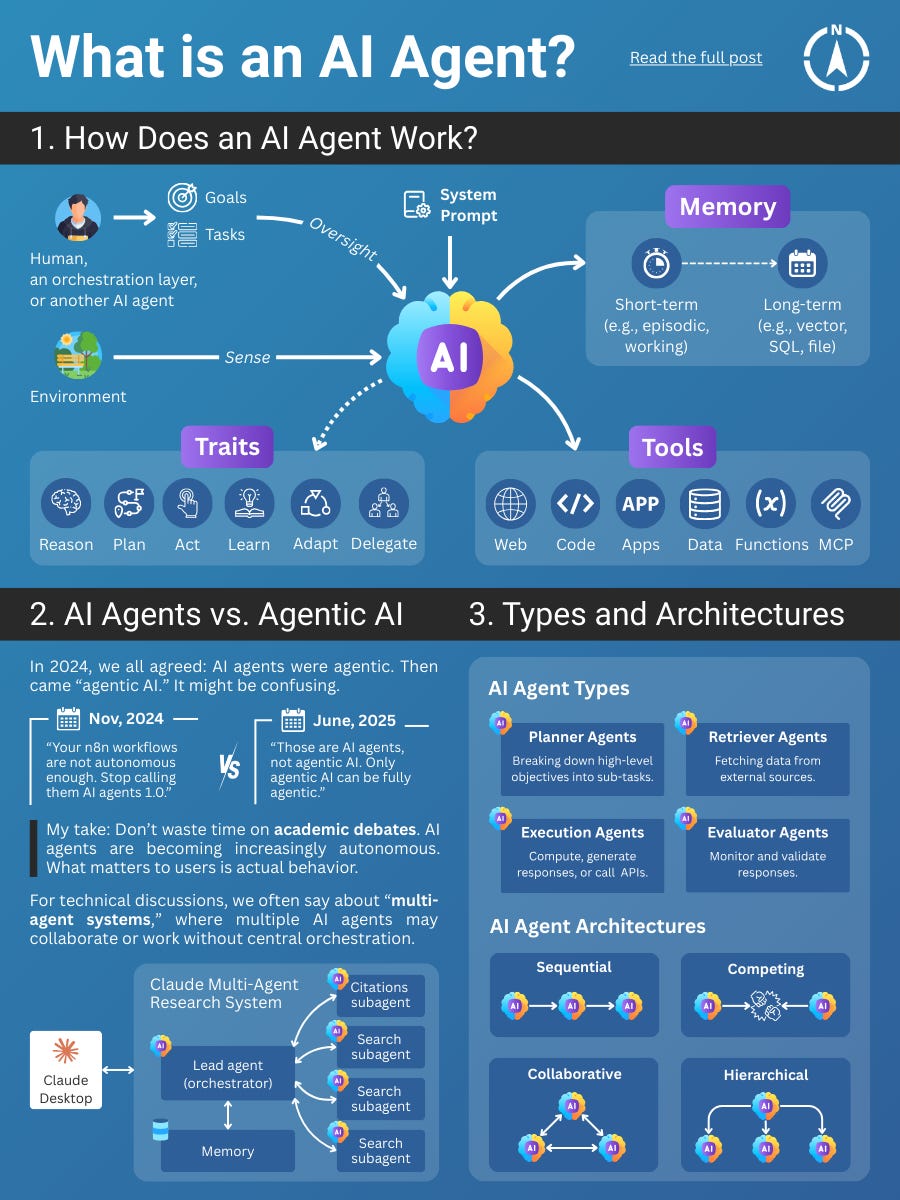

AI Agent

A system where a language model (LLM) takes control of how it completes a task. It decides what tools to use, when to use them, and how to adapt its process as it goes. The agent isn't just responding but actively managing its own steps. Critically, AI agents can have different levels of autonomy.

AI Chain (advanced)

A setup broader than prompt chaining, where different components (including prompts, code, external tools, or even other models) are connected in a sequence. Each component passes its output to the next, forming a pipeline. AI Chains are often used to build full workflows, not just chains of prompts. For example, we extract info using an LLM, validate it with code, enrich it with an API call, then ask the LLM to write a final summary. Think n8n.

Agent-to-Agent (A2A)

A protocol that allows AI agents to talk directly to each other. It supports things like sharing results, passing off tasks, or negotiating responsibilities between agents.

Agentic Workflows

Automated systems where an LLM and supporting tools are connected through predefined logic. The steps are set in code, but the LLM adds intelligence by handling inputs, making decisions, or generating content along the way.

Model Context Protocol (MCP)

An open standard introduced by Anthropic that defines how AI models and agents can connect to external tools, APIs, and data sources in a consistent and secure way. Think of it as a USB-C port for AI.

Multi-Agent System

An architecture where several AI agents work together to solve tasks. Each agent may specialize in something different. They communicate, share information, or divide responsibilities to handle complex problems.

Prompt Chaining

A method where you split a task into smaller steps and run them through the LLM one at a time. Each step is handled with its own prompt, and the output from one step is passed into the next prompt. This helps guide the model through complex tasks or multi-stage reasoning. For example, we ask the model to summarize a text, then ask it to generate a title based on that summary.
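The summarize-then-title example can be sketched as two calls where the first output feeds the second prompt. `fake_llm` is a hypothetical stand-in with canned answers so the snippet runs without any API; in practice you would pass in a function that calls your model provider.

```python
def fake_llm(prompt):
    # Hypothetical stand-in for a real model call, with canned responses.
    if prompt.startswith("Summarize"):
        return "Sales grew 20% after the pricing change."
    if prompt.startswith("Write a title"):
        return "Pricing Change Lifts Sales"
    return ""


def summarize_then_title(text, llm):
    """Two-step chain: the summary from step 1 is the input to step 2."""
    summary = llm(f"Summarize in one sentence:\n{text}")
    title = llm(f"Write a title for this summary:\n{summary}")
    return summary, title


summary, title = summarize_then_title("(long quarterly report text)", fake_llm)
print(summary)
print(title)
```

Because each step has its own prompt, you can evaluate and improve the steps separately.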

7. Data & Feature Management

Concept Drift

A specific kind of Data Drift where the underlying relationship between inputs and the target variable changes over time. For example, if customer behavior evolves, a model trained on past preferences might no longer make accurate predictions.

Data Annotation / Labeling

The process of tagging or categorizing raw data to create a labeled dataset for supervised learning. This step is essential for training models to recognize patterns and usually requires a lot of manual effort. Another context in which we use these terms is error analysis (part of AI evals).

Data Augmentation

A method used to expand and diversify a training dataset by making slight changes to existing data. Examples include rotating images, adding noise, or rephrasing sentences to help the model generalize better.

Data Drift

When the data a model sees in production starts to differ from the data it was trained on. This shift can reduce accuracy because the model is no longer operating in the same conditions it learned from.

Feature Store (advanced)

A feature store is like a shared database where machine learning teams keep useful data pieces (called features) that help models make predictions. These features are cleaned and ready to use, so teams don’t have to rebuild them every time. It also makes sure the model uses the same information when it’s being trained and when it’s used in the real world.

Model Drift

This is a broader, more casual term than Data Drift and Concept Drift. It usually means that a model's performance is getting worse over time but doesn’t specify why. It might be caused by data drift, concept drift, or something else like bugs.

8. AI Evals (AI Evaluation Systems)

AI Evaluation System

A framework of automated and human-in-the-loop evaluations (e.g., code-based scripts, LLM-as-judge checks) that measures whether an AI product “actually works,” supporting rapid learning cycles and alignment with user and business goals.

Bottom-Up vs. Top-Down Analysis

Two approaches to defining AI metrics. Bottom-up analysis identifies application-specific failure modes directly from the data. Top-down analysis applies generic metrics (like hallucination or toxicity) that may miss domain-specific nuances.

Code-Based Evals

Automated evaluators implemented as deterministic scripts (e.g., using Python with XML, SQL, or regex checks) that objectively flag specific, rule-based failure modes.
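As an illustration, here are two small code-based evaluators, each targeting a single hypothetical failure mode: "output is not valid JSON" and "output leaks an email address". The function names and the simplified email pattern are my own assumptions.

```python
import json
import re

# Simplified email pattern, good enough for a sketch (not RFC-complete).
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def eval_valid_json(output):
    """Pass if the model output parses as JSON."""
    try:
        json.loads(output)
        return True
    except json.JSONDecodeError:
        return False


def eval_no_email_leak(output):
    """Pass if the model output contains no email address."""
    return EMAIL_PATTERN.search(output) is None


print(eval_valid_json('{"plan": "pro"}'))
print(eval_no_email_leak("You can reach Jane at jane@example.com"))
```

Each check returns a binary pass/fail, which makes it cheap to run on every trace and easy to track over time.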

Error Analysis

The process of reviewing model outputs (LLM traces), labeling errors, and classifying them into distinct failure modes. This is repeated iteratively until no new failure modes emerge and is a high-ROI activity in AI product development.

F1-Score

The harmonic mean of precision and recall, providing a single score that balances both metrics.
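A short sketch of the arithmetic, using the standard definitions of precision and recall from this glossary. The counts in the example are made up.

```python
def precision_recall_f1(true_pos, false_pos, false_neg):
    """Compute precision, recall, and F1 from raw counts."""
    predicted_pos = true_pos + false_pos
    actual_pos = true_pos + false_neg
    precision = true_pos / predicted_pos if predicted_pos else 0.0
    recall = true_pos / actual_pos if actual_pos else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # Harmonic mean: punishes a large gap between precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 8 true positives, 2 false positives, 4 false negatives.
precision, recall, f1 = precision_recall_f1(8, 2, 4)
print(round(precision, 3), round(recall, 3), round(f1, 3))
```

Because it is a harmonic mean, F1 sits closer to the lower of the two values, so a model cannot score well by maximizing only one of them.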

Failure Modes

Coherent and non-overlapping categories of errors that emerge from analyzing LLM traces (e.g., hallucination, incorrect format, or missed instruction). Each binary failure type is easy to recognize and forms the basis for targeted metrics.

LLM Traces

A complete record of an LLM pipeline's execution, including the user query, intermediate reasoning steps, any tool calls, and the final output. These traces serve as the raw data used in error analysis to identify patterns and failure modes.

LLM-as-Judge Evals

Evaluation modules where a secondary LLM acts as the "judge," assessing outputs against a single failure mode in a binary pass/fail format. This is useful for complex or subjective checks but requires monitoring for alignment with human judgment.

Precision

A metric that measures the proportion of true positives out of all positive predictions (True Positives / (True Positives + False Positives)).

Recall (True Positive Rate, TPR)

A metric that measures how often a model correctly identifies a positive case. For judge alignment, this answers: "How often does the judge say 'Pass' when a human said 'Pass'?"

Synthetic Traces

Artificially generated LLM traces created by sampling combinations of hypothesized failure dimensions. These are converted into natural-language queries and run through the model pipeline when production data is scarce.

Theoretical Saturation

The point in an error analysis cycle when reviewing additional LLM traces yields no new failure modes, indicating that the failure taxonomy is complete.

True Negative Rate (TNR)

A metric that measures how often a model correctly identifies a negative case. For judge alignment, this answers: "How often does the judge say 'Fail' when a human said 'Fail'?"
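For judge alignment, both TPR and TNR can be computed from paired human and judge labels. A minimal sketch, assuming labels are the strings "Pass" and "Fail" (the sample labels are invented):

```python
def judge_alignment(human_labels, judge_labels):
    """Return (TPR, TNR) of the judge, treating human labels as ground truth."""
    pairs = list(zip(human_labels, judge_labels))
    judge_when_human_pass = [j for h, j in pairs if h == "Pass"]
    judge_when_human_fail = [j for h, j in pairs if h == "Fail"]
    tpr = (
        judge_when_human_pass.count("Pass") / len(judge_when_human_pass)
        if judge_when_human_pass
        else None
    )
    tnr = (
        judge_when_human_fail.count("Fail") / len(judge_when_human_fail)
        if judge_when_human_fail
        else None
    )
    return tpr, tnr


human = ["Pass", "Pass", "Pass", "Fail", "Fail"]
judge = ["Pass", "Pass", "Fail", "Fail", "Pass"]
tpr, tnr = judge_alignment(human, judge)
print(tpr, tnr)
```

Looking at both numbers matters: a judge that always says "Pass" has a perfect TPR and a TNR of zero.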

Recommended reading:

AI Evals: How to Find The Right AI Product Metrics (Error Analysis)

9. Deployment & Operations

Latency

The time it takes for an AI system to respond after getting a request. Lower latency means faster responses, which is especially important in real-time applications like chatbots or fraud detection.

MLOps / ModelOps

A set of tools and practices that help teams manage AI models throughout their lifecycle. This includes training, testing, deploying to production, monitoring performance, and updating models as needed. The goal is to make working with AI models as smooth and reliable as software engineering.

Model-as-a-Service (MaaS)

A way to use pre-trained AI models through a cloud API, without needing to build or host the models yourself. Examples include the OpenAI API or Gemini API. This lets teams quickly add AI features to their apps with less effort and cost.

Throughput

The number of requests or tasks an AI system can process in a given time, such as per second or per minute. High throughput is important for scaling and handling many users at once.

10. Model Performance & Cost

Inference

The process of using a trained model to make predictions on new data. In the case of large language models, this means generating a response based on the input prompt. It’s what happens when you “ask” the model something.

Time to First Token (TTFT)

The time it takes from sending a request to when the first token of the response appears. Even if the full answer takes longer, a fast TTFT makes the system feel more responsive to the user.

Tokens Per Second

A measure of how fast a language model generates output. It tracks how many tokens (chunks of text) the model produces each second. Faster speeds mean quicker responses and better user experience.
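Both TTFT and tokens per second can be derived from timestamps recorded while a response streams in. The sketch below assumes you log the request time and the arrival time of each token; measuring generation speed from the first token onward is one common convention, not the only one.

```python
def streaming_metrics(request_time, token_times):
    """Return (TTFT in seconds, tokens per second after the first token)."""
    ttft = token_times[0] - request_time
    generation_time = token_times[-1] - token_times[0]
    if generation_time > 0:
        tokens_per_second = (len(token_times) - 1) / generation_time
    else:
        tokens_per_second = None  # not enough data to measure speed
    return ttft, tokens_per_second


# Hypothetical timestamps (seconds): request at 0.0, five tokens streamed back.
ttft, tps = streaming_metrics(0.0, [0.5, 0.6, 0.7, 0.8, 0.9])
print(ttft, tps)
```

Tracking the two separately helps with diagnosis: a slow TTFT points at queueing or prompt processing, while low tokens per second points at generation itself.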

Quantization

A method to shrink the size of a model and make it run faster. It works by converting the model’s internal numbers from high precision (like 32-bit floats) to smaller ones (like 8-bit integers). This helps reduce memory usage and speed up inference, usually with only a small drop in accuracy.
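To show the idea, here is a toy version of symmetric 8-bit quantization on a handful of made-up weights. Real quantization schemes are more involved (per-channel scales, calibration data, and so on), so treat this as a sketch of the principle only.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.12, -0.5, 0.33, 1.27]  # hypothetical model weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
print(quantized)
print([round(w, 4) for w in restored])
```

Each weight now needs one byte instead of four, and the restored values differ from the originals by at most half a quantization step.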

11. Safety, Ethics & Alignment

Bias & Fairness

AI models can pick up biased patterns from the data they were trained on. This can lead to unfair or harmful outcomes, especially when decisions affect people. Fairness means detecting, reducing, and preventing those biases from impacting results.

Explainability / Interpretability

The ability to understand why a model made a certain prediction. This helps people trust the system, find errors, and meet legal or ethical standards. Techniques might show which inputs mattered most or how decisions were made step by step.

Hallucination

When a language model makes something up and presents it as if it were true. The response might sound convincing, but the facts are incorrect or completely invented. This is a major issue for tasks that require accuracy or trust, especially in AI Agents and Multi-Agent Systems.

Human-in-the-Loop (HITL)

A setup where humans stay involved in the AI’s workflow. People might review outputs, make the final decision, or correct the model when it’s unsure. This helps improve safety, quality, and accountability, especially in sensitive or high-risk situations.

12. Neural Networks & Transformers

Activation Function (advanced)

A mathematical rule inside a neuron that decides how it reacts to input. It helps the network capture complex patterns by adding non-linear behavior to the model.

Backpropagation (advanced)

The learning process used in neural networks. It calculates how much each weight contributed to the error and then updates those weights to reduce the loss in future predictions.
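In a real network, backpropagation applies the chain rule layer by layer. The sketch below shrinks that to the simplest possible case, a "network" with a single weight `w` predicting `y = w * x`, so the gradient is one derivative written by hand. The data, learning rate, and step count are arbitrary choices for illustration.

```python
def train_single_weight(xs, ys, learning_rate=0.1, steps=50):
    """Fit y = w * x by repeatedly stepping against the loss gradient."""
    w = 0.0
    for _ in range(steps):
        # Gradient of mean squared error with respect to w:
        # d/dw mean((w*x - y)^2) = mean(2 * (w*x - y) * x)
        gradient = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * gradient  # update the weight to reduce the loss
    return w


# Toy data generated by y = 2 * x, so the weight should approach 2.
learned_w = train_single_weight([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
print(round(learned_w, 4))
```

Deep learning frameworks compute these gradients automatically for millions or billions of weights, but the update rule is the same idea.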

Feedforward Network (advanced)

A part of the transformer that processes the results from the self-attention step. It’s a small neural network that helps the model refine its understanding before passing data to the next layer.

Loss Function

A way to measure how far off the model’s predictions are from the correct answers. It gives the model feedback during training so it knows what to improve.

Masked Attention (advanced)

Used in transformer decoders to make sure the model doesn’t look ahead when generating text. This is important during training so the model learns to predict the next word without cheating.

Neural Networks

A type of machine learning model inspired by how the human brain works. It’s made up of layers of connected units called neurons. Each neuron processes input data and passes signals forward to help the model learn patterns.

Positional Encoding (advanced)

Since transformers don’t process data in order by default, this adds information about the position of each token in the sequence. It helps the model understand the order of words.
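There are several ways to encode position. One well-known scheme is the sinusoidal encoding from the original Transformer paper, sketched below. Many modern models use other schemes (such as learned or rotary encodings), so this is one example rather than the definitive method.

```python
import math


def sinusoidal_positional_encoding(position, dim):
    """Return a dim-length vector describing a token's position."""
    encoding = []
    for i in range(dim):
        # Each pair of dimensions (2k, 2k+1) shares one frequency.
        pair_index = i - (i % 2)
        angle = position / (10000 ** (pair_index / dim))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


for position in range(3):
    vector = sinusoidal_positional_encoding(position, dim=4)
    print(position, [round(v, 3) for v in vector])
```

Each position gets a distinct vector, which is added to the token's embedding so the model can tell "dog bites man" from "man bites dog".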

Self-Attention (advanced)

A key mechanism in transformers that lets each token in a sequence look at the others. This helps the model understand relationships between words, even if they’re far apart.
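A stripped-down sketch of scaled dot-product self-attention in plain Python. To keep it short, it assumes the token vectors are used directly as queries, keys, and values; real transformers first apply learned projection matrices and use multiple heads. The optional `causal` flag applies the masking described under Masked Attention.

```python
import math


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens, causal=False):
    """Each output is a weighted average of the token vectors it attends to."""
    dim = len(tokens[0])
    outputs = []
    for i, query in enumerate(tokens):
        scores = []
        for j, key in enumerate(tokens):
            if causal and j > i:
                scores.append(float("-inf"))  # hide future tokens
            else:
                dot = sum(q * k for q, k in zip(query, key))
                scores.append(dot / math.sqrt(dim))
        weights = softmax(scores)
        outputs.append(
            [sum(w * value[d] for w, value in zip(weights, tokens)) for d in range(dim)]
        )
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0]]  # two hypothetical token vectors
print(self_attention(tokens))
print(self_attention(tokens, causal=True))
```

With `causal=True`, the first token can only attend to itself, so its output is unchanged. Without the mask, every token mixes in information from all the others.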

Tokenization

The step where input text is broken down into smaller parts, called tokens. These can be words, characters, or subwords. The model processes these tokens instead of raw text.

Weights of a Model

The internal settings of a neural network that get adjusted during training. They control how strongly each input affects the model’s prediction and are what the model "learns" over time.

Keep Learning

Thanks for reading The Product Compass!

You might also explore our practical step-by-step guides available in the AI Product Management section and office hours recordings.