The Ultimate Guide to AI Observability and Evaluation Platforms

Every AI observability & eval platform, explained. LangSmith, Langfuse, Arize, OpenAI Evals, Google Stax, PromptLayer & more - with real examples.

Everyone’s talking about observability and evals right now. My feed is full of frameworks and theory.

But here’s the problem: almost no one shows you how to actually run them.

After taking the AI Evals cohort, I spent 100+ hours testing platforms, breaking down the differences, and finding what’s common across all of them.

By the end, you’ll know:

Key Features of AI Observability and Evals Platforms

Comparison of AI Observability and Evals Platforms

How to Run the Evals Loop Yourself (step-by-step, no fluff)

Hey, Paweł here. Welcome to The Product Compass, the #1 actionable AI PM newsletter. Every week I share hands-on advice and step-by-step guides for AI PMs.


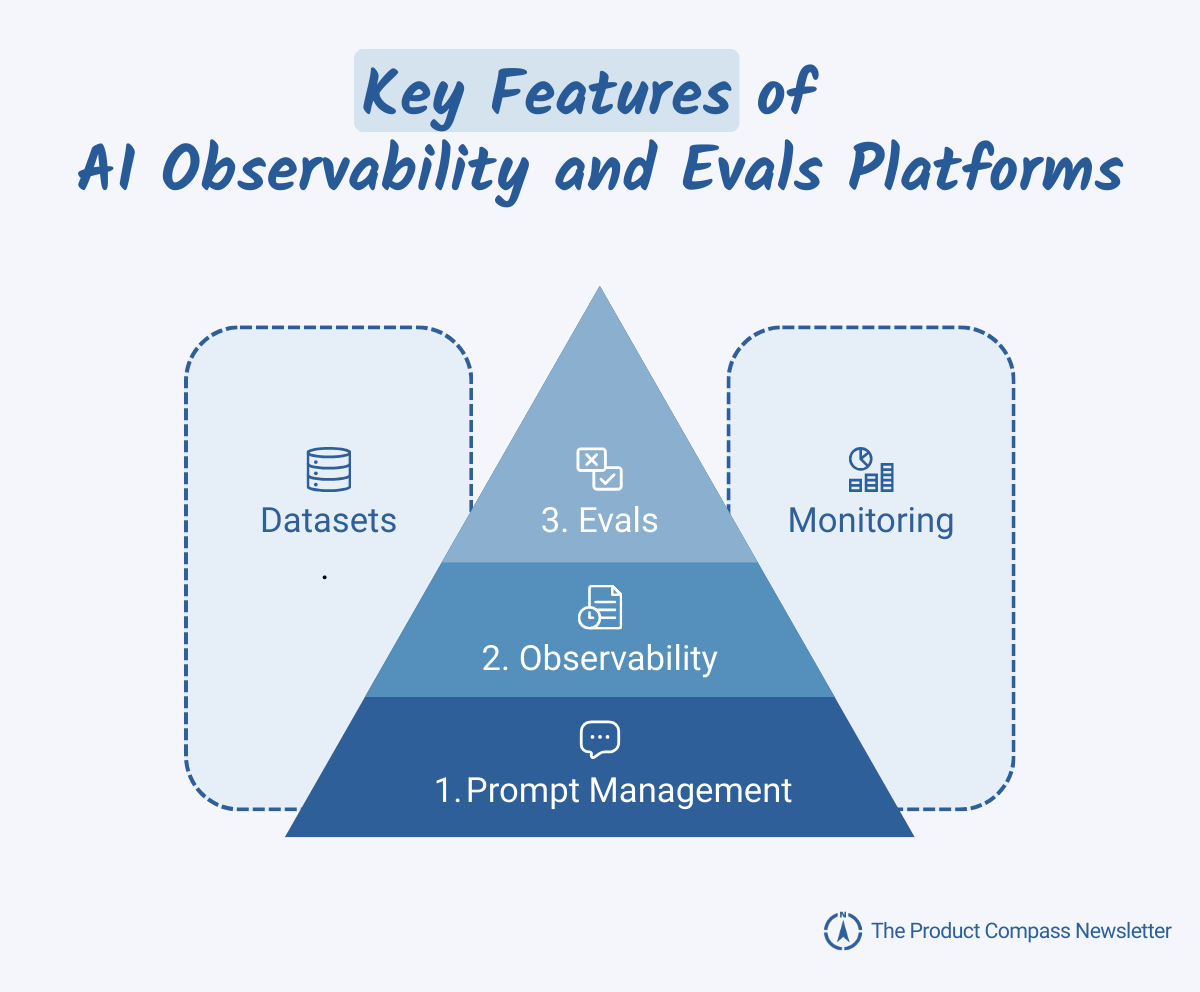

1. Key Features of AI Observability and Evals Platforms

After testing the major platforms, I noticed they share three core capabilities. The best platforms build each one on top of the last.

Capability 1: Prompt Management

Prompt management lets you define reusable prompts and test them in a playground.

Without it, you end up copy-pasting prompts and breaking things when you tweak them. With prompt management, you can:

Version prompts: call a specific version from your app so nothing breaks in prod.

Parametrize inputs: use variables instead of hardcoding everything.

Replay prompts on historical data: see how a new version would have performed.

A/B test in production: try new ideas safely.

Compare models: test prompts across models and parameters.

Think of it as Git for your prompts. A single place to manage, test, and evolve them.
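To make versioning, variables, replay, and A/B testing concrete, here is a minimal sketch that uses a tiny in-memory registry. It is not any platform's API. `PROMPTS`, `render`, `ab_version`, and the example prompts are all hypothetical. Real tools store versions server-side and let your app fetch them by name and version or label.

```python
import hashlib
from string import Template

# Hypothetical in-memory registry keyed by (prompt name, version).
PROMPTS = {
    ("support_reply", 1): Template("Answer the customer politely: $question"),
    ("support_reply", 2): Template(
        "You are a concise support agent. Answer in under $max_words words: $question"
    ),
}

def render(name: str, version: int, **variables) -> str:
    """Fetch a pinned prompt version and fill in its variables.

    Raises KeyError if the version does not exist or a variable is missing.
    """
    return PROMPTS[(name, version)].substitute(**variables)

def ab_version(user_id: str, b_share: float = 0.1) -> int:
    """Deterministic A/B split: a given user always lands on the same version."""
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return 2 if bucket < b_share * 100 else 1

# Replay: render the new version against historical inputs
# (you would then send these to the model and compare the outputs).
history = [{"question": "Where is my order?"}, {"question": "Can I get a refund?"}]
replayed = [render("support_reply", 2, max_words=50, **row) for row in history]
print(replayed[0])
# You are a concise support agent. Answer in under 50 words: Where is my order?

# In production, pick the version per user instead of hardcoding it.
prompt = render("support_reply", ab_version("user-42"), max_words=50, question="Hi!")
```

Hashing the user ID rather than calling `random` keeps each user on one variant across sessions. That assignment stays stable, which is what makes the A/B comparison fair.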

Example from Arize with a short comment:

Example from OpenAI Platform with a short comment:

Capability 2: Observability

Observability is about logging and understanding what LLMs are actually doing.

There are two aspects that matter:

Aspect 1: What gets logged

There are two types of LLM logs:

Requests (aka completions, LLM calls): one prompt → one response. One row in the log.

Traces (aka chains, threads, runs): connected requests in one workflow. Example: step 1 draft → step 2 critique → step 3 rewrite. Perfect for debugging agents, agentic workflows, and chatbots.
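This minimal sketch of the data model shows the difference between a request and a trace. It assumes a trace is just an ID plus an ordered list of requests. The `Request`/`Trace` classes and the `fake_llm` stub are hypothetical. Real platforms also record timestamps, token counts, costs, and nested spans.

```python
import uuid
from dataclasses import dataclass, field

@dataclass
class Request:
    """One LLM call: one prompt in, one response out. One row in the log."""
    step: str
    prompt: str
    response: str

@dataclass
class Trace:
    """One workflow run: several connected requests sharing a trace ID."""
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    requests: list[Request] = field(default_factory=list)

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return f"<response to: {prompt[:40]}>"

def run_step(trace: Trace, step: str, prompt: str) -> str:
    """Call the model and attach the request to the trace."""
    response = fake_llm(prompt)
    trace.requests.append(Request(step, prompt, response))
    return response

# Draft -> critique -> rewrite: three requests, one trace.
trace = Trace()
draft = run_step(trace, "draft", "Write a tagline for a neighborhood bakery.")
critique = run_step(trace, "critique", f"Critique this tagline: {draft}")
final = run_step(trace, "rewrite", f"Rewrite the tagline {draft} using: {critique}")

for request in trace.requests:
    print(trace.trace_id[:8], request.step)
```

When debugging an agent, the shared `trace_id` is what lets you see that a bad rewrite came from a bad critique rather than from the rewrite prompt itself.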

Example from LangSmith with a short comment:

Aspect 2: How logs get captured

Four common ways:

Direct API calls: you call the observability platform's API to log each LLM request and response (works in n8n, too).

Vendor SDKs/wrappers: auto-log every call, at the cost of more vendor lock-in.

OpenTelemetry: a vendor-neutral standard that integrates with existing infra. Requires instrumenting the code around each LLM call with OTEL libraries.

Proxy/gateway: the easiest setup. You swap the base URL, and the vendor intercepts the traffic.
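Here is a minimal sketch of what an SDK wrapper does under the hood: a decorator that records the prompt, output, and latency of every call, so your call sites barely change. The `logged` decorator, the in-memory `LOG` list, and the echoing `call_llm` stub are hypothetical. A real vendor SDK would send these records to its backend. OpenTelemetry and proxies capture roughly the same data at a different layer.

```python
import functools
import time

LOG: list[dict] = []  # stand-in for a vendor's log store (hypothetical)

def logged(model: str):
    """Hypothetical wrapper: records every call's input, output, and latency."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(prompt: str, **kwargs):
            start = time.perf_counter()
            output = fn(prompt, **kwargs)
            LOG.append({
                "model": model,
                "prompt": prompt,
                "output": output,
                "latency_ms": (time.perf_counter() - start) * 1000,
            })
            return output
        return wrapper
    return decorator

@logged(model="demo-model")
def call_llm(prompt: str) -> str:
    # Replace with a real provider call; this stub just uppercases the prompt.
    return prompt.upper()

call_llm("hello")
print(LOG[0]["model"], LOG[0]["output"])  # demo-model HELLO
```

The convenience is also the lock-in. Every call now flows through the vendor's wrapper, so switching vendors means touching every wrapped function.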

Using Helicone as an LLM proxy

Many platforms support several methods simultaneously. For example:

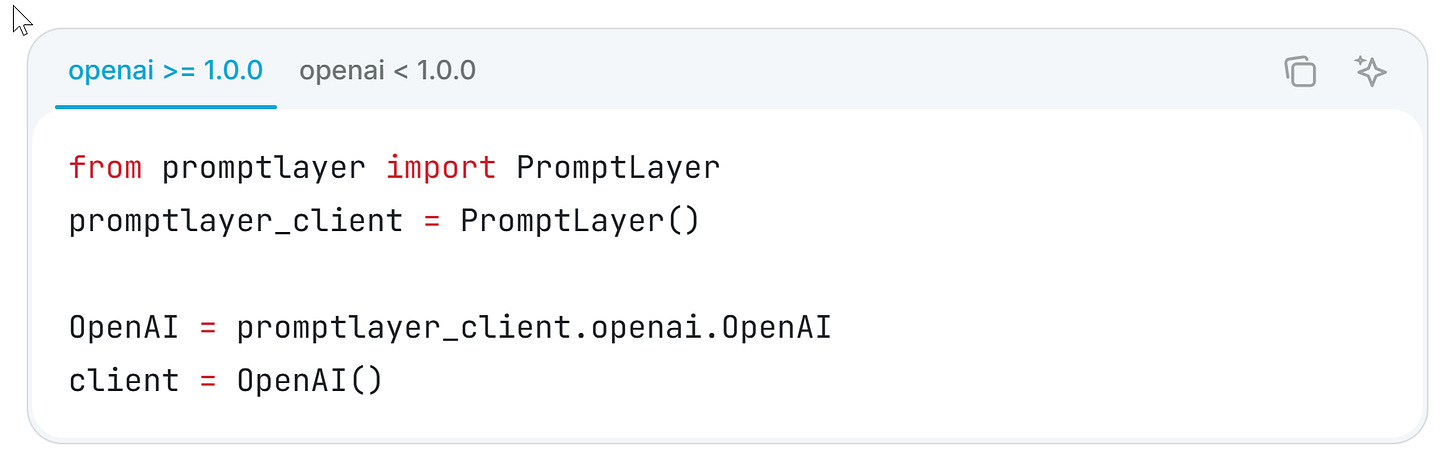

PromptLayer offers both an SDK wrapper and a REST API.

LangSmith has SDKs, an API, and OpenTelemetry integrations.

What you should care about:

Do you capture all LLM calls or just a subset?

How will you handle OpenAI Responses API calls? Are those logged, too?

Can you combine multiple LLM calls into one trace?

Are you okay with lock-in or do you need portability?

How hard would migration be, including artifacts like historical traces, evals, datasets, and dashboards?

Capability 3: Evaluations

If observability tells you what happened, evaluations tell you whether it was good.

The most important aspects:

Eval types and workflows

Code-based evals: objective checks like JSON/XML validity, SQL, or regex. Fast, cheap, deterministic.

LLM-as-judge evals: use another LLM as a judge. Great for complex or subjective checks.

Human evals (labels): label examples as good/bad (binary is easier to manage than granular scores).

Online evals (automatic sampling): pull a slice of production traffic for evaluation instead of manually uploading test sets.

Labeling queues: a queue of examples for you or your team to score.

Agreement tracking: how often human labels agree with model judgments (e.g., precision, recall/TPR, and TNR).

Error analysis: the process of reviewing traces and identifying failure modes, which matters most when you're just starting out. Platforms don't support this well yet.
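This sketch contrasts code-based evals with an LLM-as-judge eval. The JSON check and the regex are deterministic. The judge is just a prompt plus a verdict parser. The `ORD-12345` ID format, the judge prompt wording, and the `judge` callable are assumptions: pass in whatever function calls your model.

```python
import json
import re

def is_valid_json(output: str) -> bool:
    """Code-based eval: does the output parse as JSON?"""
    try:
        json.loads(output)
        return True
    except json.JSONDecodeError:
        return False

def has_order_id(output: str) -> bool:
    """Code-based eval: does the output contain an ID like ORD-12345? (hypothetical format)"""
    return re.search(r"\bORD-\d{5}\b", output) is not None

JUDGE_PROMPT = """You are grading a support reply.
Question: {question}
Reply: {reply}
Answer PASS if the reply is polite and answers the question, otherwise FAIL."""

def llm_judge(question: str, reply: str, judge) -> bool:
    """LLM-as-judge eval: `judge` is any function that sends a prompt to a model."""
    verdict = judge(JUDGE_PROMPT.format(question=question, reply=reply))
    return verdict.strip().upper().startswith("PASS")

print(is_valid_json('{"status": "shipped"}'), is_valid_json("status: shipped"))  # True False
print(has_order_id("Your order ORD-48213 ships today."))  # True
print(llm_judge("Where is my order?", "It ships today!", judge=lambda p: "PASS"))  # True
```

The binary PASS/FAIL verdict matches the advice above: binary labels are easier to compare against human judgments than granular scores.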

What matters

Can you automate evals, or are you stuck exporting traces?

Can you reuse production data?

Can you track human vs. model agreement?
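Agreement tracking reduces to a confusion matrix between human labels and judge verdicts. This sketch assumes binary labels and treats "pass" (`True`) as the positive class. Recall and TPR are the same number. The label lists are made-up examples.

```python
def agreement(human: list[bool], judge: list[bool]) -> dict[str, float]:
    """Agreement between human labels and judge verdicts; True ("pass") is the positive class."""
    if len(human) != len(judge):
        raise ValueError("human and judge labels must be the same length")
    pairs = list(zip(human, judge))
    tp = sum(h and j for h, j in pairs)          # both say pass
    tn = sum(not h and not j for h, j in pairs)  # both say fail
    fp = sum(not h and j for h, j in pairs)      # judge passes what a human failed
    fn = sum(h and not j for h, j in pairs)      # judge fails what a human passed
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall_tpr": tp / (tp + fn) if tp + fn else 0.0,
        "tnr": tn / (tn + fp) if tn + fp else 0.0,
    }

human_labels = [True, True, True, False, False, True]
judge_labels = [True, True, False, False, True, True]
metrics = agreement(human_labels, judge_labels)
print(metrics)  # {'precision': 0.75, 'recall_tpr': 0.75, 'tnr': 0.5}
```

TNR is the number to watch. A judge that passes everything scores a perfect TPR but a TNR of 0, because it never catches a real failure.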

Done well, evals close the loop: log → sample → evaluate → improve.
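Here is the log → sample → evaluate → improve loop as one small script. The traces are synthetic. The 20% sampling rate and the JSON-validity check stand in for whatever sampling and evals your platform runs. Hashing the trace ID makes sampling deterministic, so reruns evaluate the same slice.

```python
import hashlib
import json

def sampled(trace_id: str, rate: float) -> bool:
    """Deterministic sampling: a given trace is always either in or out."""
    bucket = int(hashlib.sha256(trace_id.encode()).hexdigest(), 16) % 10_000
    return bucket < rate * 10_000

def is_valid_json(output: str) -> bool:
    """Code-based eval used as the quality check in this sketch."""
    try:
        json.loads(output)
        return True
    except json.JSONDecodeError:
        return False

# 1. Log: synthetic production traces (hypothetical data).
logs = [
    {"id": f"t{i}", "version": "v1" if i % 2 else "v2",
     "output": "not json" if i % 3 == 0 else '{"ok": true}'}
    for i in range(1000)
]

# 2. Sample: evaluate about 20% of traffic instead of all of it.
sample = [t for t in logs if sampled(t["id"], rate=0.2)]

# 3. Evaluate: pass rate per prompt version.
results: dict[str, list[bool]] = {}
for t in sample:
    results.setdefault(t["version"], []).append(is_valid_json(t["output"]))
for version, passed in sorted(results.items()):
    print(f"{version}: {sum(passed) / len(passed):.0%} pass over {len(passed)} samples")

# 4. Improve: collect the failures to read, fix the prompt, and rerun the loop.
failures = [t for t in sample if not is_valid_json(t["output"])]
```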

Two Cross-Cutting Capabilities: Datasets and Monitoring

These cut across everything:

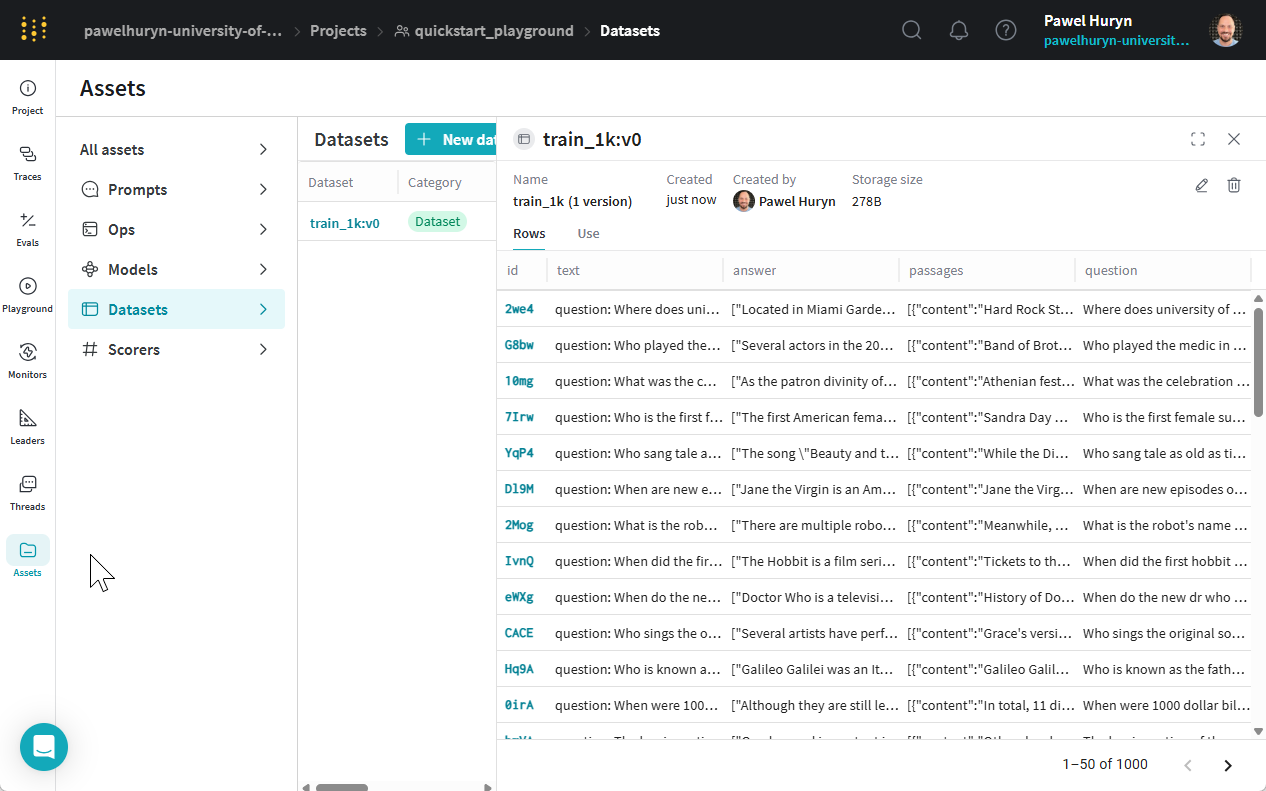

Datasets: the glue between prompt management, observability, and evals. You can use datasets to replay prompts, build eval benchmarks, and track improvements over time. Good platforms let you version, label, and reuse them.

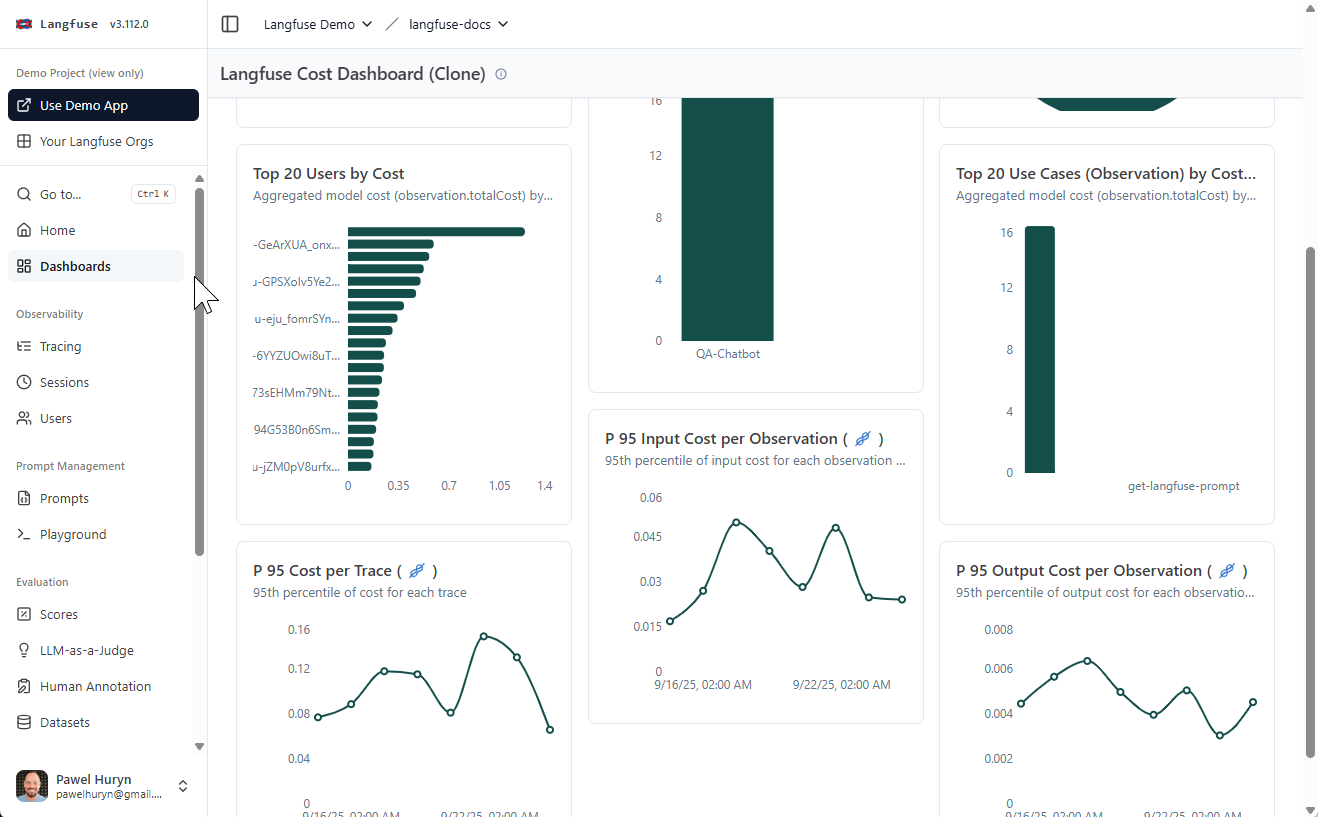
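Dataset versioning can be sketched by freezing each snapshot under a content hash. Benchmark results then always point at the exact examples they were run on. The `Dataset` class and the hashing scheme are assumptions for illustration; each platform has its own versioning model.

```python
import hashlib
import json
from dataclasses import dataclass, field

@dataclass
class Dataset:
    """Hypothetical versioned dataset: each commit freezes a snapshot under a content hash."""
    name: str
    versions: dict[str, list[dict]] = field(default_factory=dict)

    def commit(self, examples: list[dict]) -> str:
        payload = json.dumps(examples, sort_keys=True).encode()
        version_id = hashlib.sha256(payload).hexdigest()[:12]
        self.versions[version_id] = [dict(e) for e in examples]  # copy so later edits don't leak in
        return version_id

ds = Dataset("support-bot-regressions")
v1 = ds.commit([
    {"input": "Where is my order?", "label": "pass"},
    {"input": "Cancel my subscription", "label": "fail"},
])
v2 = ds.commit(ds.versions[v1] + [{"input": "Do you ship to Canada?", "label": "pass"}])
print(len(ds.versions[v1]), len(ds.versions[v2]))  # 2 3
```

Because the ID is derived from the content, committing identical examples twice returns the same version instead of creating a duplicate.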

Monitoring: goes beyond raw logging. It means running evals continuously on production traffic, surfacing drift, and alerting you when quality drops. Monitoring turns evals from a one-off project into an ongoing process.

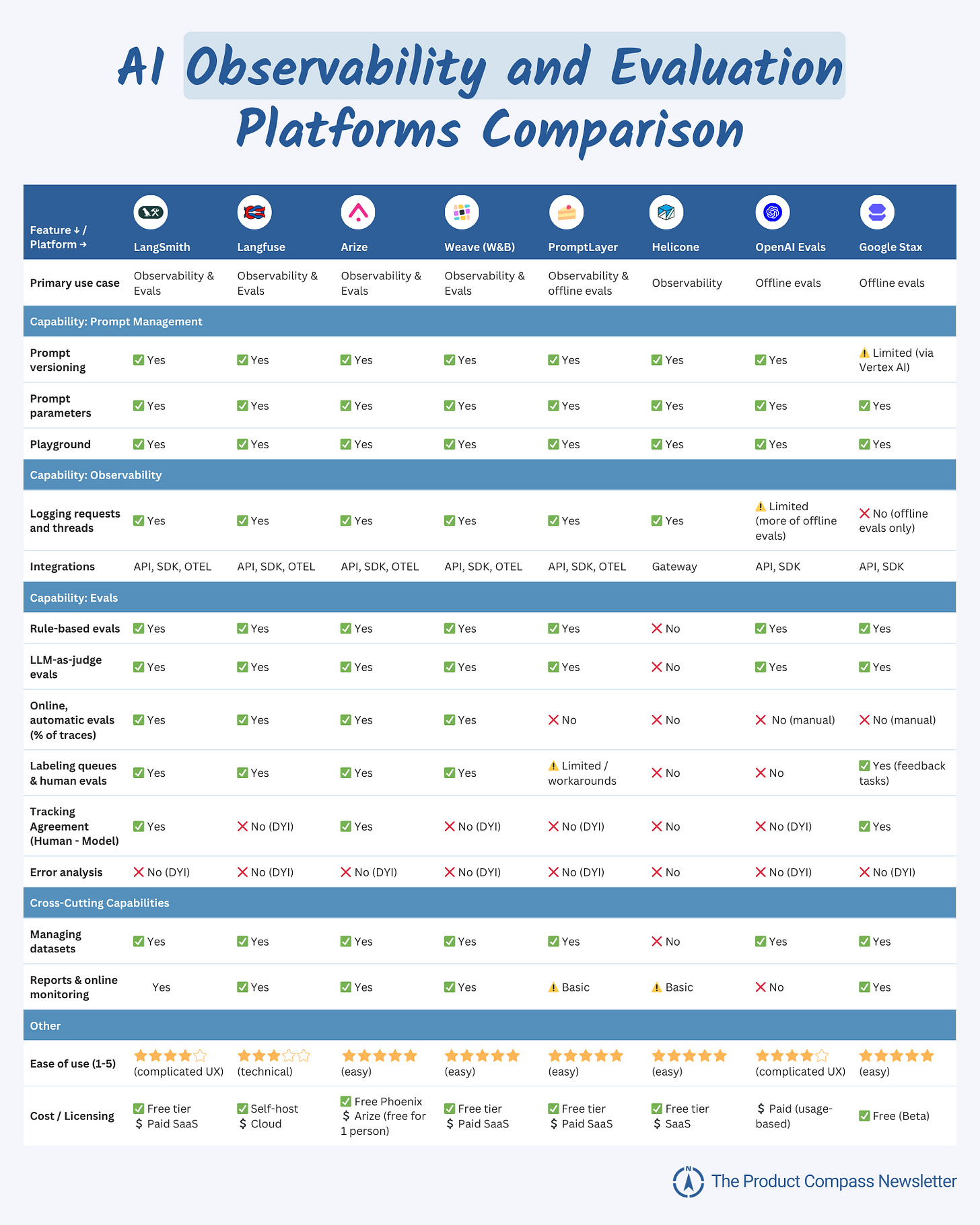
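Continuous monitoring can be reduced to a rolling pass rate with an alert threshold. This sketch assumes each production trace has already been scored pass/fail by an eval. The window size, the 90% threshold, and the minimum sample count are illustrative choices, not recommendations.

```python
from collections import deque

class QualityMonitor:
    """Rolling pass rate over the last `window` evaluated traces, with a simple alert threshold."""

    def __init__(self, window: int = 100, threshold: float = 0.9, min_samples: int = 20):
        self.results: deque[bool] = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def pass_rate(self) -> float:
        return sum(self.results) / len(self.results)

    def record(self, passed: bool) -> bool:
        """Add one eval result; return True if an alert should fire."""
        self.results.append(passed)
        if len(self.results) < self.min_samples:
            return False  # not enough data to judge a drop yet
        return self.pass_rate() < self.threshold

monitor = QualityMonitor(window=50, threshold=0.9, min_samples=20)
stream = [True] * 40 + [False] * 10  # quality drops at the end of the stream
alerts = [i for i, passed in enumerate(stream) if monitor.record(passed)]
print(alerts[0])  # 44
```

The first alert fires at index 44: after 45 results, 40 passes give about 89%, which is below the 90% threshold. Raising `min_samples` trades faster alerts for fewer false alarms on thin traffic.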

2. Comparison of AI Observability and Evals Platforms

The result of my research:

Note: I did my best to test every platform and simplified the answers (e.g., Helicone is deprecating experiments, which were never a fully fledged feature). If I missed something, please let me know and I'll update the comparison.

3. How to Run the Evals Loop Yourself

Theory is nice, but you need a practical workflow. Building something that actually works helps you remember far more than reading articles or listening to podcasts.

Here’s the simplest manual loop with an example of a chatbot you can build in ~30 minutes (requires free Lovable and PromptLayer accounts).
