A PM's Guide to Evaluating AI Agents

Evaluate actions, not words. A step-by-step AI agent evaluation framework with n8n templates and practical examples.

In 2025, we spent many hours discussing AI evals with Hamel Husain (AI Evals course).

As he explained in his first post on AI evals, product teams that succeed obsess over measuring, learning, and iterating. They understand that, more than anything, you need a robust evaluation system.

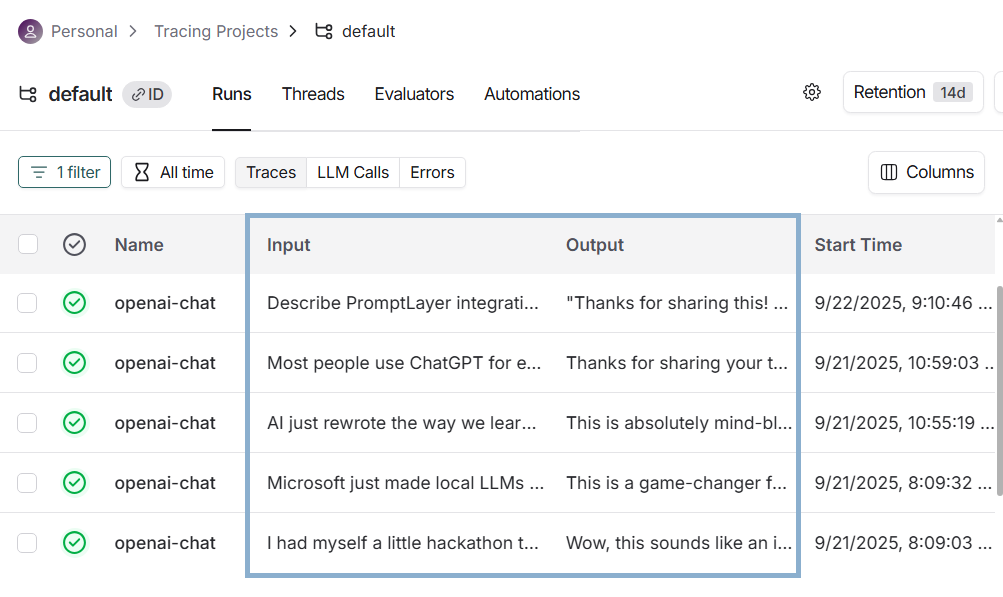

A common approach to evaluating LLMs focuses on reviewing input–output pairs, for example:

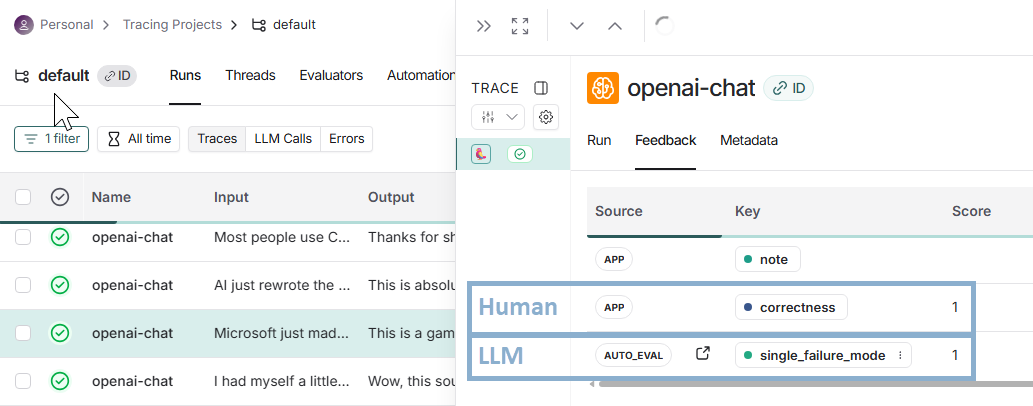

Next, for each trace, we can add metadata (latency, tokens, cost), automatic evals, and human annotations (we discussed available options in The Ultimate Guide to AI Observability and Evaluation Platforms), for example:

This approach works well for chatbots. But even though we can chain traces into threads for multi-turn chats, eval platforms struggle to track complex interactions.

In my experience, this becomes especially painful when working with AI agents, where flows are non-linear and each tool usage triggers a new model call.

But there is a better approach.

In this post, we cover:

AI Evals Recap

How to Evaluate AI Agents (Action-Based Framework)

n8n AI Evaluations: A Quick Review of Default Features

Ready-to-Use n8n Templates to Define Rule-Based Evals for AI Agents

Example: How Would I Evaluate the Trello Assistant Agent

Guardrails in n8n

Advanced: Evaluating Multi-Step Pipelines and Multi-Agent Systems

Let’s dive in.

Updated: 11/3/2025 - Added guardrails

1. AI Evals Recap

Many teams start evaluating their AI systems by measuring metrics such as “helpfulness” or “hallucinations.” But this approach misses domain-specific issues.

A better way is to perform error analysis and let app-specific metrics emerge bottom-up.

Some of the key points we discussed earlier:

Generate LLM traces: use real or synthetic data.

Classify failure modes: define app-specific categories of errors.

Theoretical saturation: repeat until no new failure modes appear. As a rule of thumb, you need about 100 diverse traces.

Fix systematically: for each failure mode, you can:

Do nothing if the problem is rare and not severe.

Fix the prompt.

Implement code-based evaluators for rule-based checks (SQL, XML, keywords).

Implement LLM-as-Judge evaluators for subjective checks (tone, reasoning).

Start with binary metrics: a simple pass/fail is better than a 1-5 scale.

Keep humans in the loop: measure human-model agreement (judge the judge).

Remove friction: make browsing and labeling traces as effortless as possible. A common approach is building custom, app-specific data viewers.

Never stop looking at data: Run evals on % of production calls and verify with humans to prevent drift.

You can learn more about AI evals from these posts:

Critically for PMs, LLM failures are closely related to value and viability risks. As an AI PM, get ready to regularly review and label traces and experiment with prompts.

We discussed that in WTF is an AI Product Manager.

2. How to Evaluate AI Agents (Action-Based Framework)

Based on my implementations, when evaluating agents, the primary thing to evaluate are often tools used by the agent, not only the text output.

In other words, does your agent take the right actions?

Let’s imagine a Trello Assistant Agent that has access to a wide variety of tools.

The agent gets the following user prompt:

Think step by step:

- Create a new list inside https://trello.com/b/yzyPOYHv/projectkanban Kanban board

- Next, use web search to find recent news about Amazon

- Finally, add exactly 10 of the top search results as cards to the Kanban list you created earlier

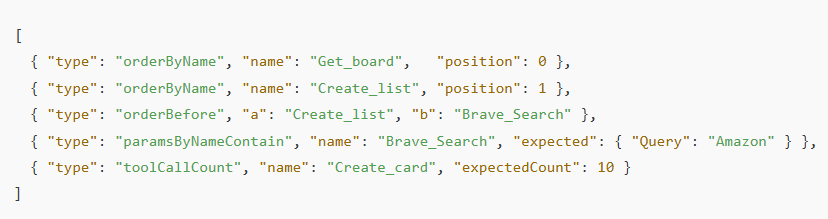

For this specific prompt, I’d like to verify:

Did it try to call “Get_board” as the first action?

Did it try to call “Create_list” as the second action?

Was the tool “Brave_Search” called after the tool “Create_list”?

Did it call “Brave_Search” with “Amazon” in the query?

Did it call “Add_card” 10 times?

How can we do that?

Agents with high autonomy can take multiple paths, depending on the prompt. Hardcoding analogous checks for each possible prompt would be impractical.

An alternative would be to use an LLM-as-Judge, but it wouldn’t be 100% reliable and would still require humans to review the traces. Also, we couldn’t really verify whether the agent found the best possible plan if specific steps weren’t specified in the prompt.

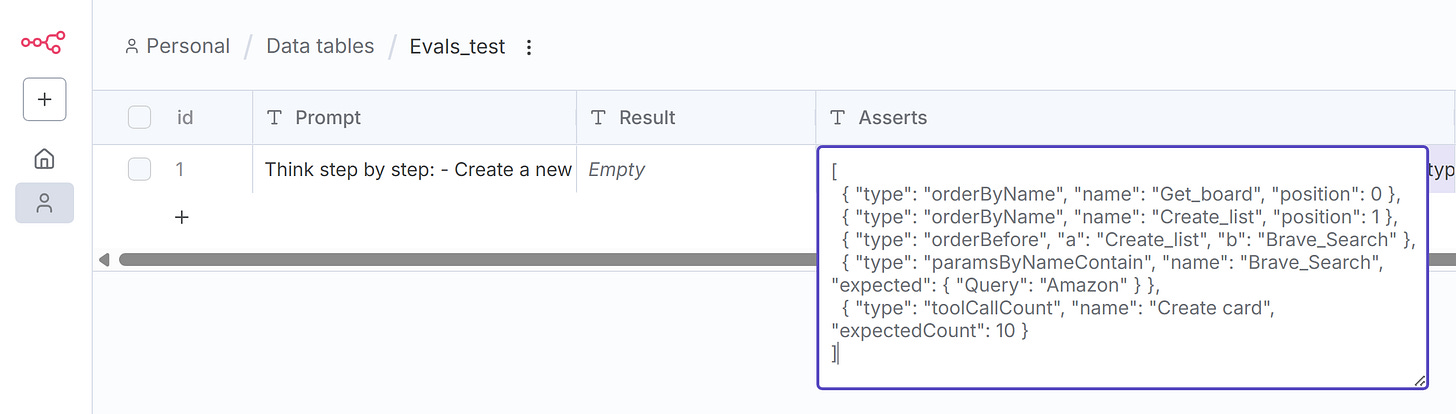

What works for me best is including assertions about the tools in the test dataset, for each input/prompt separately, e.g.:

That way, you can create deterministic unit tests for your agent. Each row describes the desired agent behavior (actions) in a specific scenario.

Notes:

The actions-based framework covers specific checks related to agent actions. When performing error analysis you might discover other failure modes. That's okay .

While I use n8n to illustrate key concepts, this approach is universal.

Later, I’ll share ready-to-use n8n templates you can copy for your own agent evaluations. We will also discuss evaluating agents during our office hours on Tuesday.

But first, let’s briefly review the default n8n eval features with recommended resources.

3. n8n AI Evaluations: A Quick Review of Default Features

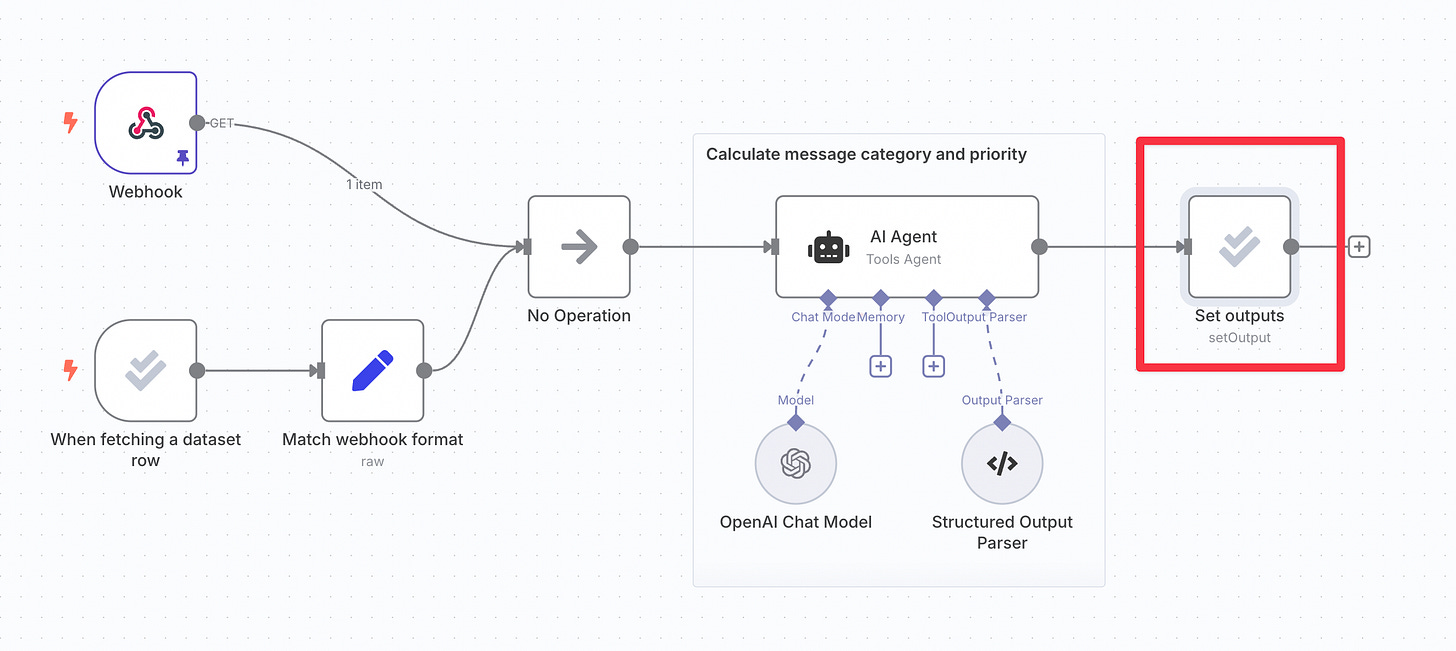

n8n offers two ways to evaluate your AI workflows. Each mode relies on a few special nodes that define how evaluations are run and recorded.

In this post, I want to focus on evaluating agents, not describing the existing n8n features, which they already did perfectly. So, just briefly:

Two n8n evaluation modes to choose from:

3.1 Light evaluations

Theoretically, best for quick prompt checks during development. See 3.4, I do not fully agree with the official documentation.

Building blocks:

Eval Trigger (When fetching a dataset row) - starts an evaluation run. You can connect it to a dataset stored in an n8n Data Table or a Google Sheet. Each row becomes one test case.

Evaluation node/Set Outputs operation - defines what you want to record for each test case (e.g., the model’s final answer). These outputs appear in the same dataset. So, for example, you could use conditional formatting in a Google Sheet to compare “Output” with “Expected Output.”

Check If Evaluating - works for both modes, creates a separate branch executed only during evaluation.

A full, step-by-step guide: Light evaluations by n8n

3.2 Metric-based evaluations

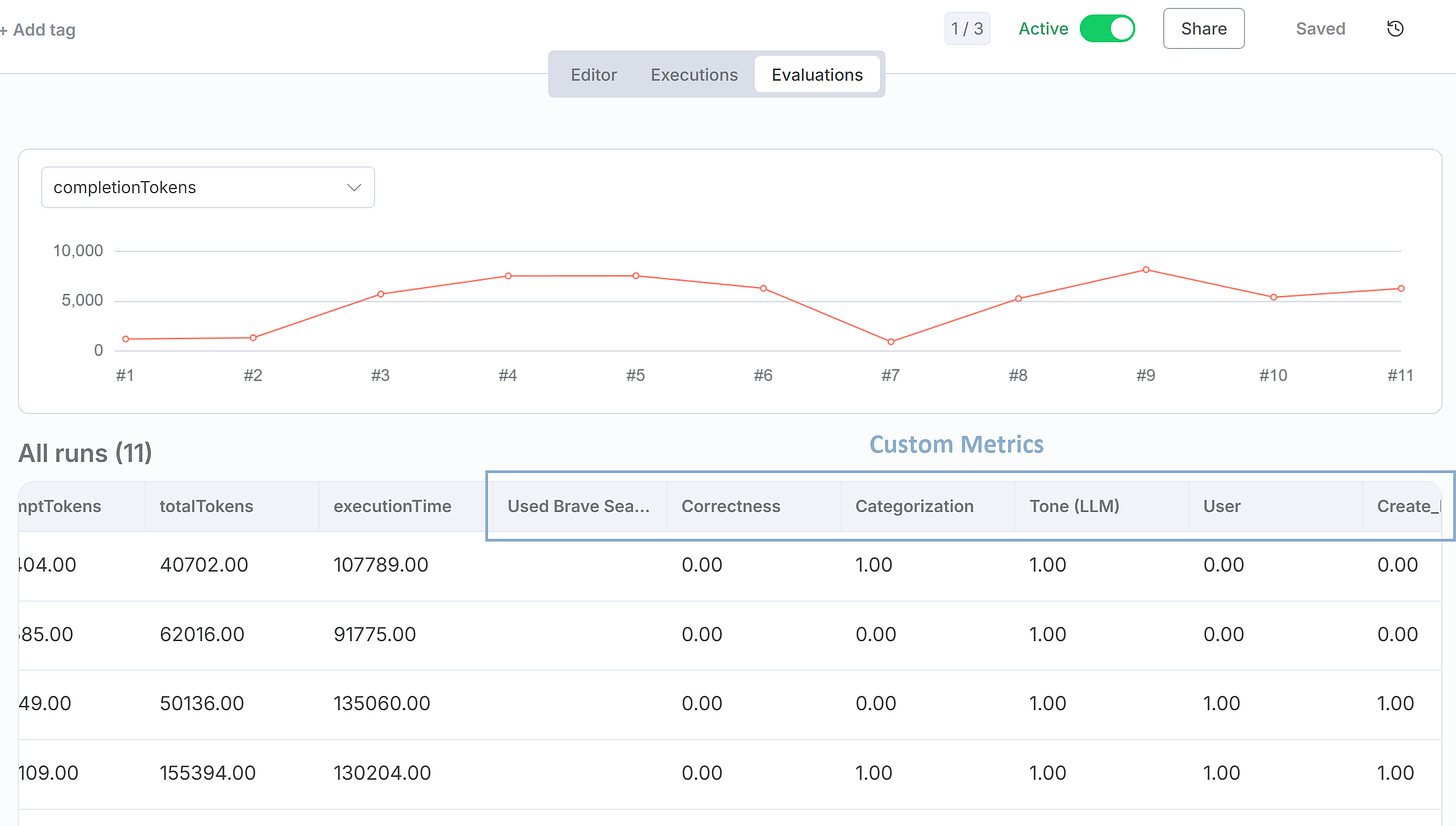

This mode can be used for structured testing and allows you to track progress of your metrics over time.

Additional building blocks:

Evaluation node/Set Inputs operation – the name might be misleading; these are the outputs that show up on the Evaluations tab.

Evaluation node/Set Metrics operation – allows you to use built-in metrics: Correctness, Helpfulness, String Similarity, Categorization, and whether a specific tool was used. It also allows you to set custom metrics that need to be calculated before the node.

Step-by-step guide: Metric-based evaluations by n8n

3.3 Why n8n built-in metrics are insufficient

I find n8n metrics not adjusted to AI agents:

Critical checks like tool parameters, tool call order, tool call count are unavailable. As discussed, we need to evaluate actions - that’s where many AI agents fail the most.

n8n setup works for the entire workflow. It can’t be defined for each test case separately. But agents do not have to be static. What if there are dozens of possible scenarios an agent can execute, depending on the prompt?

3.4 Licensing limitations of n8n metric-based evals

The free Community Edition allows you to enable metric-based evaluations for only one workflow.

The simplest workaround:

Use light evaluations and calculate all metrics in your workflow, without relying on the “Set Metrics.”

Write them back to your dataset using the Set Outputs.

Warning: Before publishing this post, users have reported that “Evaluate All” button disappeared from n8n. This means that temporarily light evaluations can only be triggered for a single test case. n8n is working on fixing the problem (“this week” - commented 6 hours ago).

Next, I will:

Share n8n templates you can use to define agent-specific evals (focus on the actions).

Demonstrate an example of evaluating the Trello Assistant Agent, while following the error analysis process.

On Tuesday, during our office hours, we will build evals for the Trello Assistant Agent together, step-by-step. The Zoom link: premium resources.

🔒4. Ready-to-Use n8n Templates to Define Rule-Based Evals for AI Agents

We start with a dataset that contains test cases and unique rules to check:

An improved formatting for one of the test cases:

Keep reading with a 7-day free trial

Subscribe to The Product Compass to keep reading this post and get 7 days of free access to the full post archives.